Train Models Faster with NVIDIA Cloud GPU for Deep Learning on Hyperstack

Powered by 100% renewable energy

Benefits Of Cloud GPU for Deep Learning

Hyperstack offers access to the best NVIDIA GPUs for deep learning. Their powerful parallel processing capabilities make them essential in DL research and applications.

SLURM integration:

SLURM integration in our Deep Learning solutions offers streamlined job scheduling and workload management, ensuring optimal utilisation of resources for faster, more efficient deep learning model training.

Kubernetes compatibility:

With Kubernetes compatibility, our Deep Learning platform enhances orchestration and automation of containerised applications, providing scalable and resilient infrastructure to handle complex deep learning tasks seamlessly.

High-performance GPUs:

Manage extensive data and execute intricate calculations needed for neural networks, resulting in substantial acceleration in training and inference.

Deep learning libraries:

DL frameworks such as TensorFlow, PyTorch, and MXNet are optimised for GPUs and harness GPU parallelism for faster neural network training.

AI Software Stack:

NVIDIA offers CUDA (Compute Unified Device Architecture) and cuDNN (CUDA Deep Neural Network Library), finely tuned for GPU computing. These libraries streamline analytics and ML workflows.

Real-time Inference:

GPUs slash data processing time for big data analytics, which is vital in finance, healthcare, and science.

Deep Learning Solutions

Training Tools

- DALI (Data Loading Library) is a GPU-accelerated library for optimising data pipelines in deep learning by enhancing data augmentation and image loading.

- cuDNN (CUDA Deep Neural Network library) provides optimised implementations of the operations used in Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), simplifying model creation and training.

- NCCL (NVIDIA Collective Communications Library) accelerates multi-GPU communication using routines like all-gather, reduce, and broadcast, scaling across multiple GPUs within a node and across nodes.

- NVIDIA Clara is an AI platform for healthcare, integrating medical image analysis, data processing, and AI-assisted solutions, all leveraging deep learning.
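To make the NCCL routines named above more concrete, here is a toy sketch in plain Python of what broadcast, all-gather, and reduce compute. It does not call NCCL: each inner list stands in for the buffer held by one GPU (a "rank"), and the function names are illustrative rather than NCCL's API.

```python
from typing import List

# One buffer per simulated GPU rank (hypothetical stand-in for device memory).
Buffers = List[List[float]]


def broadcast(buffers: Buffers, root: int) -> Buffers:
    """Every rank ends up with a copy of the root rank's buffer."""
    return [list(buffers[root]) for _ in buffers]


def all_gather(buffers: Buffers) -> Buffers:
    """Every rank ends up with all buffers concatenated in rank order."""
    gathered = [value for buf in buffers for value in buf]
    return [list(gathered) for _ in buffers]


def reduce_sum(buffers: Buffers, root: int) -> Buffers:
    """Only the root rank receives the element-wise sum; others keep their data."""
    total = [sum(values) for values in zip(*buffers)]
    return [total if rank == root else list(buf)
            for rank, buf in enumerate(buffers)]


if __name__ == "__main__":
    # Pretend each of three GPUs holds a two-element gradient.
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(broadcast(grads, root=0))
    print(all_gather(grads))
    print(reduce_sum(grads, root=0))
```

In a real training job the framework issues these collectives for you, typically to combine gradients computed on different GPUs.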

Inference Tools

- NVIDIA TensorRT is an SDK for accelerated deep learning inference, offering an optimiser and runtime for low-latency, high-throughput inference.

- DeepStream SDK is a toolkit for multi-sensor processing and AI-driven video/image comprehension.

- NVIDIA Triton Inference Server is open-source software for serving DL models, optimising GPU use, and integrated with Kubernetes for orchestration, metrics, and auto-scaling.

- NVIDIA Riva is a GPU-accelerated SDK for speech AI. It streamlines building, customising, and deploying applications such as automatic speech recognition and text-to-speech.

Dev & DevOps Tools

- Nsight Systems is a performance analysis tool that helps identify the biggest optimisation opportunities and tune applications to scale efficiently across any number or size of CPUs and GPUs.

- DLProf (Deep Learning Profiler) is a profiling tool displaying GPU utilisation, Tensor Core-supported operations, and their execution usage.

- Kubernetes on NVIDIA GPUs scales training and inference across multi-cloud GPU clusters. GPU applications and their dependencies are packaged and deployed via Kubernetes for optimal GPU performance in any environment.

- FME (Feature Map Explorer) visualises 4D image feature map data through diverse views, from channel visualisations to detailed numerical data on the entire feature map tensor and channel slices.
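As a rough illustration of the Kubernetes item above, the sketch below builds a Pod manifest that requests GPUs. It assumes the cluster runs the NVIDIA device plugin, which exposes GPUs under the `nvidia.com/gpu` resource name. The pod name, container image, and `train.py` command are placeholders, and nothing here reflects how a particular Hyperstack cluster is configured.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest that asks the scheduler for `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,  # placeholder image name
                    "command": ["python", "train.py"],  # placeholder entry point
                    # GPUs are requested through resource limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("dl-train", "example.registry/trainer:latest", gpus=2)
    # Kubernetes accepts JSON as well as YAML manifests.
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`, once the image and command are replaced with real ones.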

Use Cases

Classify images for self-driving cars, medical imaging diagnosis, and others

Detect fraud by identifying fraudulent activities in financial transactions

Game development, like character animation and enhancing graphics

Analyse vast amounts of data to improve climate models and predict weather patterns

Analyse financial data to predict market trends, assess risk, and optimise investment strategies

Generate new content, such as images, music, and text

NLP tasks like sentiment analysis, language translation, chatbots, and speech recognition

GPUs we Recommend for Deep Learning

For deep learning, Hyperstack recommends the following family of cloud GPUs by NVIDIA to speed up your workloads:

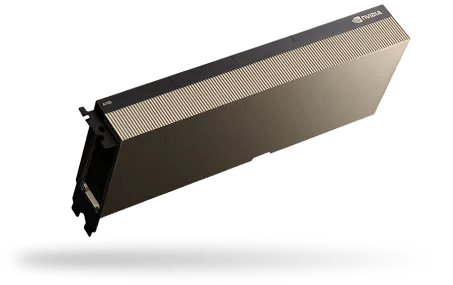

A100

- VFX (Visual effects), animation, video editing, and post-production

- Architectural and scientific visualization

- Virtual reality and augmented reality

- Complex CAD and product design

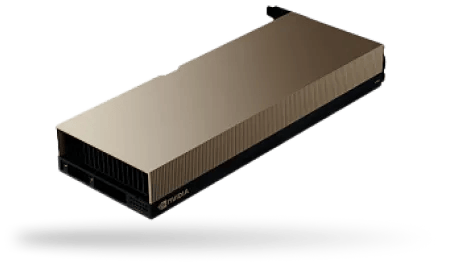

H100 PCIe

- Live 3D and VFX rendering

- Complex CAD and manufacturing product design

- Virtual reality and augmented reality

- Complex CAD and product design

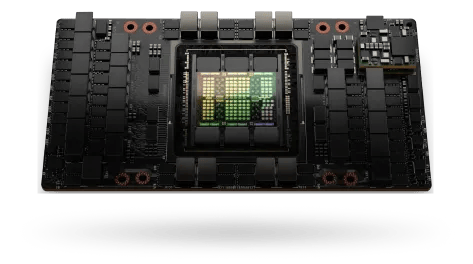

H100 SXM

- Impressive performance gains

- More and faster cores

- Larger cache

- Increased memory bandwidth

Frequently Asked Questions

We build our services around you. Our product support and product development go hand in hand to deliver you the best solutions available.

Which GPU is best for Deep Learning?

We recommend using the A100 or H100 GPUs for Deep Learning workloads.

How to use cloud GPU for Deep Learning?

To use a cloud GPU for deep learning on Hyperstack, you need to:

1. Create your first environment

The first step is to create an environment. Every resource, such as keypairs, virtual machines, and volumes, lives in an environment.

To create an environment, simply enter a name for it and select the region in which you want to create it.

2. Import your first keypair

The next step is to import a public key that you'll use to access your virtual machine via SSH. You'll need to generate an SSH key on your system first.

Then, to import a keypair, simply select the environment in which you want to store it, enter a memorable name for your keypair, and enter the public key of your SSH keypair.
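Before pasting a public key into the import form, it can help to check it locally. The sketch below is not part of Hyperstack's tooling: the `inspect_public_key` helper is hypothetical. It parses one OpenSSH-format public key line, checks that the encoded data matches the declared key type, and computes the SHA256 fingerprint in the same format that `ssh-keygen -lf` prints.

```python
import base64
import hashlib
import struct
from typing import Tuple


def inspect_public_key(line: str) -> Tuple[str, str]:
    """Return (key_type, fingerprint) for one OpenSSH public key line."""
    parts = line.strip().split(None, 2)  # "<type> <base64> [comment]"
    if len(parts) < 2:
        raise ValueError("expected '<type> <base64-data> [comment]'")
    key_type, encoded = parts[0], parts[1]
    try:
        blob = base64.b64decode(encoded, validate=True)
    except ValueError as exc:  # binascii.Error is a ValueError subclass
        raise ValueError("key data is not valid base64") from exc
    if len(blob) < 4:
        raise ValueError("key data is too short")
    # The blob starts with a length-prefixed copy of the key type.
    (type_len,) = struct.unpack(">I", blob[:4])
    embedded = blob[4:4 + type_len].decode("ascii", errors="replace")
    if embedded != key_type:
        raise ValueError("declared key type does not match key data")
    digest = hashlib.sha256(blob).digest()
    fingerprint = "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")
    return key_type, fingerprint


if __name__ == "__main__":
    import sys
    # Usage: python check_key.py ~/.ssh/id_ed25519.pub
    with open(sys.argv[1], encoding="utf-8") as handle:
        print(inspect_public_key(handle.read()))
```

A private key never needs to leave your machine for this step. Only the contents of the `.pub` file are imported.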

3. Create your virtual machine

We're finally here. Now that you've created your environment and keypair, we can proceed to create a virtual machine.

To create your first virtual machine, select the environment where you want to create it, then select a flavor, which is simply the specs of your virtual machine. Next, select the OS image of your choice, enter a memorable name for your virtual machine, and select the SSH key you want to use to access it. Finally, hit the "Deploy" button. Voila, your virtual machine is created.

To learn more, please visit Hyperstack’s Documentation.

Do you need a powerful GPU for machine learning?

Yes, a powerful GPU significantly speeds up training and inference for machine learning tasks. However, simpler tasks can be done with less powerful GPUs or even CPUs.

What is the cost of a Deep Learning GPU?

Our budget-friendly GPU for Deep Learning starts at $2.20 per hour on Hyperstack. Check out our cloud GPU pricing page for current rates.
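For a rough sense of what a training run might cost, the sketch below multiplies an hourly rate by the number of GPUs and the run time. It assumes billing is per GPU per hour and uses the $2.20 starting rate quoted above purely as an example. Actual rates depend on the GPU model, and storage or other charges are not included.

```python
def estimate_cost(rate_per_gpu_hour: float, gpus: int, hours: float) -> float:
    """Estimated compute cost in dollars, assuming per-GPU hourly billing."""
    if rate_per_gpu_hour < 0 or gpus < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    return round(rate_per_gpu_hour * gpus * hours, 2)


if __name__ == "__main__":
    # Example: one GPU for a full day, then eight GPUs for ten hours.
    print(estimate_cost(2.20, gpus=1, hours=24))
    print(estimate_cost(2.20, gpus=8, hours=10))
```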

Is cloud deep learning GPU good for NLP tasks?

Yes. Cloud deep learning GPUs are good for NLP tasks like sentiment analysis, language translation, chatbots, and speech recognition.

Who offers reliable cloud GPUs for deep learning projects?

Hyperstack is a trusted platform offering high-performance GPUs such as A100 and H100 tailored for deep learning. With enterprise-grade infrastructure and low-latency networking, it's ideal for researchers and production teams alike.

Does training deep learning models on cloud GPUs save time?

Yes. Deep learning workloads benefit immensely from parallel processing and large GPU memory, which accelerates training.

See What Our Customers Think...

“This is the fastest GPU service I have ever used.”

Anonymous user

You guys rock!! You have NO IDEA how badly I need a solid GPU cloud provider. AWS/Azure are literally only for enterprise clients at this point, it's impossible to build a highly technical startup and get hit with their ridiculous egress fees. You guys have excellent latency all the way down here to Atlanta from CA.

By far the most important aspect of a cloud provider, only second to cost/quality ratio, is their API. The UI/UX of the console is extremely well designed and I appreciate the quality. So, I’ll be diving into your API deeply. Other GPU providers don’t offer a programmatic way of creating OS images, so the fact that you do is key for me.

Anonymous user