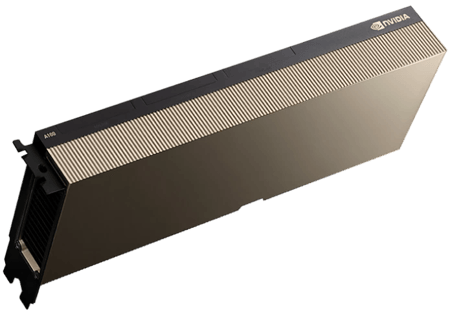

NVIDIA A100

Rent NVIDIA A100 GPU – Experience a 20x Performance Leap

The NVIDIA 80GB A100 Tensor Core GPU delivers unprecedented acceleration for the most demanding AI, data analytics, and HPC workloads. With up to 20x the performance of the previous generation and one of the world's fastest memory bandwidths, the NVIDIA A100 can handle even the largest models and datasets with ease. Available on-demand through Hyperstack!

Unrivalled Performance in…

AI Training & Inference

Up to 20x faster than the previous generation, the A100 handles demanding workloads in AI, analytics, and HPC

Data Analytics

Double the memory of the 40GB A100 (80GB) and world-record 2TB/s bandwidth let it tackle massive datasets and complex models

Precision

Handle diverse workloads with a single accelerator, supporting a broad range of math formats

Scalability

Scale up or down, and adapt to dynamic workloads with ease

NVIDIA A100 80GB

Pricing starts from $0.95/hour

Redefine GPU Performance with NVIDIA A100 80GB

AI and Supercomputing Performance

The NVIDIA A100 takes the Ampere architecture to the extreme, delivering unmatched processing power for AI, data science, and HPC workloads. It provides up to a 20x increase in Tensor FLOPS for deep learning over the previous generation. Combined with NVLink and structural sparsity acceleration, which speeds up sparse models, it delivers breakthrough performance for training and deploying immense neural networks.

Optimised Versatility

This GPU also introduces powerful new technologies to optimise utilisation in data centres. With Multi-Instance GPU (MIG), a single A100 can be partitioned into up to seven isolated GPU instances, each with its own memory, cache, and compute cores, so every job gets right-sized acceleration. Whether tackling enormous distributed jobs or tiny tasks, the A100 lets every user leverage its industry-leading capabilities efficiently.
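To make "right-size acceleration" concrete, the sketch below picks the smallest MIG profile that covers a job's memory requirement. The profile names and per-GPU instance limits are the nominal A100 80GB GPU-instance profiles; usable memory per instance is slightly below the nominal figure, so treat the table as illustrative and confirm it on real hardware with `nvidia-smi mig -lgip`. The helper `pick_mig_profile` is hypothetical, not part of any NVIDIA tool.

```python
from typing import Optional

# Nominal MIG GPU-instance profiles for an A100 80GB, ordered by increasing compute slices.
# Values: (nominal memory in GB, maximum number of such instances on one GPU).
# Illustrative summary only: confirm on real hardware with `nvidia-smi mig -lgip`.
A100_80GB_MIG_PROFILES = {
    "1g.10gb": (10, 7),
    "2g.20gb": (20, 3),
    "3g.40gb": (40, 2),
    "4g.40gb": (40, 1),
    "7g.80gb": (80, 1),
}


def pick_mig_profile(required_gb: float) -> Optional[str]:
    """Hypothetical helper: smallest profile whose memory covers required_gb, else None."""
    for name, (mem_gb, _max_instances) in A100_80GB_MIG_PROFILES.items():
        if mem_gb >= required_gb:
            return name
    return None


if __name__ == "__main__":
    for need in (6, 18, 35, 90):
        profile = pick_mig_profile(need)
        if profile is None:
            print(f"{need} GB: does not fit in a single A100 80GB")
        else:
            _, max_n = A100_80GB_MIG_PROFILES[profile]
            print(f"{need} GB -> {profile} (up to {max_n} per GPU)")
```

Because the dictionary is ordered by compute slices, a 35GB job lands on `3g.40gb` rather than `4g.40gb`, leaving room for a second 40GB instance on the same GPU.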

Benefits of NVIDIA A100

Unmatched Versatility

Powered by NVIDIA Ampere architecture, the A100 adapts to your needs. Connect multiple GPUs with NVLink for massive tasks. Maximise the potential of every GPU in your data centre, 24/7.

Fast Deep Learning

Experience up to 20x the performance of the previous generation with 3rd-gen Tensor Cores. The A100 delivers 312 teraflops of dense FP16/BF16 Tensor Core performance for training and inference, accelerating your deep learning work like never before.
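One way to turn a peak figure like 312 teraflops into a planning number is the common "6 × parameters × tokens" approximation for the compute cost of training a dense transformer. The sketch below assumes a hypothetical sustained utilisation of 40% of peak; real utilisation depends heavily on model, batch size, and software stack.

```python
# Back-of-envelope training time from the widely used "6 * N * D" approximation:
# training a dense transformer costs roughly 6 FLOPs per parameter per token.
# Assumption: sustained utilization is a hypothetical fraction of peak (40% by default).

A100_PEAK_BF16_TFLOPS = 312.0  # dense FP16/BF16 Tensor Core peak quoted for the A100
SECONDS_PER_DAY = 86_400.0


def estimate_training_days(
    num_params: float,
    num_tokens: float,
    num_gpus: int,
    utilization: float = 0.4,
) -> float:
    """Estimate wall-clock days to train a dense model on `num_gpus` A100s."""
    total_flops = 6.0 * num_params * num_tokens
    sustained_flops_per_second = A100_PEAK_BF16_TFLOPS * 1e12 * utilization * num_gpus
    return total_flops / sustained_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical job: a 1B-parameter model trained on 20B tokens across 8 GPUs.
    days = estimate_training_days(1e9, 20e9, num_gpus=8)
    print(f"~{days:.1f} days")
```

For the hypothetical job above this works out to roughly 1.4 days; doubling the GPU count halves the estimate, since the sketch assumes perfect scaling.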

Breakthrough Interconnection

The A100's third-generation NVLink, paired with NVSwitch, connects up to 16 GPUs with 600GB/s of bandwidth per GPU, creating the ultimate single-server performance platform.
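To see what 600GB/s means for multi-GPU training, here is an idealised estimate of a ring all-reduce, the collective commonly used to synchronise gradients. It assumes about 300GB/s per direction (half of the 600GB/s bidirectional figure) and ignores latency and overlap with compute, so it is a lower bound on communication time rather than a benchmark.

```python
# Idealized ring all-reduce time for synchronizing gradients over NVLink.
# Assumption: each GPU gets ~300 GB/s per direction (half of the 600 GB/s
# aggregate bidirectional NVLink figure); latency and compute overlap are ignored.

NVLINK_GBPS_PER_DIRECTION = 300.0


def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           link_gbps: float = NVLINK_GBPS_PER_DIRECTION) -> float:
    """Each GPU sends (and receives) 2 * (n - 1) / n of the payload in a ring all-reduce."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    bytes_sent_per_gpu = 2.0 * (num_gpus - 1) / num_gpus * payload_bytes
    return bytes_sent_per_gpu / (link_gbps * 1e9)


if __name__ == "__main__":
    # Hypothetical example: 7B parameters of BF16 gradients (2 bytes each) on 8 GPUs.
    grad_bytes = 7e9 * 2
    print(f"{ring_allreduce_seconds(grad_bytes, 8) * 1000:.0f} ms per all-reduce")
```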

World-Record Memory Bandwidth

The A100 pairs up to 80GB of HBM2e memory with a groundbreaking 2TB/s of bandwidth, the first GPU to reach that mark. Enjoy 1.7x higher bandwidth than the previous generation and 95% DRAM utilisation efficiency.
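Memory bandwidth often sets the speed limit for large-model inference. The sketch below uses the page's 2TB/s figure to compute how long one full sweep of memory takes and an upper bound on single-stream decoding speed, under the simplifying assumption that each generated token at batch size 1 reads every weight once. Real throughput will be lower.

```python
# Memory-bandwidth arithmetic for the ~2 TB/s figure.
# Assumption: at batch size 1, generating each token reads every weight once,
# so bandwidth / weight bytes is an upper bound on decode speed (real throughput is lower).

A100_80GB_BANDWIDTH_BYTES_PER_S = 2.0e12  # ~2 TB/s


def seconds_to_read(num_bytes: float, bandwidth: float = A100_80GB_BANDWIDTH_BYTES_PER_S) -> float:
    """Time to stream `num_bytes` from GPU memory once at full bandwidth."""
    return num_bytes / bandwidth


def max_decode_tokens_per_second(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Upper bound on batch-1 tokens/s for a model stored at `bytes_per_param` (2 = BF16)."""
    weight_bytes = num_params * bytes_per_param
    return 1.0 / seconds_to_read(weight_bytes)


if __name__ == "__main__":
    print(f"Full 80 GB sweep: {seconds_to_read(80e9) * 1000:.0f} ms")
    print(f"7B BF16 model: <= {max_decode_tokens_per_second(7e9):.0f} tokens/s at batch size 1")
```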

Multi-Instance Flexibility

Use Multi-Instance GPU (MIG) to optimise resource allocation for every task, expand access to more users, and get more out of every GPU.

AI-Driven Insights at Scale

The A100 enables real-time decision-making and complex data analysis across various industries, transforming the way businesses operate and innovate in an AI-accelerated world.

Technical Specifications

Available on

Hyperstack

Frequently Asked Questions

Frequently asked questions about the NVIDIA A100.

What is the NVIDIA A100 80GB GPU card used for?

The NVIDIA A100 80GB is a robust GPU designed for AI workloads. For training, it handles the massive datasets and complex calculations needed to train large language models for tasks such as writing, translation, and code generation. For inference, A100s run trained models that power applications like image recognition and speech-to-text.

What type of memory is NVIDIA A100?

The NVIDIA A100 80GB uses HBM2e high-bandwidth memory, which allows fast data transfer between the GPU and its memory, crucial for AI tasks.

What is the current GPU A100 price?

On Hyperstack, the NVIDIA A100 costs $1.35/hr.

How easy is it to set up and use the NVIDIA A100?

With Hyperstack, you can spin up an A100 in minutes with just a few clicks. Sign in and explore for free to see for yourself!

How much power does each NVIDIA A100 GPU consume?

The A100 80GB PCIe has a maximum thermal design power (TDP) of 300W, while the SXM version is rated at up to 400W.

What is the hourly cost of using an NVIDIA A100 GPU on-demand?

The on-demand cost of an NVIDIA A100 GPU on Hyperstack is $1.35/hr.
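To budget a job, multiply the hourly rate by the number of GPUs and the hours used. The sketch below uses the $1.35/hr on-demand rate quoted here as a default parameter, since prices can change, and assumes cost scales linearly with GPU-hours; actual billing granularity may differ. It uses `Decimal` to avoid floating-point rounding on currency.

```python
from decimal import Decimal, ROUND_HALF_UP

# On-demand price quoted on this page; treat it as an input, since prices can change.
A100_ON_DEMAND_USD_PER_GPU_HOUR = Decimal("1.35")


def on_demand_cost(num_gpus: int, hours: Decimal,
                   rate: Decimal = A100_ON_DEMAND_USD_PER_GPU_HOUR) -> Decimal:
    """Cost in USD of running `num_gpus` A100s for `hours`, rounded to cents.

    Assumes cost is linear in GPU-hours (billing granularity is not modelled).
    """
    return (rate * num_gpus * hours).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # Hypothetical jobs: 8 GPUs for one day, and 1 GPU for 90 minutes.
    print(on_demand_cost(8, Decimal(24)))     # 259.20
    print(on_demand_cost(1, Decimal("1.5")))  # 2.03 (2.025 rounded half up)
```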

Which platforms offer the lowest on-demand pricing for NVIDIA A100 GPUs?

Hyperstack offers one of the most competitive prices at $1.35/hour for NVIDIA A100 GPUs.

How can I rent the NVIDIA A100 GPU?

You can rent the NVIDIA A100 GPU by signing in to the Hyperstack console.

Is the A100 80GB good for LLM training?

Yes. Its high memory bandwidth and large 80GB VRAM make it excellent for mid- to large-scale LLM training and multi-GPU scaling.
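A quick way to judge whether a model fits is to count bytes per parameter. A common rule of thumb for mixed-precision training with the Adam optimiser is about 16 bytes per parameter (BF16 weights and gradients, FP32 master weights, and two FP32 optimiser moments). The sketch below applies that rule and assumes model state can be sharded evenly across GPUs (as ZeRO-3 or FSDP-style training does); it ignores activations and framework overhead, so real requirements are higher.

```python
import math

# Rough memory needed for model state in mixed-precision training with Adam:
# BF16 weights (2 B) + BF16 gradients (2 B) + FP32 master weights (4 B)
# + two FP32 Adam moments (8 B) = 16 bytes per parameter.
# Activations, temporary buffers, and framework overhead are NOT included.
BYTES_PER_PARAM_MIXED_PRECISION_ADAM = 16
A100_MEMORY_GB = 80.0


def training_state_gb(num_params: float) -> float:
    """Model + optimizer state in GB under the 16-bytes-per-parameter rule of thumb."""
    return num_params * BYTES_PER_PARAM_MIXED_PRECISION_ADAM / 1e9


def min_gpus_for_state(num_params: float, gpu_memory_gb: float = A100_MEMORY_GB) -> int:
    """Lower bound on A100s needed, assuming state is sharded evenly across GPUs."""
    return math.ceil(training_state_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    for params in (1e9, 5e9, 7e9, 70e9):
        print(f"{params / 1e9:.0f}B params: {training_state_gb(params):.0f} GB "
              f"of state, >= {min_gpus_for_state(params)} x A100 80GB")
```

Under this rule a single 80GB card holds the training state of roughly a 5B-parameter model, which is why larger LLMs rely on multi-GPU scaling.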