Key Takeaways

-

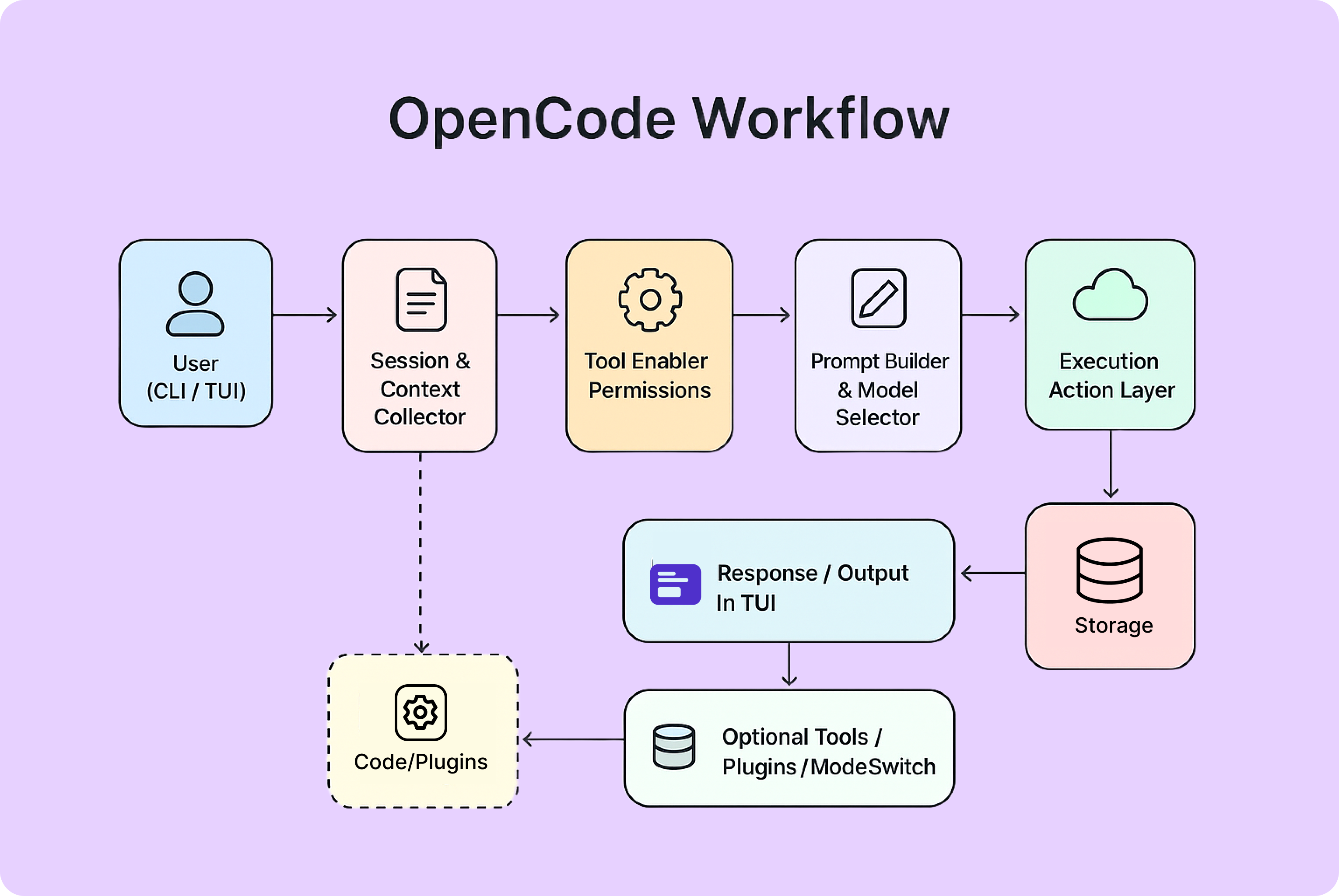

OpenCode provides an open-source environment for building and running LLM-powered workflows through a CLI or interactive interface.

-

Hyperstack AI Studio offers a managed platform for deploying and serving large language models via an OpenAI-compatible API.

-

Hyperstack’s API compatibility allows it to be integrated into OpenCode with minimal configuration changes.

-

The setup process includes installing OpenCode, generating a Hyperstack API key, and configuring a model endpoint.

-

After authentication, OpenCode can send prompts to Hyperstack models and receive responses for validation.

-

The integration enables developers to use custom or fine-tuned Hyperstack models within OpenCode workflows.

The rapid growth of large language models (LLMs) has transformed how developers build, test, and deploy intelligent applications. Instead of relying solely on fixed APIs, engineers now have the flexibility to integrate custom models into their workflows, enabling faster iteration and deeper customisation.

Two tools that play a significant role in this transformation are OpenCode and Hyperstack AI Studio.

Understanding OpenCode

What is OpenCode?

OpenCode is an open-source AI development platform that allows developers to build, deploy, and manage LLM-powered applications seamlessly. It provides a unified interface to interact with multiple AI providers, execute prompts, and run agents all from the command line or through an interactive studio environment.

Built with extensibility in mind, OpenCode supports OpenAI-compatible APIs, meaning developers can plug in custom or third-party endpoints, such as those hosted on Hyperstack, without needing to modify existing code.

Why use OpenCode?

OpenCode simplifies AI application development through:

- Unified Interface: Manage and run LLMs from multiple providers in one consistent environment.

- Extensibility: Easily integrate OpenAI-compatible APIs, including private endpoints like Hyperstack.

- Interactive Studio: Provides a terminal-based chat and development experience for quick experimentation.

- Agent Support: Allows building AI agents capable of reasoning and executing structured tasks.

- Developer Friendly: Works cross-platform and integrates with popular package managers like npm and Homebrew.

With OpenCode, you can rapidly test prompts, build LLM-backed workflows, and connect with your preferred model providers — including custom or fine-tuned models hosted on Hyperstack AI Studio.

You can learn more about OpenCode from its official documentation.

Introduction to Hyperstack AI Studio

What is Hyperstack AI Studio?

Hyperstack AI Studio is a fully managed generative AI platform that provides everything needed to train, fine-tune, evaluate, and deploy large language models. It enables developers and enterprises to customize LLMs without worrying about infrastructure management.

Hyperstack’s API is OpenAI SDK compatible, which means any client or tool that works with OpenAI’s API can seamlessly integrate with Hyperstack — including OpenCode.

This compatibility allows developers to use Hyperstack-hosted or fine-tuned models directly inside OpenCode with only minimal configuration.

You can browse available base models in the Hyperstack documentation.

Why use Hyperstack AI Studio?

Hyperstack AI Studio is designed to provide the flexibility and power needed for modern AI development. Here are a few key benefits:

- OpenAI-compatible API: Works seamlessly with tools like OpenCode, LangChain, or custom applications.

- Model variety: Choose from a catalog of open-source base models, including the popular openai/gpt-oss-120b.

- Custom fine-tuning: Train models on proprietary data for domain-specific applications.

- Evaluation framework: Benchmark fine-tuned models using built-in evaluation tools.

- Playground: Interactively test and refine prompts before production deployment.

- Scalable and cost-effective: Optimized for performance with GPU-backed infrastructure.

- Secure and private: Data isolation ensures that your proprietary data remains protected.

- Easy deployment: Export models as OpenAI-compatible endpoints ready for integration.

Hyperstack serves as the model layer, while OpenCode acts as the application interface — together, they provide an efficient and customizable AI development stack.

Why Hyperstack AI Studio is Perfect for OpenCode

Integrating Hyperstack AI Studio with OpenCode brings a powerful combination for developers looking to build intelligent applications. Here’s a quick comparison of their features:

| Aspect | Hyperstack AI Studio | OpenCode |
|---|---|---|
| Function | LLM hosting, fine-tuning, evaluation | LLM client, interface, agent runner |
| API Compatibility | OpenAI-compatible endpoints | Supports OpenAI schema |
| Customization | Fine-tuned models on proprietary data | Connects to multiple backends |
| Scalability | GPU-backed inference infrastructure | Lightweight local runtime |
| Testing | Interactive Playground | Command-line and Studio testing |

In simple terms, Hyperstack provides the intelligence, and OpenCode provides the interface.

By integrating the two, you can use your fine-tuned Hyperstack models directly in OpenCode’s AI workflows — achieving full control over both inference and customization.

Integration of Hyperstack AI Studio with OpenCode

Now that we understand the importance of both tools, let’s walk through how to connect them step by step.

Step 1: Install OpenCode

If you haven’t already installed OpenCode, there are multiple installation methods available.

Option 1: Install via Homebrew (macOS / Linux)

The simplest way to install OpenCode on macOS or Linux is through Homebrew:

# Install the latest version of OpenCode using Homebrew

brew install sst/tap/opencode

This will start the installation process by fetching the necessary files and dependencies.

Option 2: Install via npm (Cross-Platform)

Another way to install OpenCode is using npm, which works on any platform with Node.js installed:

# Install OpenCode globally via npm

npm install -g opencode-ai

As with Homebrew, this command pulls the required packages and sets up OpenCode on your system.
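Whichever installer you used, it can be worth confirming that the `opencode` executable is actually on your `PATH` before moving on. The snippet below is a minimal sketch using only the Python standard library; the helper name `find_cli` is hypothetical and not part of OpenCode.

```python
import shutil


def find_cli(name="opencode"):
    """Return the full path of an executable on PATH, or None if it is missing."""
    return shutil.which(name)


location = find_cli()
if location:
    print(f"opencode found at {location}")
else:
    print("opencode is not on PATH; re-run the installer or open a new terminal")
```

If the second message appears right after an npm install, the global npm bin directory may simply not be on your `PATH` yet.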

Great! Now that you have OpenCode installed, we can start configuring it to use Hyperstack AI Studio as the backend for LLM inference.

Step 2: Retrieve Hyperstack API Details

Before configuring OpenCode, collect the details needed to connect it to your Hyperstack model: the base URL, the model ID, and an API key.

- Go to the Hyperstack Console and log in with your credentials.

- Navigate to the AI Studio Playground to explore the available models before integrating them with OpenCode.

In the Playground, try out a few models in the interface and select the one you want to use. We are going with openai/gpt-oss-120b for this integration.

Then click on the API section to get the Base URL and Model ID.

The available models are also listed on the base model documentation page. Copy the model ID and base URL; we will need them when we configure OpenCode.

Step 3: Generating an API Key

To authenticate, we will need a valid API key from Hyperstack AI Studio.

- Go to the API Keys section in the Hyperstack console.

- Click Generate New Key.

- Give it a name (e.g., opencode-integration-key).

- Copy the generated key; we will use it when configuring OpenCode.

With the base URL, model ID, and API key in hand, we can now set up OpenCode.
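To make it concrete how these three values fit together, here is a minimal sketch of the kind of request an OpenAI-compatible client sends. It uses only the Python standard library. The `/chat/completions` path and the `Authorization: Bearer` header are assumptions based on the usual OpenAI layout rather than details confirmed in this article, and the helper names `build_chat_request` and `send` are hypothetical. The request is built but not sent, so nothing leaves your machine until you call `send` yourself.

```python
import json
import urllib.request

# Values taken from this article; replace them with the ones shown in your console.
BASE_URL = "https://console.hyperstack.cloud/ai/api/v1"
MODEL_ID = "openai/gpt-oss-120b"


def build_chat_request(base_url, model_id, api_key, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Assumption: the endpoint follows the usual layout <base>/chat/completions.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model_id,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumption: the key is passed as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send a prepared request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_chat_request(BASE_URL, MODEL_ID, "your_hyperstack_api_key_here", "hi")
print(request.get_method(), request.full_url)
```

Calling `send(request)` with a real key is a quick way to check the endpoint independently of OpenCode, which helps narrow down whether a later failure comes from the credentials or from the OpenCode configuration.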

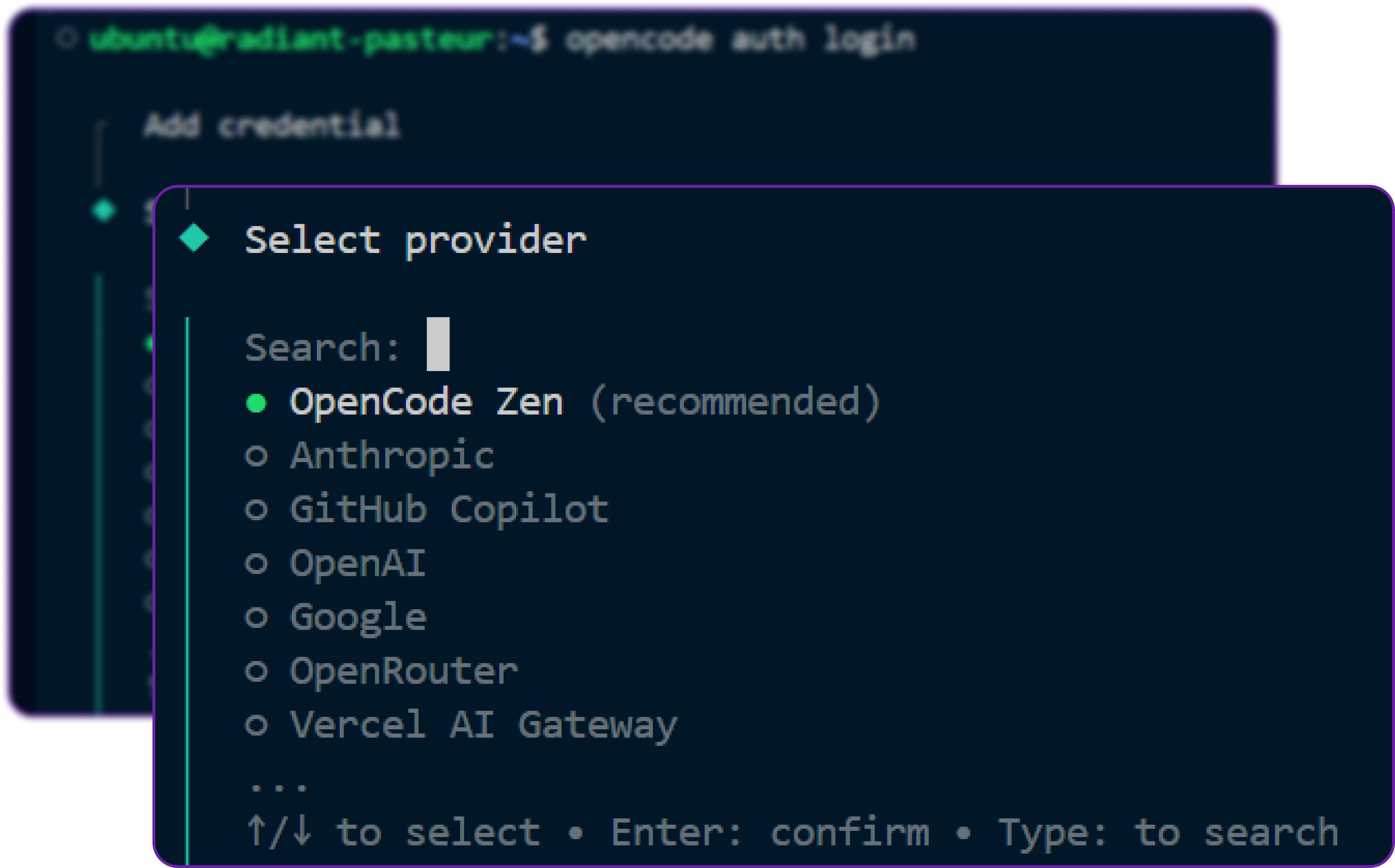

Step 4: Configure OpenCode Authentication

Next, we will authenticate OpenCode with Hyperstack as a provider.

The first step is to run the OpenCode login command, which lists all the authentication providers that OpenCode supports.

# Log in to OpenCode authentication

opencode auth login

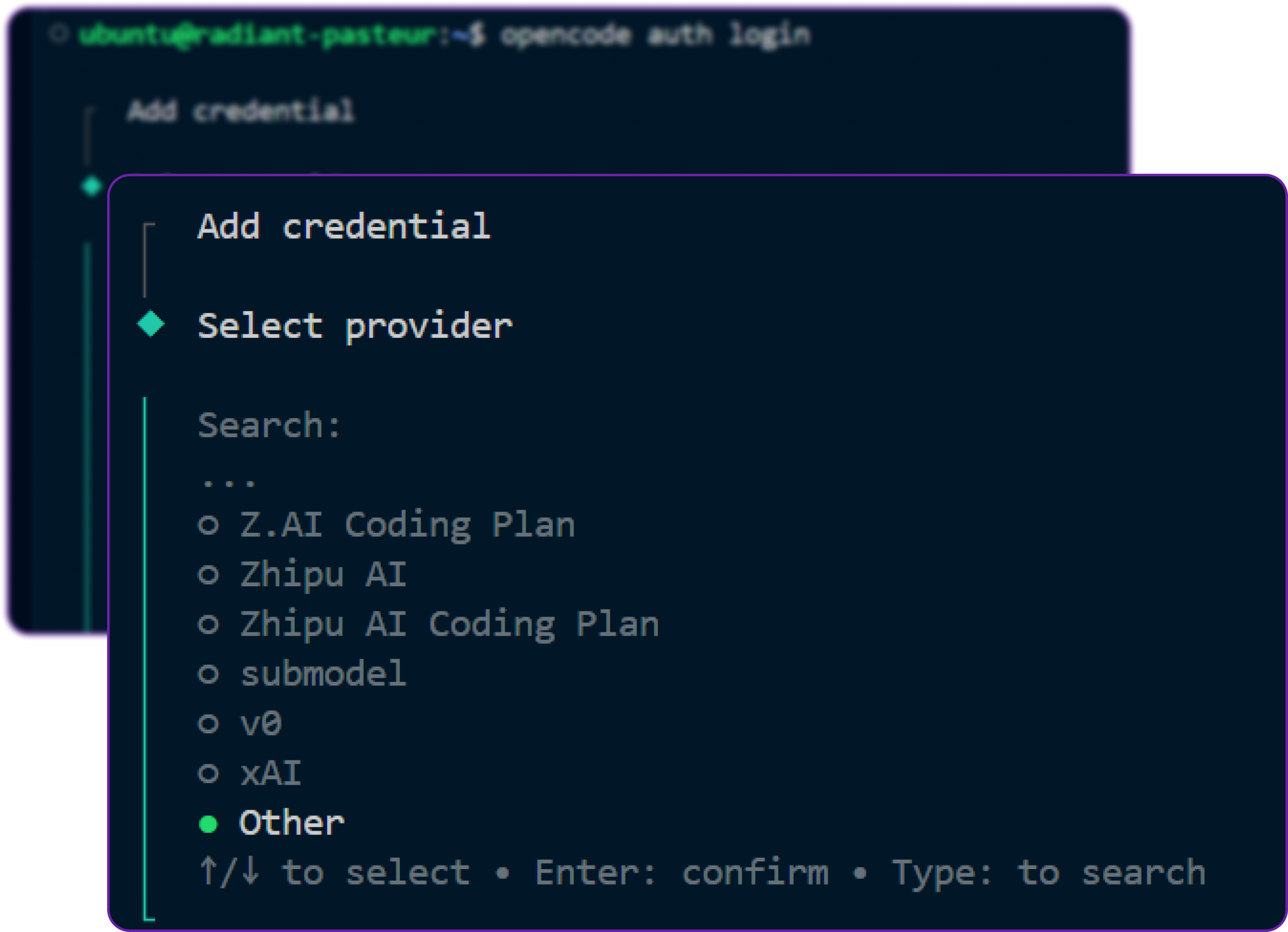

You will see a list of available authentication providers. Scroll to the bottom of the list and select “Other”, which lets us add Hyperstack as a custom provider.

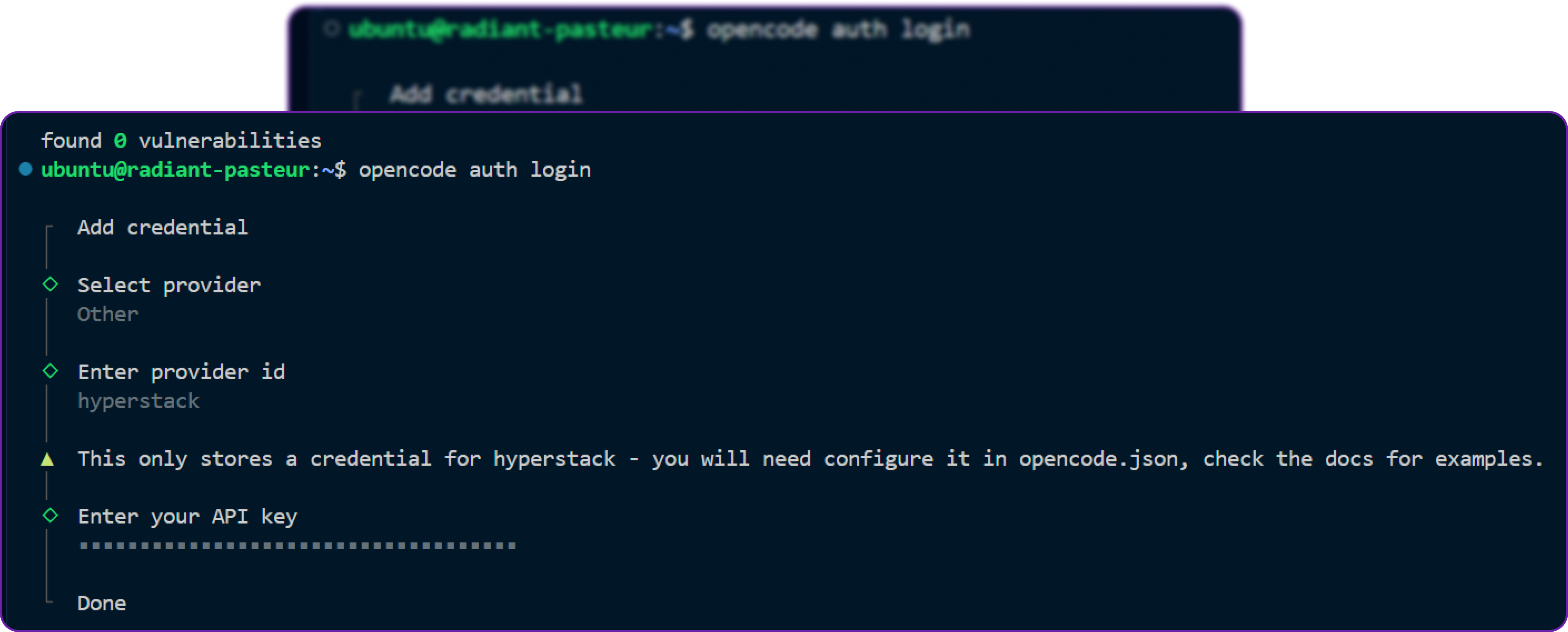

When you select “Other”, OpenCode prompts you to enter an ID for your custom provider. Enter:

hyperstack

This ID will help OpenCode identify Hyperstack as the authentication provider and make it distinct from other providers.

Next, provide the API Key you copied from Hyperstack AI Studio.

Once entered, OpenCode will authenticate the connection and securely store the API key for future use.

Now that authentication is set up, we can move on to configuring the connection details.

Step 5: Create Configuration File for Hyperstack

Now, create a configuration file in your project’s root directory named opencode.json.

This file is the most crucial part of the setup, as it tells OpenCode how to connect to Hyperstack and which model to use. In Step 4 we only registered the provider ID and its API key; here we supply the full configuration.

    {
      "$schema": "https://opencode.ai/config.json",
      "provider": {
        "hyperstack": {
          "npm": "@ai-sdk/openai-compatible",
          "name": "Hyperstack AI",
          "options": {
            "baseURL": "https://console.hyperstack.cloud/ai/api/v1",
            "apiKey": "{env:HYPERSTACK_API_KEY}"
          },
          "models": {
            "openai/gpt-oss-120b": {
              "name": "openai/gpt-oss-120b"
            }
          }
        }
      }
    }

Explanation:

- The baseURL points to Hyperstack’s API endpoint.

- The apiKey references an environment variable for security.

- The models section maps OpenCode’s interface to the specific Hyperstack model you wish to use. Each key is the model ID sent to Hyperstack, and name is the label shown in OpenCode.

You can view all available models in the Hyperstack model catalog.

We are using openai/gpt-oss-120b as an example here, but you can replace it with any other model available on Hyperstack AI Studio.
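Because a typo in this file tends to surface only as a failed request later, a small sanity check can save some debugging. The sketch below is a hypothetical helper, not OpenCode's own validation: `check_provider` simply mirrors the recommendations in this article (an HTTPS base URL, an API key supplied through an `{env:...}` reference, and at least one model ID). The sample mirrors this walkthrough's configuration inline; in practice you would load your own opencode.json instead.

```python
import json
import re

# Matches the {env:VARIABLE_NAME} placeholder style used in this article.
ENV_REF = re.compile(r"^\{env:([A-Za-z_][A-Za-z0-9_]*)\}$")


def check_provider(config, provider_id):
    """Return a list of human-readable problems found in one provider entry."""
    provider = config.get("provider", {}).get(provider_id)
    if provider is None:
        return [f"provider '{provider_id}' is not defined"]

    problems = []
    options = provider.get("options", {})
    if not options.get("baseURL", "").startswith("https://"):
        problems.append("options.baseURL should be an https:// URL")
    if not ENV_REF.match(options.get("apiKey", "")):
        problems.append("options.apiKey should look like {env:VARIABLE_NAME}")
    if not provider.get("models"):
        problems.append("models should list at least one model ID")
    return problems


sample = json.loads("""
{
  "provider": {
    "hyperstack": {
      "npm": "@ai-sdk/openai-compatible",
      "options": {
        "baseURL": "https://console.hyperstack.cloud/ai/api/v1",
        "apiKey": "{env:HYPERSTACK_API_KEY}"
      },
      "models": {"openai/gpt-oss-120b": {"name": "openai/gpt-oss-120b"}}
    }
  }
}
""")

print(check_provider(sample, "hyperstack"))  # an empty list means no problems found
print(check_provider(sample, "other"))
```

To check a real file, replace the inline sample with `json.load(open("opencode.json"))` and pass the provider ID you entered during `opencode auth login`.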

Step 6: Set Environment Variable

Next, set the environment variable that holds the Hyperstack API key by running the following command in your terminal:

export HYPERSTACK_API_KEY="your_hyperstack_api_key_here"

Before running the above command, make sure to replace your_hyperstack_api_key_here with the actual API key you generated from Hyperstack.
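Once the variable is exported, you may want to confirm that child processes can see it without printing the whole secret to your terminal or shell history. The following is a minimal sketch; `describe_key` is a hypothetical helper, and showing only the first four characters is an arbitrary choice.

```python
import os


def describe_key(name="HYPERSTACK_API_KEY", visible=4):
    """Report whether an environment variable is set, hiding most of its value."""
    value = os.environ.get(name, "")
    if not value:
        return f"{name} is not set"
    shown = value[:visible]
    masked = "*" * (len(value) - len(shown))
    return f"{name} is set: {shown}{masked}"


print(describe_key())
```

Run it from the same terminal session in which you executed `export`, since the variable is only visible to processes started from that shell.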

To verify that the environment variable is set correctly, you can run:

# It will prints your hyperstack api key

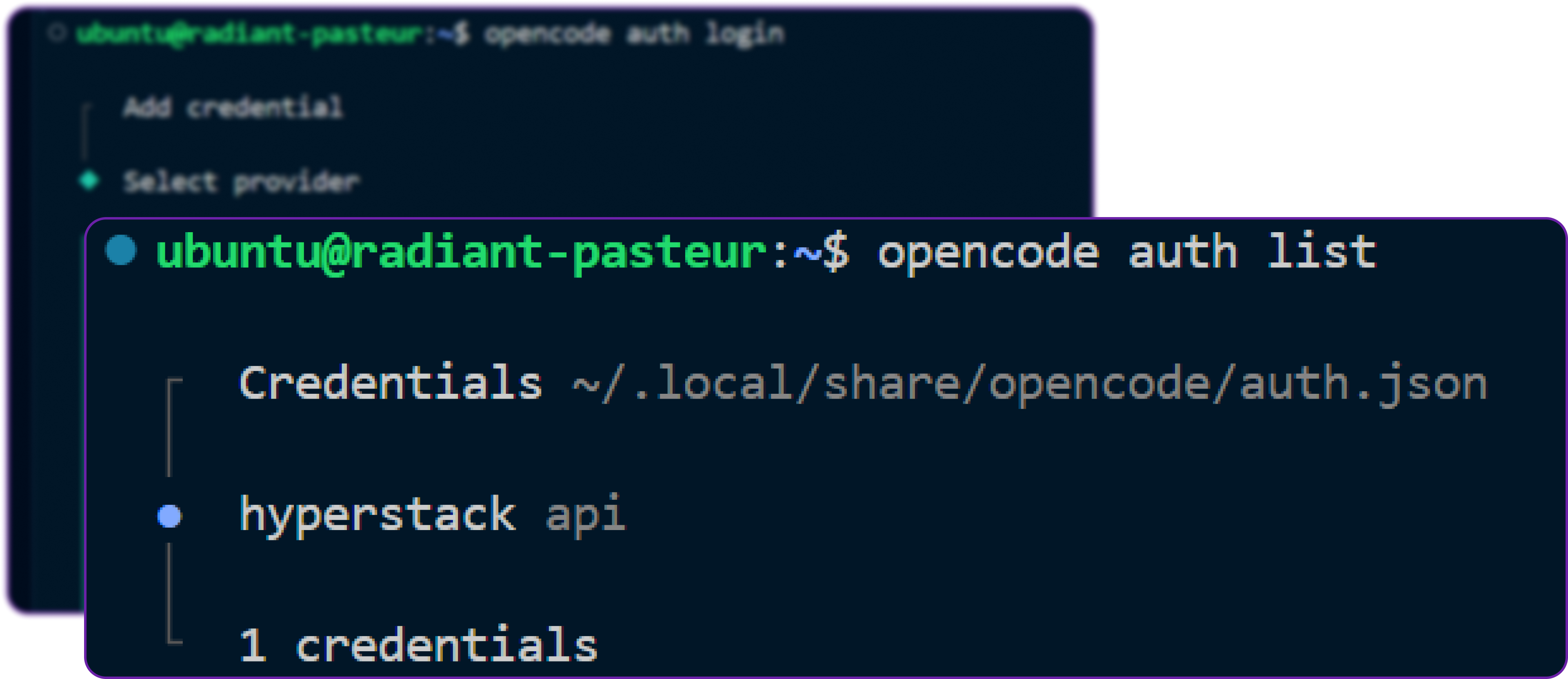

echo $HYPERSTACK_API_KEYStep 7: Verify Authentication

To ensure everything is connected correctly, we can list the configured authentication providers using:

# List all configured authentication providers

opencode auth list

You can see that it shows Hyperstack as an auth provider which means the authentication is set up correctly.

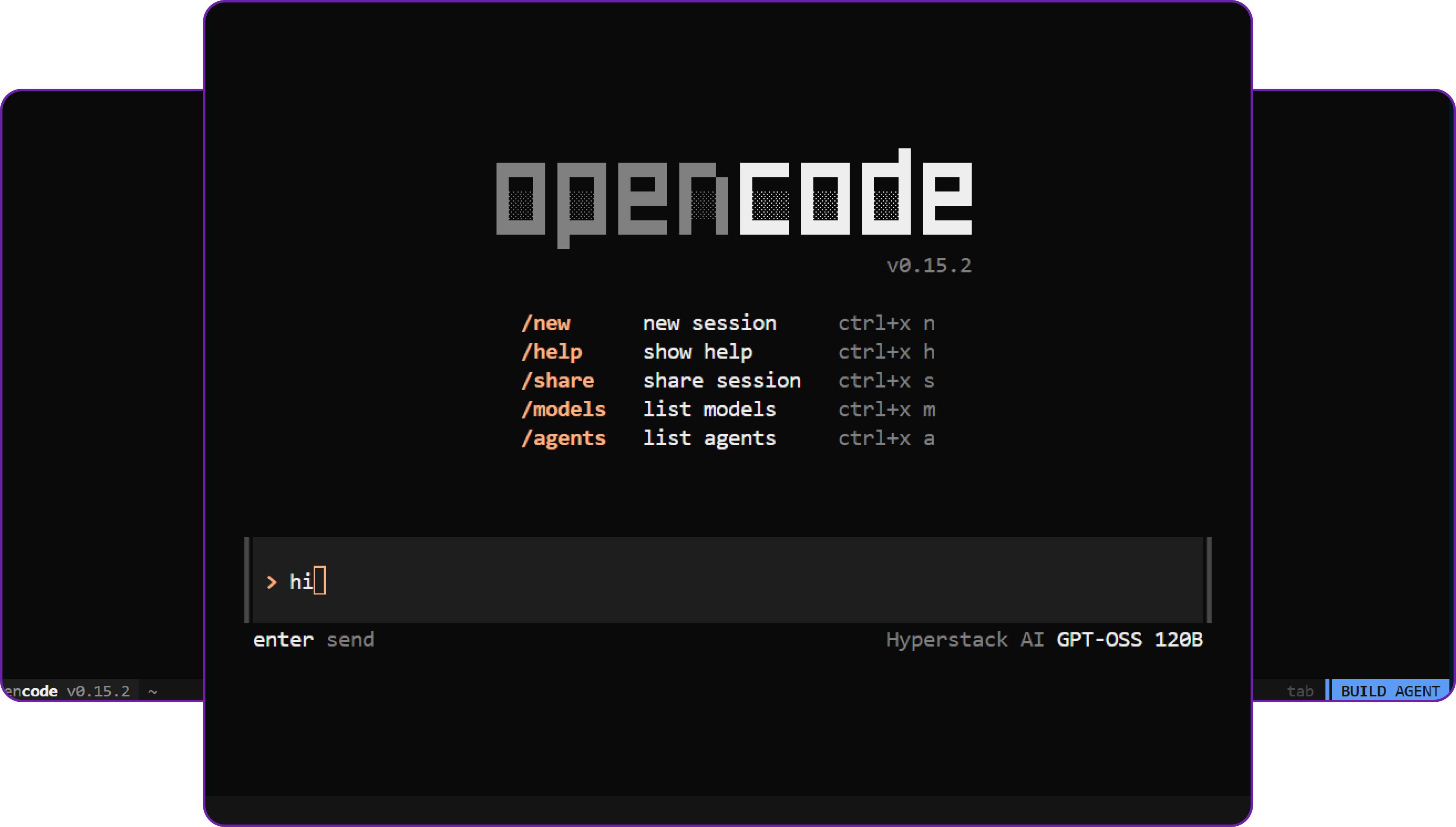

Step 8: Start OpenCode Studio

Now that configuration is complete, we can start OpenCode using the command:

# Launch the OpenCode Studio environment

opencode

This will launch an interactive terminal interface.

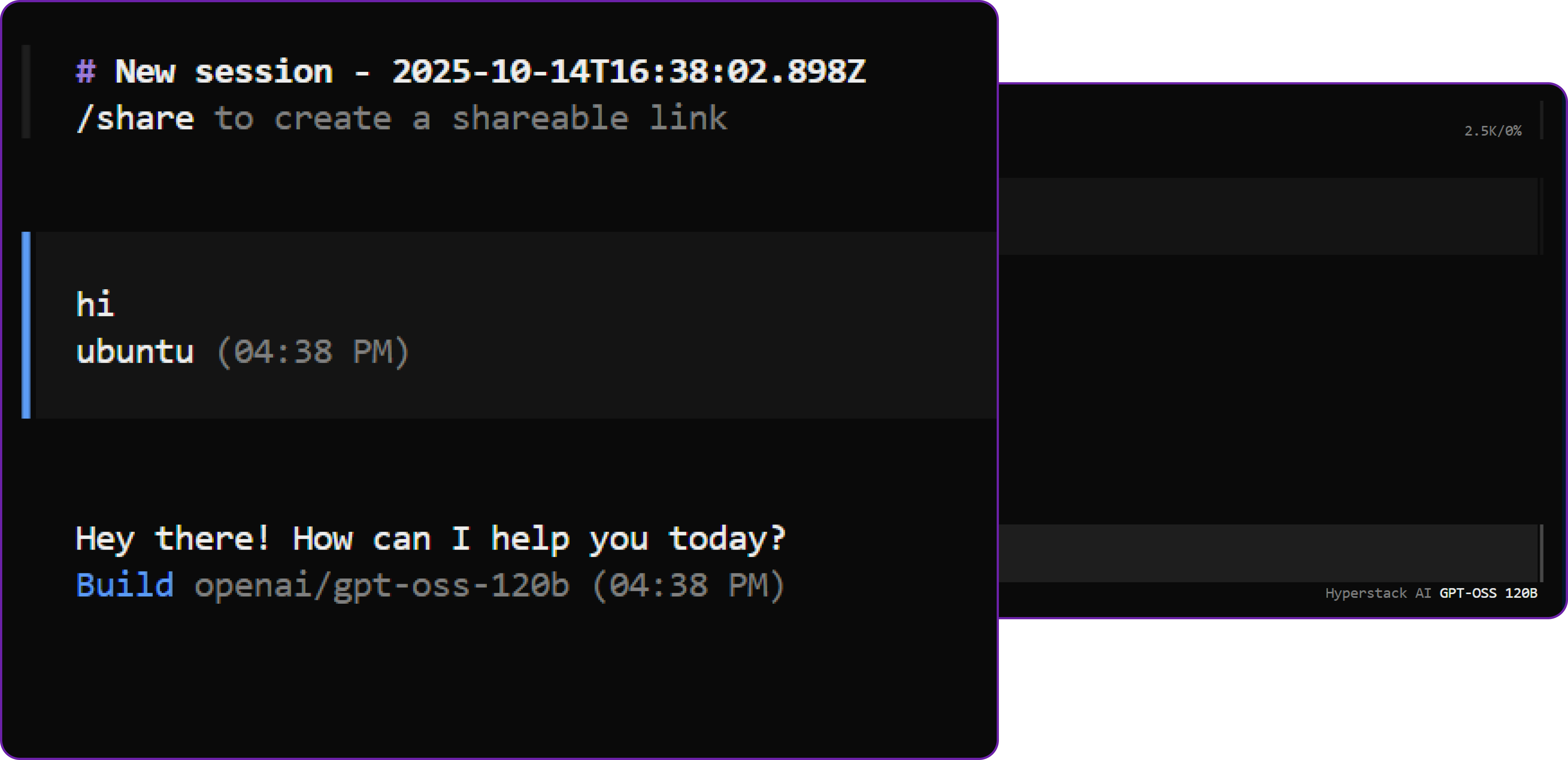

Try sending a simple message to confirm the connection:

hi

You should receive a valid response from the Hyperstack model that confirms your setup is working properly.

Great! You can see that it gives a response from the Hyperstack AI Studio model, which means everything is working fine.

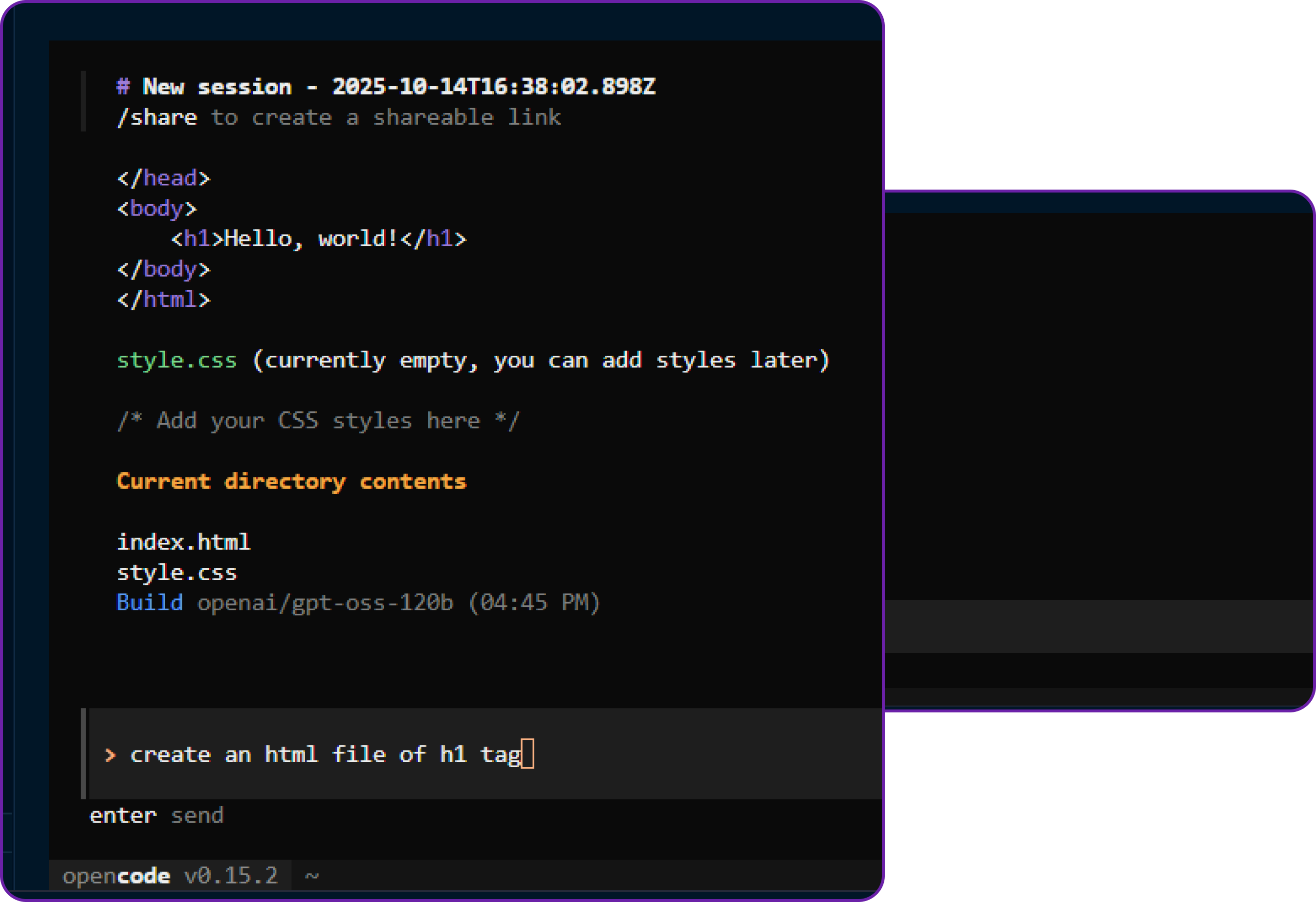

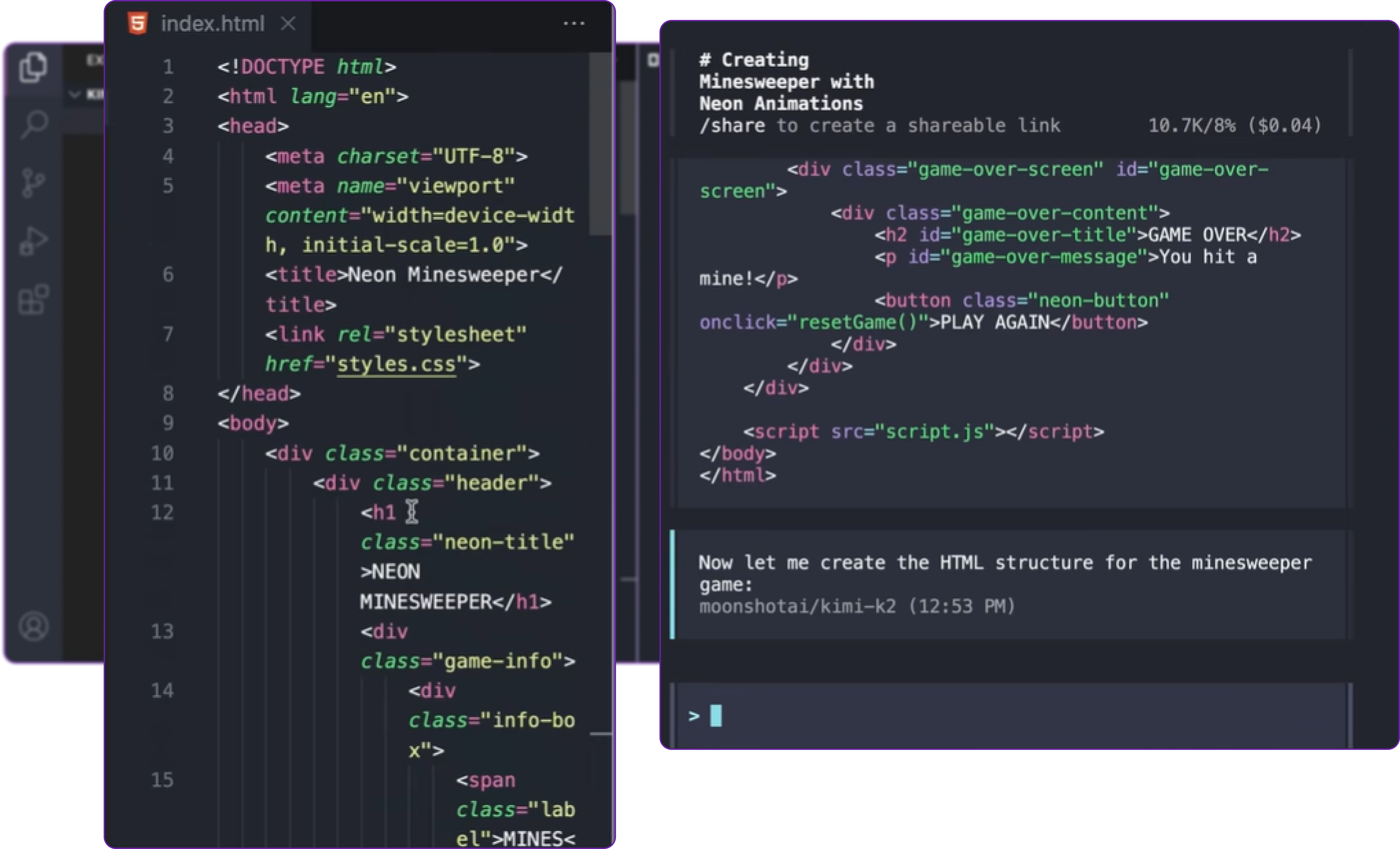

Step 9: Test Code Generation

To further verify functionality, let’s try a practical example.

We are going to ask the model to generate a simple HTML page.

create an HTML file of h1 tag

The model will generate the code for a very simple HTML page:

<!DOCTYPE html>

<html>

<head>

</head>

<body>

<h1>Hello, world!</h1>

</body>

</html>

This confirms that the Hyperstack model is successfully responding to OpenCode and generating output as expected.

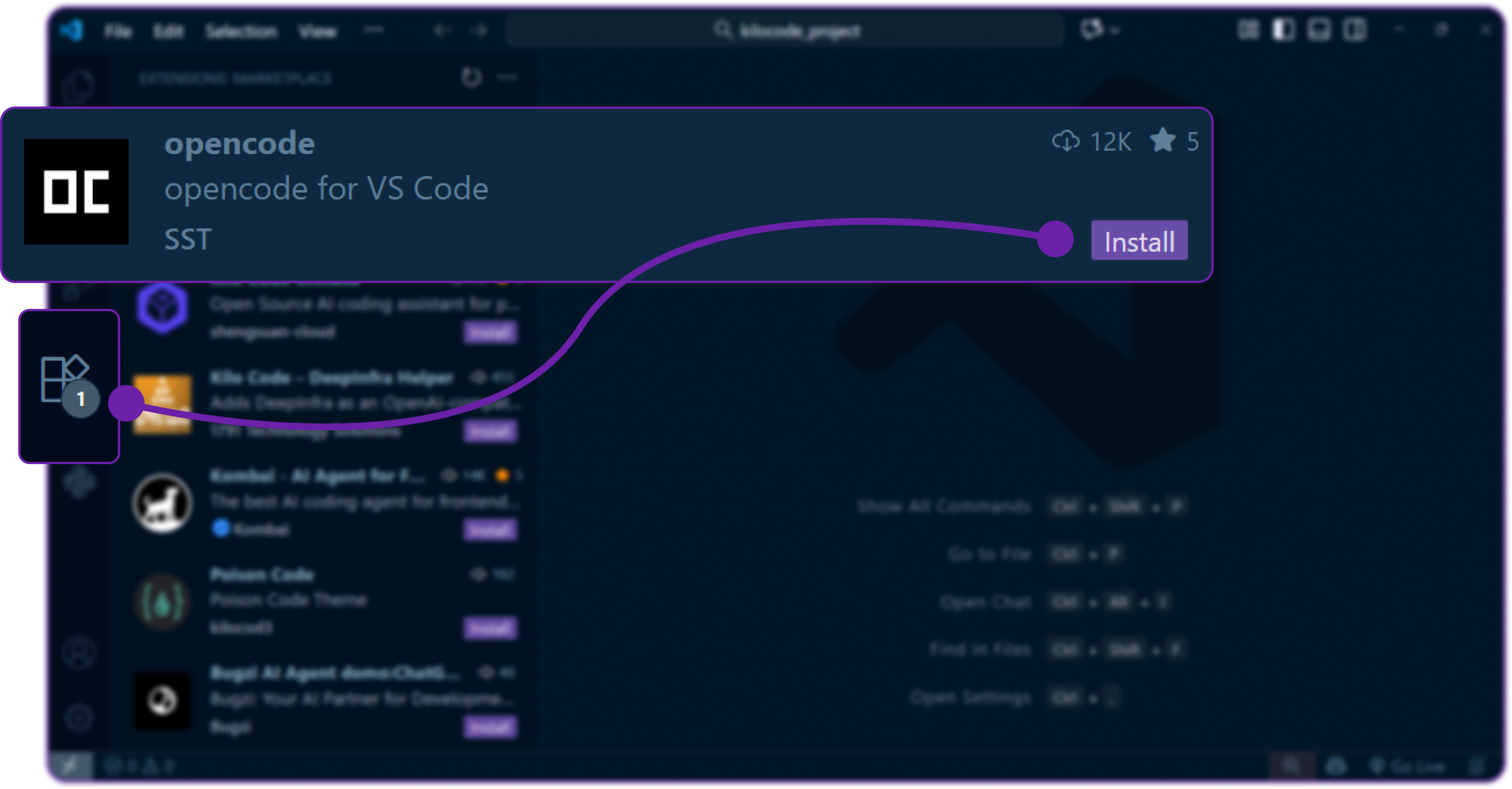

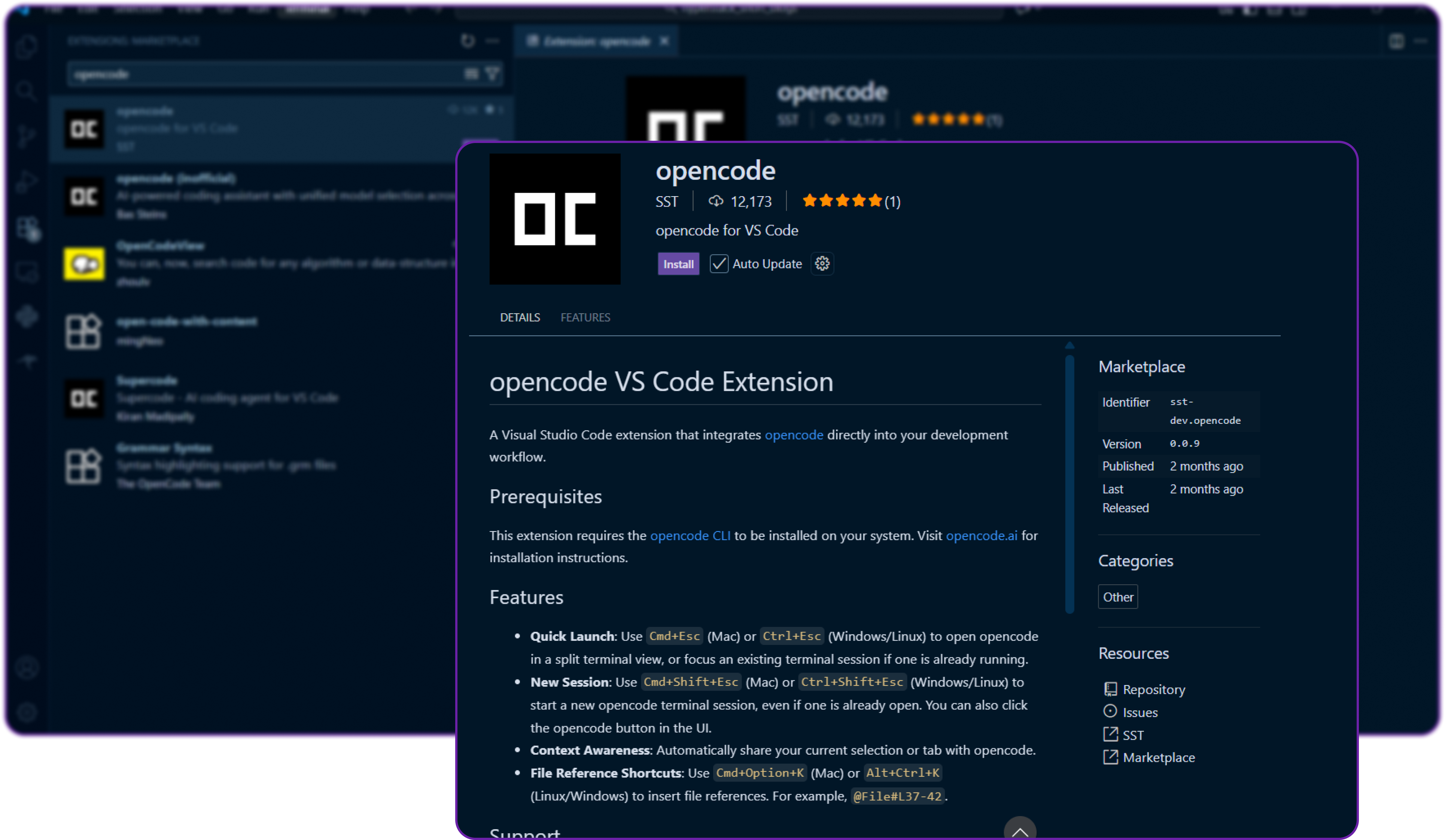

Step 10: Use the VS Code Extension

To run these agent steps inside an editor, first install the OpenCode extension for VS Code.

Click the Install button to install the OpenCode extension.

Once the extension is installed, you can open its agent.

Here we create a simple index.html file by passing a prompt to the OpenCode agent. The agent first drafts a plan, then executes it and writes the generated code into that file.

Monitoring the Usage

Hyperstack provides built-in monitoring tools to track the usage and cost of your API calls. Go to the Usage Dashboard in Hyperstack to see your consumption metrics.

The dashboard shows API usage and tokens consumed, broken down by model and by feature, such as chat and tool calling.
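If you also call the API from your own scripts, you can keep a rough client-side tally to compare against the dashboard. The sketch below assumes that responses carry the `usage` object found in OpenAI-style chat completion responses (`prompt_tokens`, `completion_tokens`, `total_tokens`); whether Hyperstack populates every field is an assumption here, so missing values are counted as zero. The helper name `total_usage` and the sample responses are hypothetical.

```python
from collections import Counter

USAGE_FIELDS = ("prompt_tokens", "completion_tokens", "total_tokens")


def total_usage(responses):
    """Sum the token counts reported in a list of OpenAI-style responses."""
    totals = Counter()
    for response in responses:
        usage = response.get("usage") or {}
        for field in USAGE_FIELDS:
            # Responses without a usage block contribute nothing.
            totals[field] += int(usage.get(field, 0))
    return dict(totals)


# Made-up responses for illustration only.
responses = [
    {"usage": {"prompt_tokens": 12, "completion_tokens": 30, "total_tokens": 42}},
    {"usage": {"prompt_tokens": 5, "completion_tokens": 7, "total_tokens": 12}},
    {},
]
print(total_usage(responses))
```

The Hyperstack dashboard remains the source of truth for billing; a local tally like this is only useful for spotting unexpectedly expensive prompts early.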

Next Steps

Now that integration is complete, you can start using Hyperstack models within OpenCode for advanced workflows.

Some ideas for further exploration:

- Fine-tune models in Hyperstack for specific coding domains or business tasks.

- Benchmark and evaluate model performance using Hyperstack’s evaluation framework.

- Explore multiple model sizes for cost-performance optimization.

- Build and test multi-agent systems within OpenCode, powered by Hyperstack inference.

You can monitor usage in both Hyperstack Console and OpenCode dashboards to track tokens, latency, and performance metrics.

Conclusion

By integrating Hyperstack AI Studio with OpenCode, you combine the strengths of both platforms:

- Hyperstack provides the scalable, secure, and customizable backend for model inference and fine-tuning.

- OpenCode delivers the user-friendly, developer-oriented frontend for prompt execution and agent development.

Together, they create a complete end-to-end AI workflow, from fine-tuning to inference, enabling developers to build powerful, intelligent systems efficiently and securely.

FAQ

1. Which Hyperstack models are supported?

OpenCode supports all models from Hyperstack AI Studio that follow the OpenAI-compatible API schema, including both open-source and fine-tuned models.

2. Can I use fine-tuned models?

Yes, you can fine-tune models on your own datasets and integrate them into OpenCode by copying the model’s endpoint and API key.

3. How can I test my setup?

Run opencode in your terminal and send a test message like "hi." A valid response confirms your setup is working.

4. Is this integration secure?

Yes, communication is encrypted via HTTPS, and it's recommended to store API keys in environment variables for added security.

5. Can I switch between multiple providers?

Yes, OpenCode supports multiple providers like OpenAI, Anthropic, and Hyperstack, and you can easily switch between them.

6. Where can I monitor usage and performance?

You can monitor usage via the Hyperstack Console and the OpenCode Dashboard at opencode.ai/dashboard.
