
What is n8n?

n8n is an open-source, fair-code workflow automation tool that helps you connect applications, services and APIs through a visual, node-based interface. It combines simple drag-and-drop automation with the flexibility of JavaScript for advanced customisation.

What is the Purpose of n8n?

Using a visual, node-based interface, n8n enables you to build workflows that move data between systems, schedule tasks and respond to real-time events. Its support for AI-powered features takes it beyond simple automation, making it easier to design intelligent workflows that combine no-code convenience with advanced flexibility.

What is n8n Best For?

n8n is ideal for the following tasks:

- Streamlining repetitive tasks across multiple apps and platforms.

- Designing advanced data pipelines to collect, process, and route information seamlessly.

- Building tailored integrations that connect applications beyond standard connectors.

- Powering intelligent workflows by integrating large language models (LLMs) and AI-driven automation.

Why Should You Use n8n?

n8n is an ideal choice for seamless integration and workflow management:

No Complex Code Required

Instead of wasting time with complex code, you can map out automations in a simple drag-and-drop editor. Add nodes, set conditions and watch the flow run step by step.

Vast Integrations

From Google Sheets and Slack to GitHub, AWS, Supabase and Trello, n8n connects with many popular tools. This makes it possible to link together the apps your team already uses and streamline everything from messaging to data pipelines.

Code Where It Counts

For times when drag-and-drop is not enough, you can inject JavaScript directly into your workflows. This gives you the freedom to clean data, apply business rules or call APIs in a way that matches your unique requirements.

Trigger on Any Event

Workflows can fire automatically in response to real-time events: an incoming webhook, a scheduled cron job, an update in a connected app or a manual action. That means your automations always stay in sync with what’s happening.
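As a rough illustration of an event trigger, the shell sketch below posts a JSON payload to a workflow that starts with n8n's Webhook node. It assumes n8n listens on port 5678 and that the Webhook node's path is set to the hypothetical value new-order. n8n serves webhooks of active workflows under /webhook/<path> and the editor's test webhooks under /webhook-test/<path>.

```bash
#!/usr/bin/env bash
# Sketch: trigger an n8n workflow that starts with a Webhook node.
# Assumptions (hypothetical): n8n listens on port 5678 and the Webhook
# node's path is "new-order"; adjust both to match your own workflow.

# Build the webhook URL. Active workflows use /webhook/<path>;
# pass "test" as the third argument for the editor's /webhook-test/<path>.
n8n_webhook_url() {
  local host="$1" path="$2" prefix="webhook"
  if [ "${3:-}" = "test" ]; then
    prefix="webhook-test"
  fi
  echo "http://${host}:5678/${prefix}/${path}"
}

# POST a JSON body to the webhook URL; n8n starts the workflow on receipt.
trigger_n8n_webhook() {
  curl -sS -X POST "$1" \
    -H "Content-Type: application/json" \
    -d "$2"
}
```

For example, trigger_n8n_webhook "$(n8n_webhook_url localhost new-order)" '{"orderId": 42}' would start the workflow with that payload, provided the workflow is active.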

Flexible and Extendable

As your needs grow, n8n grows with you. You can build your own custom nodes, reuse parts of existing workflows or install community plugins to expand functionality without starting from scratch.

Host It Your Way

Run n8n on Docker or directly on your own server. Hosting it yourself means you decide how data is stored, how secure the system is and how it scales.

How to Integrate n8n with a Self-hosted LLM on Hyperstack

To run n8n with a local Llama model on Hyperstack, follow these steps:

Step 1: Accessing Hyperstack

- Go to Hyperstack and log in to your account. If you're new to Hyperstack, you'll need to create an account and set up your billing information. Check our documentation to get started with Hyperstack.

- Once logged in, you'll be greeted by the Hyperstack dashboard, which provides an overview of your resources and deployments.

Step 2: Access the Model

To use the Llama 3.3 70B model, you need to:

- Request access to the gated model here: https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct

- Create a Hugging Face access token so that you can download the gated model (see the Hugging Face documentation on user access tokens).

- Replace <your token here> in the Step 4 command with your own Hugging Face token.
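Before starting any containers, you may want to confirm that your token can actually read the gated repository. The sketch below assumes the token is exported as HF_TOKEN and requests the repository's config.json through Hugging Face's standard resolve/main download URL. A 200 status suggests access has been granted; 401 or 403 usually means the token is wrong or the access request is still pending.

```bash
#!/usr/bin/env bash
# Sketch: check whether a Hugging Face token can read the gated Llama 3.3 repo.
# Assumes the token is exported first, e.g. export HF_TOKEN=hf_...

MODEL_ID="meta-llama/Llama-3.3-70B-Instruct"

# Standard Hugging Face download URL for a single file on the main branch.
hf_file_url() {
  echo "https://huggingface.co/$1/resolve/main/$2"
}

# Print only the HTTP status code of fetching config.json with the token.
check_hf_access() {
  curl -sS -L -o /dev/null -w '%{http_code}' \
    -H "Authorization: Bearer $1" \
    "$(hf_file_url "$MODEL_ID" config.json)"
}
```

Running check_hf_access "$HF_TOKEN" should print 200 once Meta has approved your request.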

Step 3: Create Your VM

Deploy a VM equipped with 2×A100-80G-PCIe GPUs, running Ubuntu 24.04 with the R570 NVIDIA driver, CUDA 12.8 and Docker pre-installed.

Add Firewall Rules

- Open port "5678" to allow incoming traffic on this port.

Select a keypair

- Select one of the keypairs in your account. Don't have a keypair yet? See our Getting Started tutorial for creating one.

Network Configuration

- Ensure you assign a public IP to your virtual machine.

- This allows you to access your VM from the internet, which is crucial for remote management and API access.

Enable SSH Access

- Make sure to enable an SSH connection.

- You'll need this to securely connect and manage your VM.

Connect to your VM with SSH

- Once your VM is created, connect to it using SSH.

- Open a terminal on your local machine.

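- Use the command ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address] (e.g. ssh -i /users/username/downloads/keypair_hyperstack ubuntu@203.0.113.10, where 203.0.113.10 stands in for your VM's public IP).

- Replace [path_to_ssh_key], [os_username] and [vm_ip_address] with the path to your private key and the username and IP address shown in the Hyperstack dashboard.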
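If you connect often, a small wrapper saves some typing. This is a minimal sketch; connect_vm and ssh_target are hypothetical helper names, not Hyperstack tooling. The wrapper tightens the key's permissions first, because OpenSSH refuses to use a private key that other users can read.

```bash
#!/usr/bin/env bash
# Sketch: SSH into the Hyperstack VM. connect_vm and ssh_target are
# hypothetical helper names, not part of any Hyperstack tooling.

# Build the user@host target that ssh expects.
ssh_target() {
  echo "$1@$2"
}

# Restrict the key's permissions (OpenSSH rejects private keys readable
# by other users), then open an interactive session on the VM.
connect_vm() {
  local key="$1" user="$2" ip="$3"
  chmod 600 "$key"
  ssh -i "$key" "$(ssh_target "$user" "$ip")"
}
```

For example, connect_vm /users/username/downloads/keypair_hyperstack ubuntu 203.0.113.10, with 203.0.113.10 replaced by your VM's public IP. Once connected, nvidia-smi -L should list both A100 GPUs.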

Step 4: Run vLLM with Llama 3.3 70B

Use the following command to run vLLM with Llama 3.3 70B:

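```bash
# Serve Llama 3.3 70B across both GPUs (tensor parallelism) through an
# OpenAI-compatible API on port 8000; the model cache lives on /ephemeral.
docker run -d --gpus all \
  -v /ephemeral/.cache/huggingface:/root/.cache/huggingface \
  -v /home/ubuntu/vllm:/vllm_repo \
  -p 8000:8000 \
  --ipc=host \
  --restart always \
  --env HF_TOKEN=<your token here> \
  vllm/vllm-openai:latest \
  --tensor-parallel-size 2 \
  --model "meta-llama/Llama-3.3-70B-Instruct" \
  --max-model-len 23000
```

Remember to replace <your token here> with your own Hugging Face token from Step 2. The first start can take a while, because vLLM has to download the model weights before it begins serving.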
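Once the model has loaded, you can check the OpenAI-compatible endpoint from the VM itself before involving n8n. The sketch below sends one request to vLLM's /v1/chat/completions route on port 8000. The prompt and the max_tokens value of 64 are arbitrary choices for illustration, and no API key is sent because the server was started without --api-key.

```bash
#!/usr/bin/env bash
# Sketch: send a single chat request to the vLLM OpenAI-compatible server.
# Assumes vLLM is published on localhost:8000 as in the command above.

VLLM_URL="http://localhost:8000/v1"
MODEL="meta-llama/Llama-3.3-70B-Instruct"

# Build a minimal chat-completions JSON body for one user message.
# The prompt is inserted verbatim, so keep it free of double quotes.
chat_payload() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}], "max_tokens": 64}' \
    "$MODEL" "$1"
}

# POST the payload and print the raw JSON response.
ask_vllm() {
  curl -sS "${VLLM_URL}/chat/completions" \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1")"
}
```

Running ask_vllm "Say hello in one sentence." should return a JSON object whose choices array contains the model's reply.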

Step 5: Run n8n on the Same VM

Use the following command to run n8n on the same VM:

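```bash
# Create the data directory as the ubuntu user first; if Docker creates it,
# it is owned by root and the container's "node" user may be unable to write to it.
mkdir -p /home/ubuntu/n8n_data

# N8N_SECURE_COOKIE=false allows logging in over plain HTTP (no TLS);
# put n8n behind HTTPS for anything beyond testing.
docker run -d --name n8n \
  --restart always \
  -p 5678:5678 \
  -v /home/ubuntu/n8n_data:/home/node/.n8n \
  -e N8N_SECURE_COOKIE=false \
  docker.n8n.io/n8nio/n8n
```

Step 6: Sign Up for n8n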

Open http://[vm_ip_address]:5678 in your browser and sign up for n8n using your email and details. (Screenshot attached)

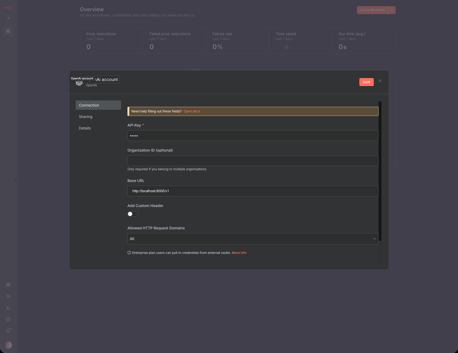
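If the sign-up page does not load, it can help to confirm that the n8n container is running and answering on port 5678. A minimal sketch, assuming the container name n8n from Step 5; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check that the n8n container from Step 5 is running and serving HTTP.

# URL of the n8n editor on a given host (defaults to the VM itself).
n8n_editor_url() {
  echo "http://${1:-localhost}:5678/"
}

# Print the container state reported by Docker, e.g. "running".
n8n_container_state() {
  docker inspect -f '{{.State.Status}}' n8n
}

# Print the HTTP status code returned by the n8n editor.
n8n_http_code() {
  curl -sS -o /dev/null -w '%{http_code}' "$(n8n_editor_url "$1")"
}
```

On the VM, n8n_container_state should print running. From your local machine, a 200 from n8n_http_code [vm_ip_address] suggests port 5678 is open in the firewall. If the container is not running, docker logs n8n usually shows why, for example a permission error on the data directory.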

Step 7: Add OpenAI Credential in n8n

In n8n, go to Credentials (on the left sidebar) → Add New Credential → search for “OpenAI” →

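- Set API Key to "none". vLLM was started without an API key, so any placeholder value is accepted.

- Set Base URL to http://172.17.0.1:8000/v1. Because n8n runs in its own container, localhost inside n8n refers to the n8n container rather than the VM; 172.17.0.1 is typically the Docker bridge gateway, through which the container can reach vLLM on port 8000.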

Then click Save.

(If it fails, wait a few minutes for vLLM to finish downloading and loading the Llama 3.3 70B model.)
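Rather than guessing how long the download takes, you can poll vLLM until it answers. This sketch uses a hypothetical helper, wait_for_vllm, that requests the OpenAI-compatible /v1/models endpoint at a fixed interval and gives up after a set number of attempts; the defaults (60 attempts, 10 seconds apart) are arbitrary.

```bash
#!/usr/bin/env bash
# Sketch: poll vLLM until its OpenAI-compatible API answers.
# wait_for_vllm is a hypothetical helper; the default attempt count and
# interval (60 x 10 s) are arbitrary and can be raised for slow downloads.

# Returns 0 as soon as curl gets a non-error HTTP response, 1 otherwise.
wait_for_vllm() {
  local url="${1:-http://localhost:8000/v1/models}"
  local attempts="${2:-60}" interval="${3:-10}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -sf -o /dev/null "$url"; then
      echo "vLLM is ready after ${i} attempt(s)"
      return 0
    fi
    sleep "$interval"
    i=$((i + 1))
  done
  echo "vLLM did not respond at ${url}" >&2
  return 1
}
```

Run wait_for_vllm on the VM before saving the credential. To follow the download itself, find the vLLM container's ID with docker ps and run docker logs -f with that ID.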

Step 8: Create Workflow Using Local Llama 3.3 70B

Create a new Workflow → click the + button in the centre → search for “OpenAI Chat Model” →

- Under Credentials, select “OpenAI account”.

- Under Model, choose “meta-llama/Llama-3.3-70B-Instruct”.

Then return to your workflow.
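The Model dropdown is typically filled from the model list that the server reports. If the expected name does not appear, you can list the same IDs from the VM. This is a rough text filter built on grep and sed, assuming vLLM's /v1/models response format; jq would be more robust if it is installed.

```bash
#!/usr/bin/env bash
# Sketch: list the model IDs vLLM reports (the name to pick in n8n).
# A rough text filter; jq would be more robust if installed.

# Read an OpenAI-style /v1/models JSON response on stdin and print model IDs.
# vLLM may also nest permission objects whose IDs start with "modelperm-";
# those are filtered out.
model_ids() {
  grep -o '"id": *"[^"]*"' \
    | sed 's/"id": *"\([^"]*\)"/\1/' \
    | grep -v '^modelperm-'
}

# Fetch the model list from the vLLM server on the VM.
list_vllm_models() {
  curl -sS "${1:-http://localhost:8000/v1}/models" | model_ids
}
```

On the VM, list_vllm_models should print meta-llama/Llama-3.3-70B-Instruct once the server is up.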

Step 9: Test the Workflow

Click “Open Chat” below to interact with the workflow.

(Note: The first run may take a few seconds to load and initialise.)

Now, you can create more nodes and use the same model to create complex and powerful workflows!

New to Hyperstack? Get Started Today!

Sign up on Hyperstack today to access powerful GPUs and developer-friendly tools on our high-performance infrastructure.

FAQs

What is n8n?

n8n is an open-source workflow automation tool that lets you connect apps, services, and APIs to automate tasks. It uses a visual, node-based interface, allowing both no-code and low-code automation for businesses and developers.

Is n8n free to use?

Yes, the core version of n8n is free and open-source under fair-code licensing. You can self-host it on your server at no cost, with full control over your workflows and data.

Can I use n8n without coding?

Absolutely. n8n’s drag-and-drop workflow builder allows you to create automations without writing code. For advanced users, you can also insert JavaScript to add custom logic to workflows.

What apps and services can I integrate with n8n?

n8n supports over 300 integrations, including Slack, Google Sheets, Notion, GitHub, MySQL, AWS, Trello and many more. This makes it easy to automate tasks across messaging, databases, cloud platforms, and developer tools.

Can n8n be used with AI and LLM workflows?

Yes. n8n can integrate AI models and large language model (LLM) chains, enabling AI-powered automations, intelligent decision-making and smart data processing directly within your workflows.
