
In my previous article, I wrote about LLaMA 3, the latest open AI model, being on par with the best proprietary models available today. While Meta open-sourced LLaMA 3 to democratise innovation for the broader research community and developers, Microsoft has introduced Phi-3, a family of small language models (SLMs) that offers impressive capabilities while being more efficient and accessible than large language models (LLMs).

The goal behind Phi-3 is to extend the reach of AI technologies to resource-constrained environments, including on-device and offline inference scenarios. And guess what? Companies are already using Phi-3 to build solutions for such environments, particularly in areas where internet connectivity might be limited or unavailable, such as agriculture. Powerful small models like Phi-3, combined with Microsoft's copilot templates, can be made available to farmers at the point of need, with the added benefit of running at reduced cost and making AI technologies accessible to more people.

ITC, a leading Indian business, is using the Phi-3 SLM as part of its continued collaboration with Microsoft on the Krishi Mitra copilot. This farmer-facing app reaches over a million farmers. The aim is to improve efficiency while maintaining the accuracy of a large language model by using fine-tuned versions of the efficient Phi-3.

About Microsoft Phi-3 Model

Microsoft Phi-3-mini is a 3.8B-parameter small language model and the first released member of the Phi-3 family, available on Microsoft Azure AI Studio, Hugging Face, and Ollama. It comes in two context-length variants, 4K and 128K tokens, making it the first model in its class to support a context window of up to 128K tokens with minimal impact on quality. One of the standout features of Phi-3-mini is that it is instruction-tuned, meaning it is trained to follow different types of instructions that reflect how people normally communicate. This makes the model ready to use out of the box, for a better developer and user experience. Phi-3-mini has been optimised for ONNX Runtime, with support for Windows DirectML and cross-platform support across GPUs, CPUs, and even mobile hardware.
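To make "instruction-tuned" a little more concrete, here is a minimal sketch of how a conversation might be rendered into a single prompt string for Phi-3-mini. The `<|user|>`, `<|assistant|>` and `<|end|>` markers follow the chat format shown on the model's Hugging Face card as I understand it; treat them as an assumption and check the model card before relying on them. The helper name `build_phi3_prompt` is hypothetical.

```python
def build_phi3_prompt(turns):
    """Render (role, text) turns into one prompt string.

    Assumed format (verify against the model card): each turn is
    "<|role|>\n{text}<|end|>\n", and the prompt ends with "<|assistant|>\n"
    so that the model writes the next reply.
    """
    allowed_roles = {"user", "assistant"}
    parts = []
    for role, text in turns:
        if role not in allowed_roles:
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<|{role}|>\n{text.strip()}<|end|>\n")
    # Leave the assistant turn open for the model to complete.
    parts.append("<|assistant|>\n")
    return "".join(parts)


prompt = build_phi3_prompt([("user", "Summarise this paragraph in one sentence.")])
print(prompt)
```

In practice, the tokenizer shipped with the model usually carries its own chat template, so building the string by hand like this is mainly useful for understanding what the model actually sees.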

Microsoft’s Smallest AI Model

The decision to develop Phi-3 as a family of small language models can be traced to Microsoft's commitment to addressing the growing need for different-sized models across the quality-cost curve for various tasks. SLMs like Phi-3 offer several advantages that make them “The One” for everyday users and small organisations.

- Their smaller size allows for easier and more affordable fine-tuning or customisation, enabling businesses and developers to tailor the models to their needs.

- The lower computational requirements of small language models result in reduced costs and improved latency, making them approachable for cost-constrained use cases or scenarios where fast response times are critical.

- The longer context window supported by Phi-3-mini, up to 128K tokens, enables reasoning over large text content, such as documents, web pages, and code. This creates new possibilities for analytical tasks and data processing.
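As a rough illustration of how the two context-length variants might be chosen between, the sketch below estimates a document's token count and picks the smallest variant that fits. The four-characters-per-token rule of thumb, the exact window sizes (4 × 1024 and 128 × 1024 tokens) and the `pick_variant` helper are all assumptions made for this example; a real application would count tokens with the model's own tokenizer.

```python
# Context windows in tokens. "4K" and "128K" are assumed to mean
# 4 * 1024 and 128 * 1024 tokens respectively.
CONTEXT_WINDOWS = {"4k": 4 * 1024, "128k": 128 * 1024}

# Rough rule of thumb for English text; an assumption, not a tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Approximate token count using ceiling division on the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def pick_variant(text, reserved_for_reply=512):
    """Return the smallest variant whose window fits the text plus a reply budget."""
    needed = estimate_tokens(text) + reserved_for_reply
    for name, limit in sorted(CONTEXT_WINDOWS.items(), key=lambda item: item[1]):
        if needed <= limit:
            return name
    return None  # too long even for the largest window; the text must be split


short_note = "a" * 1_000
long_report = "a" * 100_000
print(pick_variant(short_note), pick_variant(long_report))
```

A return value of `None` signals that the document would need to be chunked or summarised before it could be passed to the model.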

“Some customers may only need small models, some will need big models and many are going to want to combine both in a variety of ways”

-Luis Vargas, Vice President of AI at Microsoft

Technical Specifications of Microsoft Phi-3 Model

Here are the technical specifications of Phi-3-small, the 7B model in the Phi-3 family, as described in Microsoft's technical report:

- Tokenization: Tiktoken tokenizer for better multilingual tokenization, vocabulary size of 100,352

- Architecture: Standard decoder, 32 layers, hidden size of 4096

- Attention: Grouped-query attention (4 queries share 1 key), alternating dense and novel block sparse attention layers

- Default context length: 8K tokens

- Training data: Additional 10% multilingual data

- Model size: 7 billion parameters
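To see why grouped-query attention matters for memory, here is a back-of-the-envelope estimate of the key-value cache size using the figures listed above (32 layers, hidden size 4096, 4 queries per key, 8K context). It assumes a 16-bit cache, that 8K means 8192 tokens, and that the key and value width per layer equals the hidden size divided by the sharing factor. It also ignores any further savings from the block sparse layers, so treat it as a rough upper bound and not a measured number.

```python
def kv_cache_bytes(tokens, layers=32, hidden_size=4096,
                   queries_per_key=4, bytes_per_value=2):
    """Estimate KV-cache size for a decoder with grouped-query attention.

    Assumes head_dim * num_heads == hidden_size, so sharing one key/value
    head among `queries_per_key` query heads shrinks the cached width by
    that factor. bytes_per_value=2 corresponds to a 16-bit cache.
    """
    kv_width = hidden_size // queries_per_key
    per_token = 2 * layers * kv_width * bytes_per_value  # 2 = keys + values
    return tokens * per_token


GIB = 1024 ** 3
context = 8 * 1024  # default context length, assumed to be 8192 tokens

grouped = kv_cache_bytes(context)
full = kv_cache_bytes(context, queries_per_key=1)  # plain multi-head attention
print(f"grouped-query: {grouped / GIB:.2f} GiB, full multi-head: {full / GIB:.2f} GiB")
# prints: grouped-query: 1.00 GiB, full multi-head: 4.00 GiB
```

Under these assumptions, sharing each key among four queries cuts the cache for a full 8K context from about 4 GiB to about 1 GiB.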

Capabilities of Microsoft Phi-3 Model

The Microsoft Phi-3 model comes with an impressive range of capabilities, which makes it an attractive option for various applications. Some of its key capabilities include:

- Natural Language Processing (NLP): Phi-3 models can be employed for tasks such as text classification, sentiment analysis, named entity recognition, and text summarisation.

- Conversational AI: With their strong language understanding capabilities, Phi-3 models can be leveraged to build chatbots, virtual assistants, and other conversational AI applications.

- Code Generation: Phi-3 models can be used for code completion, code generation and analysis tasks, making them valuable tools for software developers and programmers.

- Question answering: The reasoning and logic capabilities of Phi-3 models make them well-suited for question-answering tasks, enabling the development of intelligent information retrieval systems.

- Analytical Tasks: Phi-3 models, particularly Phi-3-mini, demonstrate strong reasoning and logic capabilities, making them excellent candidates for analytical tasks involving large text content, such as documents, web pages, and code.

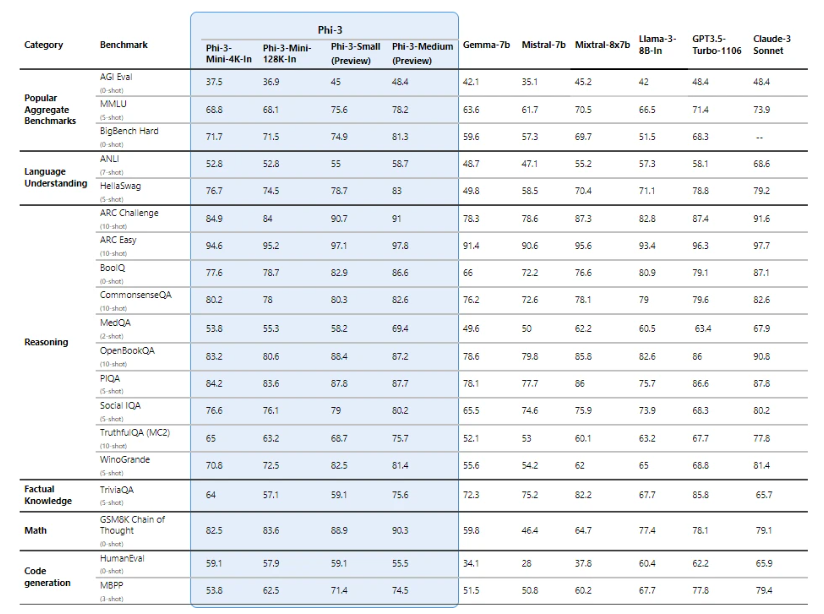

Performance Benchmarks of Microsoft Phi-3 Model

Phi-3 models significantly outperform language models of the same and larger sizes on key benchmarks. To give you an idea, Phi-3-mini does better than models twice its size, while Phi-3-small (7B) and Phi-3-medium (14B) outperform much larger models, including GPT-3.5 Turbo. While Phi-3 models excel in various areas, it is worth mentioning that they may not perform as well on factual knowledge benchmarks such as TriviaQA, because a smaller model has less capacity to retain facts.


The performance benchmarks above compare the Phi-3 model family across various tasks with other prominent models such as Gemma, Mistral, Mixtral, LLaMA 3, GPT-3.5 Turbo and Claude 3 Sonnet.

- The Phi-3 models (Phi-3-mini-4k-instruct, Phi-3-mini-128k-instruct, Phi-3-small, and Phi-3-medium) demonstrate impressive performance on popular benchmarks like ANLI, HellaSwag, ARC Challenge, and ARC Easy, often outperforming larger models like Gemma 7B, Mistral 7B, and Mixtral 8x7B.

- For reasoning tasks like CommonsenseQA, OpenBookQA, and PiQA, the Phi-3 models achieve competitive scores, showcasing their strong reasoning and logic capabilities.

- On benchmarks related to factual knowledge, such as TriviaQA, the Phi-3 models score lower than larger models, likely due to their smaller size and limited capacity to retain factual information.

- In the math category, specifically the GSM8K chain-of-thought benchmark, the Phi-3 models demonstrate impressive mathematical reasoning abilities, surpassing larger models like Gemma 7B and Mistral 7B.

- For code generation tasks like HumanEval and MBPP, the Phi-3 models exhibit strong performance, highlighting their potential for applications in software development and programming domains.

It is important to note that all reported benchmark numbers are produced with the same pipeline to ensure comparability. However, these numbers may differ from other published numbers due to slight differences in evaluation methodology.

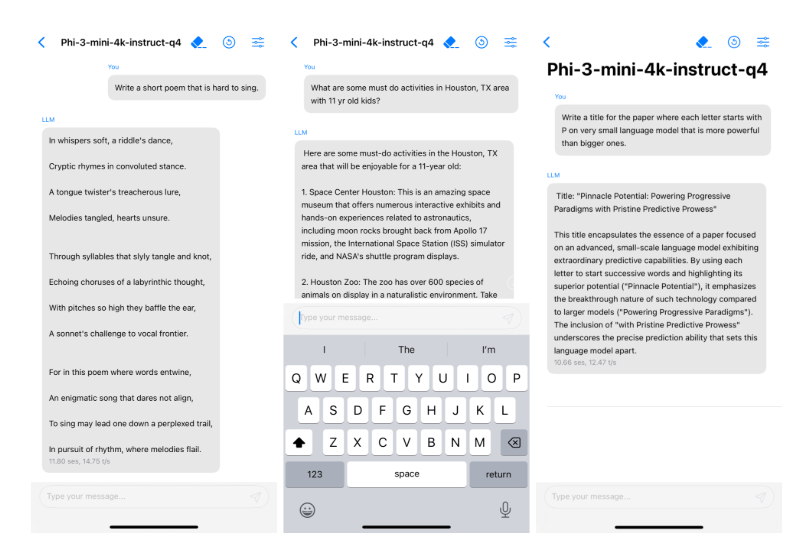

Running Microsoft Phi-3 Model on Mobile

Microsoft Phi-3-mini can be quantised to 4 bits so that it occupies only about 1.8GB of memory. The model was tested by deploying Phi-3-mini on an iPhone 14 with an A16 Bionic chip, running natively on-device and fully offline, where it achieved more than 12 tokens per second. See the accompanying image for reference.

Image source: Microsoft's Phi-3 Technical Report
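The memory figure above can be sanity-checked with simple arithmetic: the number of parameters multiplied by the bits per weight, divided by eight. The sketch below assumes 3.8 billion parameters and counts only the weights, ignoring quantisation metadata such as scales, as well as activations and the KV cache, so real footprints will differ somewhat.

```python
def weight_memory_bytes(num_params, bits_per_weight):
    """Bytes needed to store the weights alone at a given precision."""
    return num_params * bits_per_weight / 8


PHI3_MINI_PARAMS = 3.8e9  # parameter count quoted for Phi-3-mini

for bits in (16, 8, 4):
    gigabytes = weight_memory_bytes(PHI3_MINI_PARAMS, bits) / 1e9
    print(f"{bits:>2}-bit weights: ~{gigabytes:.1f} GB")

four_bit_gib = weight_memory_bytes(PHI3_MINI_PARAMS, 4) / 2**30
print(f"4-bit weights in binary units: ~{four_bit_gib:.2f} GiB")
```

At 4 bits this works out to roughly 1.9 GB (about 1.77 GiB), in line with the approximately 1.8GB reported, compared with about 7.6 GB for the same weights at 16 bits.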

Safety-First Design of Microsoft Phi-3 Model

The development of the Phi-3 models followed a "safety-first" approach, adhering to the Microsoft Responsible AI Standard – a comprehensive set of company-wide requirements grounded in six core principles:

- Accountability

- Transparency

- Fairness

- Reliability and safety

- Privacy and security

- Inclusiveness

The Phi-3 models underwent extensive safety measures and evaluations to ensure alignment with these principles throughout their development cycle. This included safety measurement and evaluation, adversarial "red-teaming" exercises, sensitive use case reviews, and adherence to security best practices. This approach aimed to mitigate potential risks and biases while upholding high standards of transparency, fairness, and privacy protection.

Applications of Microsoft Phi-3 Model

Microsoft Phi-3 SLM's versatility and efficiency make it an attractive option for various applications and use cases, including:

- Virtual assistants and chatbots

- Content creation and writing assistance

- Sentiment analysis and social media monitoring

- Code completion and programming assistance

- Customer support and helpdesk automation

Potential Impact of Microsoft Phi-3 Model

The Microsoft Phi-3 model has the potential to transform various industries, including:

- Healthcare: Phi-3's compact size and efficiency make it suitable for medical applications, such as medical chatbots and patient support systems.

- Education: The model's language understanding and generation capabilities make it an attractive option for educational applications, such as language learning and writing assistance.

- Environment: Phi-3's smaller size and reduced carbon footprint contribute to the goal of environmentally sustainable AI.

FAQs

What is the Microsoft Phi-3 model?

Microsoft Phi-3 is a family of small language models (SLMs) from Microsoft. Unlike large language models (LLMs), Phi-3 models are designed to be more efficient and require fewer resources. This makes them ideal for tasks on devices with limited processing power or in situations where internet connectivity is unavailable.

What are the benefits of the Microsoft Phi-3 model?

Microsoft Phi-3 SLM offers several advantages, including:

- Due to their smaller size, Phi-3 models are cheaper to run and generate responses quicker than LLMs.

- Their smaller size makes Phi-3 models easier to fine-tune for specific tasks, allowing businesses to tailor them to their needs.

- Phi-3 models excel at tasks requiring reasoning and logic, like question answering and analysing large amounts of text.

What can Microsoft Phi-3 be used for?

Microsoft Phi-3's capabilities make it suitable for various applications, including:

- Virtual assistants and chatbots: Phi-3 can power chatbots and virtual assistants for customer service or information retrieval.

- Content creation: The model can assist with writing tasks like content creation, email composition, and code generation.

- Data analysis: Phi-3 can analyse large amounts of text data for tasks like sentiment analysis and social media monitoring.

How does Microsoft Phi-3 perform compared to other models?

Microsoft Phi-3 models outperform similar-sized models and even some larger models on various benchmarks. They excel in tasks involving reasoning, logic, and code generation. However, due to their smaller size, they may not perform well on factual knowledge tasks.

Is Microsoft Phi-3 safe to use?

Microsoft developed Phi-3 with safety as a priority. The models undergo rigorous testing and adhere to Microsoft's Responsible AI Standard, focusing on accountability, transparency, fairness, and user privacy.
