TABLE OF CONTENTS

NVIDIA A100 GPUs On-Demand

What is gpt-oss-20b?

gpt-oss-20b is OpenAI’s newly released open‑weight language model family. This release marks the first open‑weight model from OpenAI since GPT‑2 in 2019.

The model contains approximately 20 billion parameters and uses a mixture‑of‑experts (MoE) architecture. This design allows it to activate only a portion of its parameters for each token, delivering strong performance while keeping compute requirements manageably.

The best part about the gpt-oss-20b is that it can be deployed on hardware with 16 GB of GPU memory. You can easily run the gpt-oss-20b on the NVIDIA H100 GPU.

Key Features of gpt-oss-20b

Here are the key features of gpt-oss-20b mode:

-

Efficient Mixture‑of‑Experts Architecture

gpt-oss-20b uses a 21B‑parameter Transformer with a mixture‑of‑experts (MoE) design, activating only 3.6B parameters per token. This allows the model to deliver high reasoning performance while staying lightweight enough for consumer GPUs with ~16 GB VRAM.

-

Large 128k Context Window

It supports up to 128,000 tokens of context, making it suitable for long‑document understanding, multi‑step reasoning and agentic workflows without frequent truncation or context loss.

-

Strong Reasoning and Tool Use

gpt-oss-20b matches or exceeds OpenAI o3‑mini on benchmarks like AIME (competition mathematics), MMLU and HealthBench. It also shows strong chain‑of‑thought reasoning, few‑shot function calling and tool usage such as Python execution or web search.

-

Optimised for Local and Edge Deployment

Designed for on‑device inference, GPT‑OSS‑20B can run on edge devices, local servers or consumer GPUs for cost‑effective private deployments and rapid iteration without heavy cloud infrastructure.

-

Open‑Weight, Fully Customisable

The model is released under the Apache 2.0 license, so anyone can fine‑tune, modify and deploy gpt-oss-20b freely. It also exposes full chain‑of‑thought outputs and supports structured outputs for integration into agentic and production workflows.

If you’re planning to try the latest gpt-oss-20b model, you’re in the right place. Check out our guide below to get started.

Steps to Deploy gpt-oss-20b

Now, let's walk through the step-by-step process of deploying gpt-oss-20b on Hyperstack.

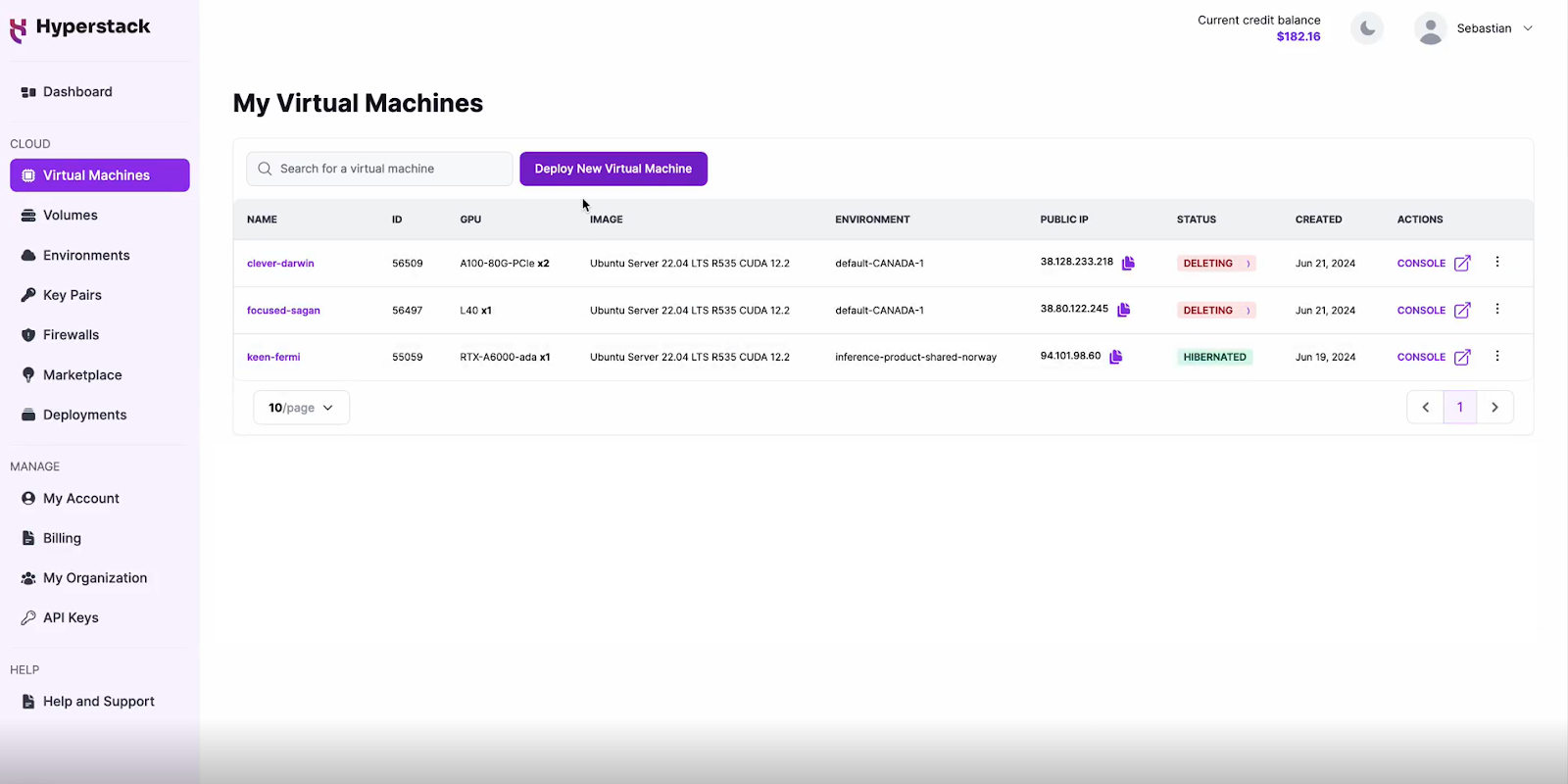

Step 1: Accessing Hyperstack

- Go to the Hyperstack website and log in to your account.

- If you're new to Hyperstack, you'll need to create an account and set up your billing information. Check our documentation to get started with Hyperstack.

- Once logged in, you'll be greeted by the Hyperstack dashboard, which provides an overview of your resources and deployments.

Step 2: Deploying a New Virtual Machine

Initiate Deployment

- Look for the "Deploy New Virtual Machine" button on the dashboard.

- Click it to start the deployment process.

Select Hardware Configuration

- For gpt-oss-20b GPU requirements, go to the hardware options and choose the "1xNVIDIA H100 PCIe" flavour.

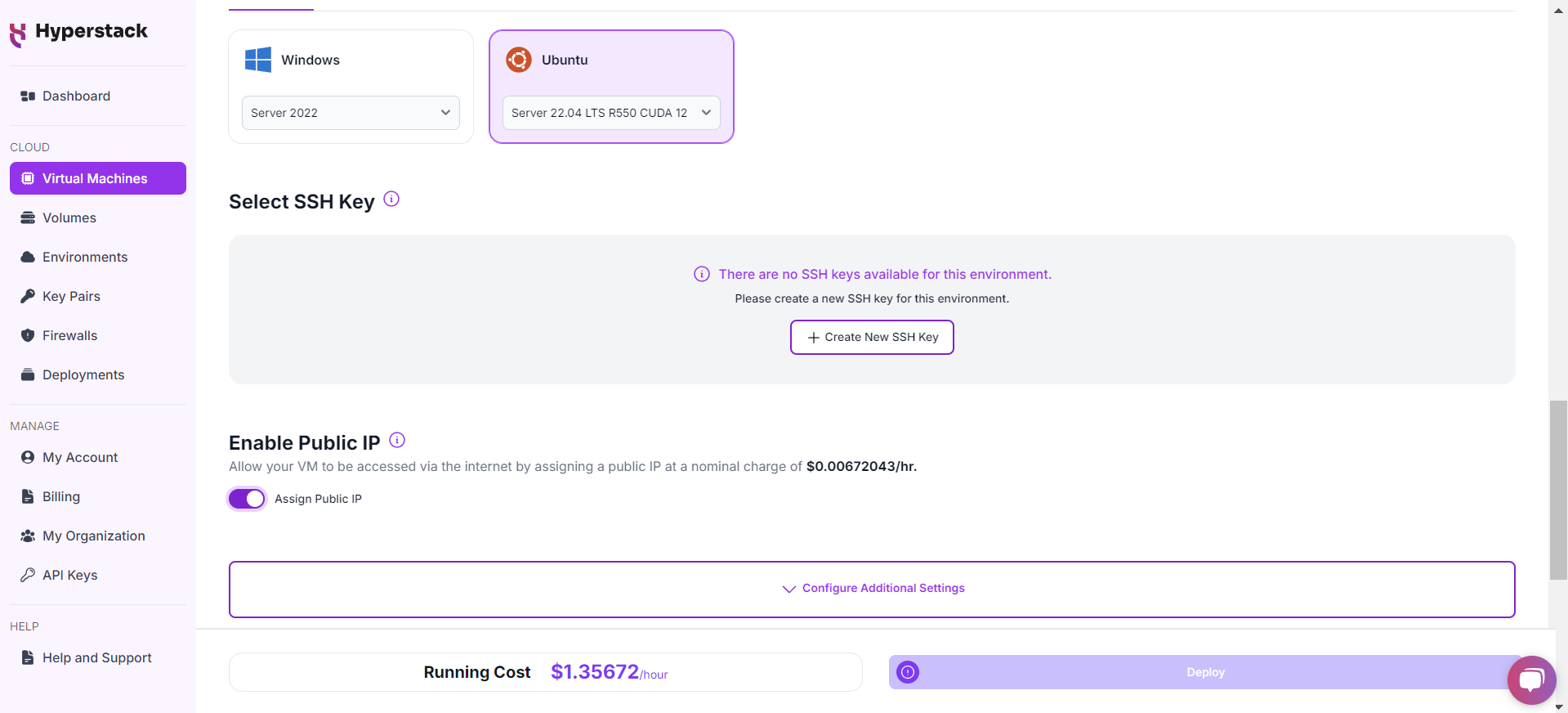

Choose the Operating System

- Select the "Ubuntu Server 24.04 LTS R570 CUDA 12.8 with Docker".

Select a keypair

- Select one of the keypairs in your account. Don't have a keypair yet? See our Getting Started tutorial for creating one.

Network Configuration

- Ensure you assign a Public IP to your Virtual machine [See the attached screenshot].

- This allows you to access your VM from the internet, which is crucial for remote management and API access.

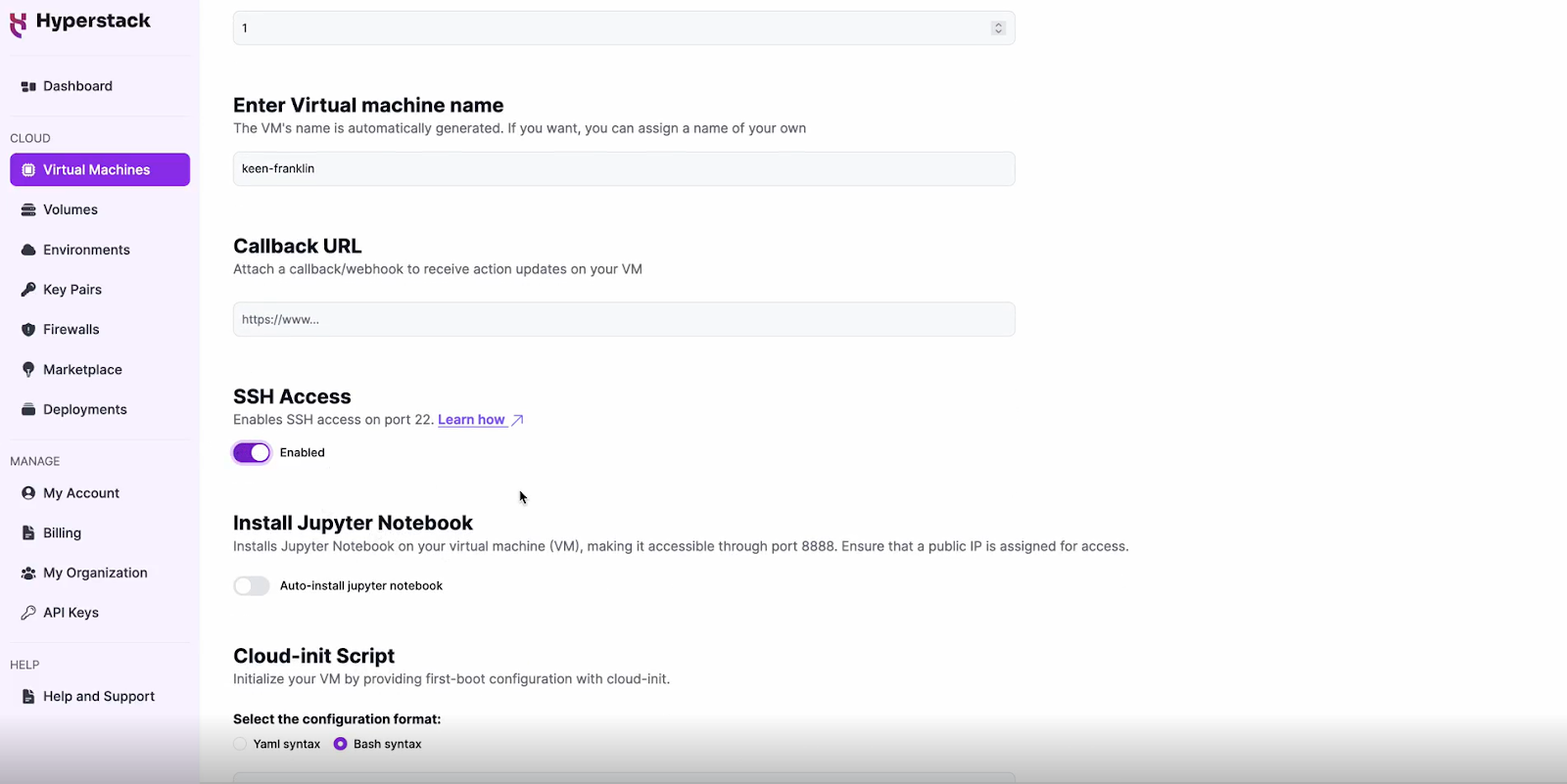

Enable SSH Access

- Make sure to enable an SSH connection.

- You'll need this to securely connect and manage your VM.

- Double-check all your settings.

- Click the "Deploy" button to launch your virtual machine.

Step 3: Accessing Your VM

Once the initialisation is complete, you can access your VM:

Locate SSH Details

- In the Hyperstack dashboard, find your VM's details.

- Look for the public IP address, which you will need to connect to your VM with SSH.

Connect via SSH

- Open a terminal on your local machine.

- Use the command ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address] (e.g: ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0.0)

- Replace username and ip_address with the details provided by Hyperstack.

Step 4: Setting up gpt-oss-20b with Open WebUI

- To set up gpt-oss-20b, SSH into your machine. If you are having trouble connecting with SSH, watch our recent platform tour video (at 4:08) for a demo. Once connected, use the script below to set up gpt-oss-20b with OpenWebUI.

- Execute the command below to launch open-webui on port 3000.

# Create a docker network docker network create ollama-net # Start ollama runtime sudo docker run -d --gpus=all --network ollama-net -p 11434:11434 -v /home/ubuntu/ollama:/root/.ollama --name ollama --restart always ollama/ollama:latest # Pull the model sudo docker exec -it ollama ollama pull gpt-oss:20b # Start open-webui with this runtime sudo docker run -d --network ollama-net -p 3000:8080 -v open-webui:/app/backend/data --name open-webui --restart always -e OLLAMA_BASE_URL=http://ollama:11434 ghcr.io/open-webui/open-webui:main

The above script will download and host gpt-oss-20b. See the model card here: https://huggingface.co/openai/gpt-oss-20b for more information.

Interacting with gpt-oss-20b

-

Open your VM's firewall settings.

-

Allow port 3000 for your IP address (or leave it open to all IPs, though this is less secure and not recommended). For instructions, see here.

-

Visit http://[public-ip]:3000 in your browser. For example: http://198.145.126.7:3000

-

Set up an admin account for OpenWebUI and save your username and password for future logins. See the attached screenshot.

And voila, you can start talking to your self-hosted gpt-oss-20b! See an example below.

When you're finished with your current workload, you can hibernate your VM to avoid incurring unnecessary costs:

- In the Hyperstack dashboard, locate your Virtual machine.

- Look for a "Hibernate" option.

- Click to hibernate the VM, which will stop billing for compute resources while preserving your setup.

Why Deploy gpt-oss-20b on Hyperstack?

Hyperstack is a cloud platform designed to accelerate AI and machine learning workloads. Here's why it's an excellent choice for deploying gpt-oss-20b:

- Availability: Hyperstack provides access to the latest and most powerful GPUs such as the NVIDIA H100 on-demand, specifically designed to handle large language models.

- Ease of Deployment: With pre-configured environments and one-click deployments, setting up complex AI models becomes significantly simpler on our platform.

- Scalability: You can easily scale your resources up or down based on your computational needs.

- Cost-Effectiveness: You pay only for the resources you use with our cost-effective cloud GPU pricing.

- Integration Capabilities: Hyperstack provides easy integration with popular AI frameworks and tools.

FAQs

What is gpt-oss-20b?

gpt-oss-20b is OpenAI’s 21B‑parameter open‑weight language model offering strong reasoning, local deployment and full customisation under Apache 2.0.

What is the context length of gpt-oss-20b?

It supports up to 128,000 tokens, ideal for long‑document analysis, multi‑step reasoning and agentic workflows requiring extended memory.

How does gpt-oss-20b compare to GPT‑OSS‑120B?

gpt-oss-20b is smaller, lighter, and edge‑friendly, while GPT‑OSS‑120B offers higher performance for enterprise‑level reasoning and large‑scale deployments.

Does gpt-oss-20b support chain‑of‑thought reasoning?

Yes, it supports configurable chain‑of‑thought reasoning with low, medium, and high effort modes for faster or deeper analysis.

Is gpt-oss-20b suitable for tool use and function calling?

Absolutely, GPT‑OSS‑20B excels at few‑shot function calling, tool use like Python execution, and structured output for automation workflows.

What license is gpt-oss-20b released under?

It’s released under Apache 2.0, allowing commercial use, modification, redistribution, and fine‑tuning without vendor lock‑in.

What are ideal use cases for gpt-oss-20b?

It’s perfect for local AI applications, long‑document reasoning, coding tasks, private inference, and cost‑effective on‑device deployments.

Subscribe to Hyperstack!

Enter your email to get updates to your inbox every week

Get Started

Ready to build the next big thing in AI?