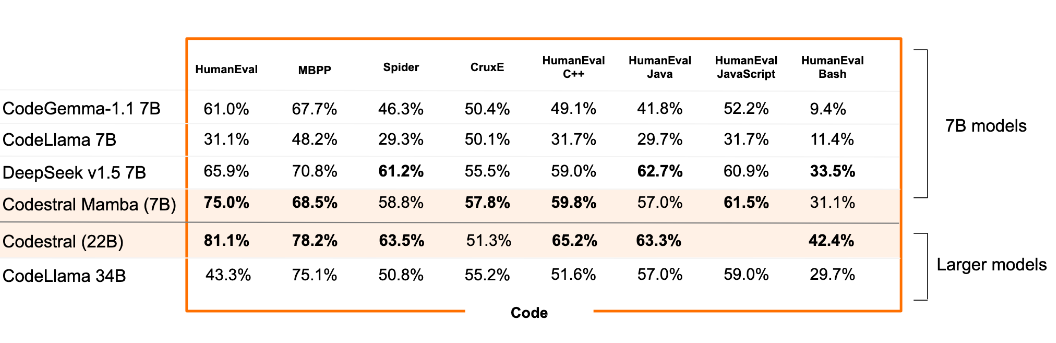

In my previous article, I covered Mistral AI's Codestral Mamba, a model built for code generation. Mistral AI is not slowing down: it has also released MathΣtral 7B, a model built for advanced mathematical reasoning. It has already posted strong results on benchmarks such as MATH, GSM8K (grade-school math), AMC and GRE Math. Read on as we explore the features and capabilities of Mathstral and learn how to deploy Mistral AI's math model with Hyperstack.

What is Mathstral?

Mathstral is a 7B-parameter language model developed by Mistral AI, designed specifically for mathematical reasoning and scientific discovery. Mistral AI released it as a tribute to Archimedes, the legendary Greek mathematician and physicist, whose 2311th anniversary fell in the year of release.

Key Features of Mathstral

The key features of Mathstral include:

- Built on Mistral 7B: The model leverages the capabilities of Mistral 7B as its foundation, inheriting its efficient architecture while specialising in mathematical tasks.

- Specialisation in STEM subjects: Mathstral is fine-tuned to excel in mathematics, science, technology and engineering tasks. This sets it apart from general-purpose language models.

- 32k context window: This extended context allows the model to handle longer and more complex mathematical problems for advanced problem-solving.

- Released under Apache 2.0 license: The model is available under the Apache 2.0 license to promote open-source use and collaboration.

- Collaboration with Project Numina: Mathstral was produced in the context of Mistral AI's collaboration with Project Numina, as part of its wider support for academic projects.

- Instructed model design: Mathstral is built to follow instructions, so it can be used directly or fine-tuned and adapted to specific use cases in various mathematical domains.

- Inference-time computation flexibility: The model's accuracy can be improved by spending additional compute during inference. This allows for scalability in high-stakes applications.

Performance Benchmarks of Mathstral

Mathstral has demonstrated impressive performance across various industry-standard benchmarks for mathematical reasoning and STEM subjects. Check out the performance benchmarks below:

Source: https://mistral.ai/news/mathstral/

Observations

These benchmarks demonstrate Mathstral's strong capabilities across various mathematical domains and difficulty levels. Let's analyse these results:

- MATH Benchmark: The base performance of 56.6% on the MATH benchmark is already impressive for a 7B parameter model. Mistral AI says that Mathstral can achieve significantly better results with increased inference-time computation.

- GSM8K: The 77.1% score on GSM8K, a benchmark of grade-school math word problems, shows Mathstral’s proficiency in basic arithmetic and problem-solving. This capability is essential for educational applications and foundational mathematical reasoning.

- Odyssey Math, GRE Math, and AMC: These scores show Mathstral’s ability to handle increasingly complex mathematical concepts. The performance on GRE Math (56.9%) is particularly noteworthy, as it indicates the model can handle problems at the level expected of applicants to graduate programmes.

- AIME: While the score of 2/30 on the AIME 2024 benchmark might seem low, it's important to note that the AIME is an extremely challenging invitational competition, even for top high-school competitors.

- Scalability: The model's ability to improve performance with additional inference-time computation (as seen in the MATH benchmark results) is a crucial feature. It allows you to balance speed and accuracy based on your specific needs and available resources.
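Majority voting is one common way to spend extra inference-time compute: sample several completions for the same problem and keep the answer that appears most often. The sketch below is a minimal illustration under stated assumptions, not Mistral AI's evaluation code. The helper names (`extract_final_answer`, `majority_vote`) are hypothetical, and it assumes the final answer is the last number in each completion, which will not hold for every problem format.

```python
import re
from collections import Counter
from typing import List, Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the last number found in a completion, or None if there is none.

    Assumption: the model states its final numeric answer last.
    """
    matches = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return matches[-1] if matches else None


def majority_vote(completions: List[str]) -> Optional[str]:
    """Pick the most frequent extracted answer across sampled completions."""
    answers = [a for a in map(extract_final_answer, completions) if a is not None]
    if not answers:
        return None
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical sampled completions for one problem.
samples = ["so x = 12", "The answer is 12.", "I get 15"]
voted = majority_vote(samples)
```

Sampling more completions raises the cost per question roughly linearly, which is the speed-versus-accuracy trade-off described above.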

Deploying Mathstral

Mathstral is designed as an instructed model, so you can use its mathematical reasoning capabilities out of the box or fine-tune it for specific applications. Here's how you can get started with Mathstral:

- Accessing the Model: The model weights are hosted on Hugging Face, providing easy access for researchers and developers.

- Immediate Use: You can deploy Mathstral quickly with the mistral-inference SDK and integrate it into existing workflows or applications.

- Customisation: For specialised use cases, mistral-finetune, a codebase based on LoRA, lets you adapt Mathstral to specific mathematical domains or problem types.

- Infrastructure for Immediate Use and Customisation: On Hyperstack, you can set up an environment to run the Mathstral model. You can start with cards such as the NVIDIA RTX A6000; for full-scale inference we recommend the NVIDIA L40 and NVIDIA A100, and for fine-tuning the NVIDIA H100 PCIe and NVIDIA H100 SXM. You can download Mathstral from Hugging Face and load it into a web UI on Hyperstack.
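As a rough sketch of the first steps, the snippet below estimates how much GPU memory the weights alone need and downloads them with the `huggingface_hub` library. Several things here are assumptions rather than facts from this article: the repository id `mistralai/Mathstral-7B-v0.1` (check the model card), the `mistral_models` folder layout, and the helper names. The memory figure uses the nominal 7B parameter count at 2 bytes per parameter (fp16/bf16) and ignores activations, the KV cache and fine-tuning state, all of which need extra headroom.

```python
from pathlib import Path

# Assumption: repository id on the Hugging Face Hub; verify it on the model card.
REPO_ID = "mistralai/Mathstral-7B-v0.1"


def weight_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights only, in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


def local_model_dir(base: Path, repo_id: str = REPO_ID) -> Path:
    """Hypothetical layout: <base>/mistral_models/<model name>."""
    return base / "mistral_models" / repo_id.split("/")[-1]


def download_weights(target: Path, repo_id: str = REPO_ID) -> Path:
    """Download the repository snapshot into `target` and return the path."""
    # Imported here so the helpers above work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=repo_id, local_dir=target)
    return target
```

On a Hyperstack VM, calling `download_weights(local_model_dir(Path.home()))` would place the files under your home directory, and `weight_memory_gb(7e9)` gives about 14 GB for half-precision weights, which is a useful lower bound when choosing a GPU.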

Sign up on Hyperstack Today to Lead AI Innovation!

FAQs

What is Mathstral?

Mathstral is Mistral AI’s latest 7B parameter model specialising in mathematical reasoning and scientific discovery.

How does Mathstral perform on the MATH benchmark?

Mathstral scores 56.6% on MATH out of the box, rising to 74.59% when additional compute is spent at inference time.

Can Mathstral be fine-tuned for specific use cases?

Yes, Mathstral can be fine-tuned using mistral-finetune for specialised mathematical applications.

Where can I access Mathstral's model weights?

Mathstral's weights are hosted on Hugging Face for easy access and integration.

What license is Mathstral released under?

Mathstral is available under the Apache 2.0 license for academic and commercial use.
