The Qwen 2.5 Coder series is a cutting-edge AI model designed for code generation, repair and reasoning. With versions ranging from 0.5B to 32B parameters, the 32B model excels in code-related benchmarks, beating some open-source models in tasks such as code generation, multi-language repair and user preference alignment. It supports over 40 languages and is ideal for complex coding tasks. In this post, we'll explore how to deploy Qwen 2.5 Coder on Hyperstack and integrate it as a private coding assistant.

The latest Qwen 2.5 Coder series targets code generation, repair and reasoning, and comes in sizes ranging from 0.5B to 32B parameters. The 32B model achieves state-of-the-art performance across multiple benchmarks, matching and even surpassing some open-source models in tasks like code generation (EvalPlus, LiveCodeBench), multi-language repair (MdEval) and user preference alignment (Code Arena). It supports over 40 programming languages, which makes it well suited to complex coding tasks.

Read on to learn how to deploy Qwen 2.5 Coder on Hyperstack. We will also show you how to integrate this LLM as your private coding assistant!

Why Deploy on Hyperstack?

Hyperstack is a cloud platform designed to accelerate AI and machine learning workloads. Here's why it's an excellent choice for deploying Qwen 2.5 Coder 32B Instruct:

- Availability: Hyperstack provides access to the latest and most powerful GPUs such as the NVIDIA A100 and the NVIDIA H100 SXM on-demand, specifically designed to handle large language models.

- Ease of Deployment: With pre-configured environments and one-click deployments, setting up complex AI models becomes significantly simpler on our platform.

- Scalability: You can easily scale your resources up or down based on your computational needs.

- Cost-Effectiveness: You pay only for the resources you use with our cost-effective cloud GPU pricing.

- Integration Capabilities: Hyperstack provides easy integration with popular AI frameworks and tools.

Deployment Process

Now, let's walk through the step-by-step process of deploying Qwen 2.5 Coder 32B Instruct on Hyperstack.

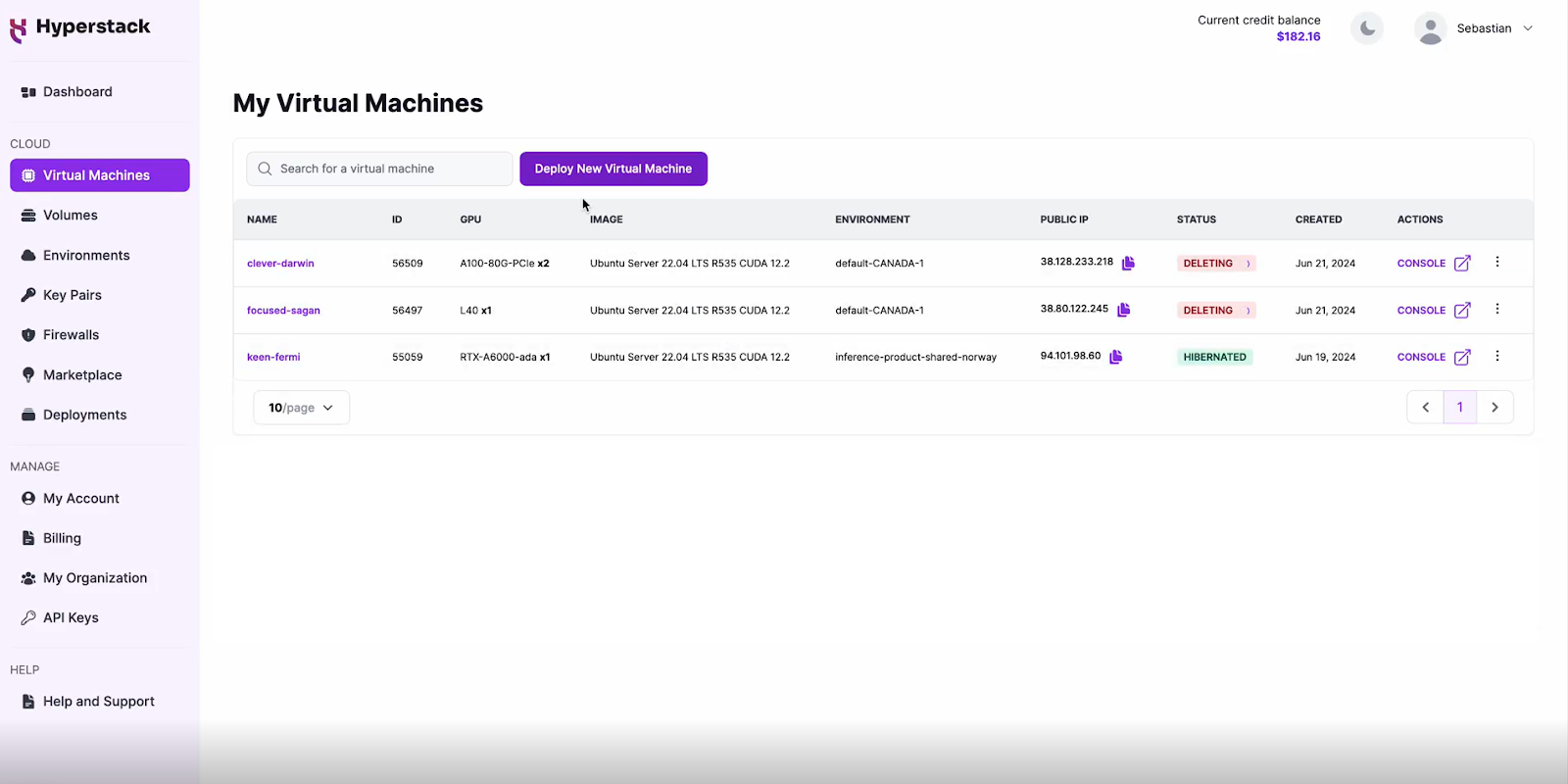

Step 1: Accessing Hyperstack

- Go to the Hyperstack website and log in to your account.

- If you're new to Hyperstack, you'll need to create an account and set up your billing information. Check our documentation to get started with Hyperstack.

- Once logged in, you'll be greeted by the Hyperstack dashboard, which provides an overview of your resources and deployments.

Step 2: Deploying a New Virtual Machine

Initiate Deployment

- Look for the "Deploy New Virtual Machine" button on the dashboard.

- Click it to start the deployment process.

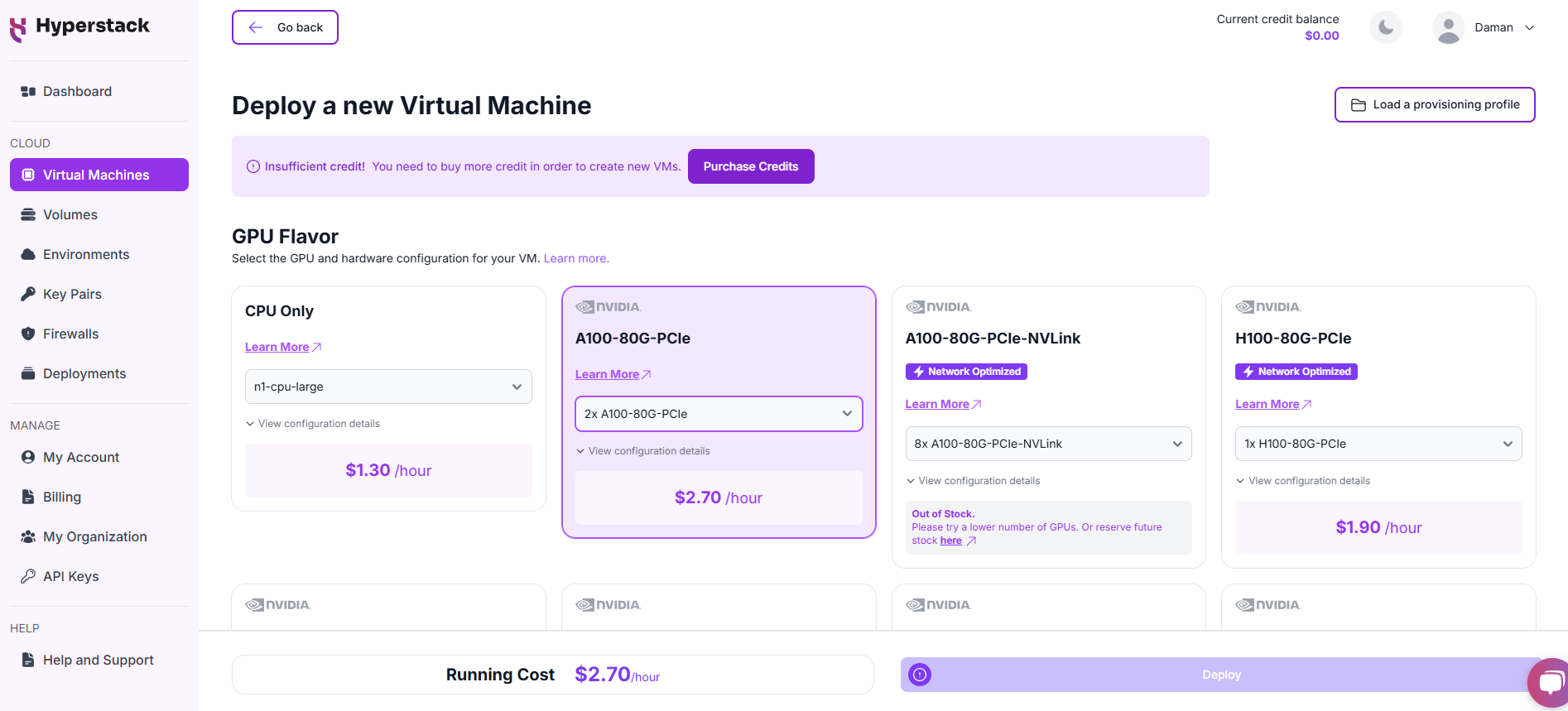

Select Hardware Configuration

- In the hardware options, choose the "2xA100-80G-PCIe" flavour.

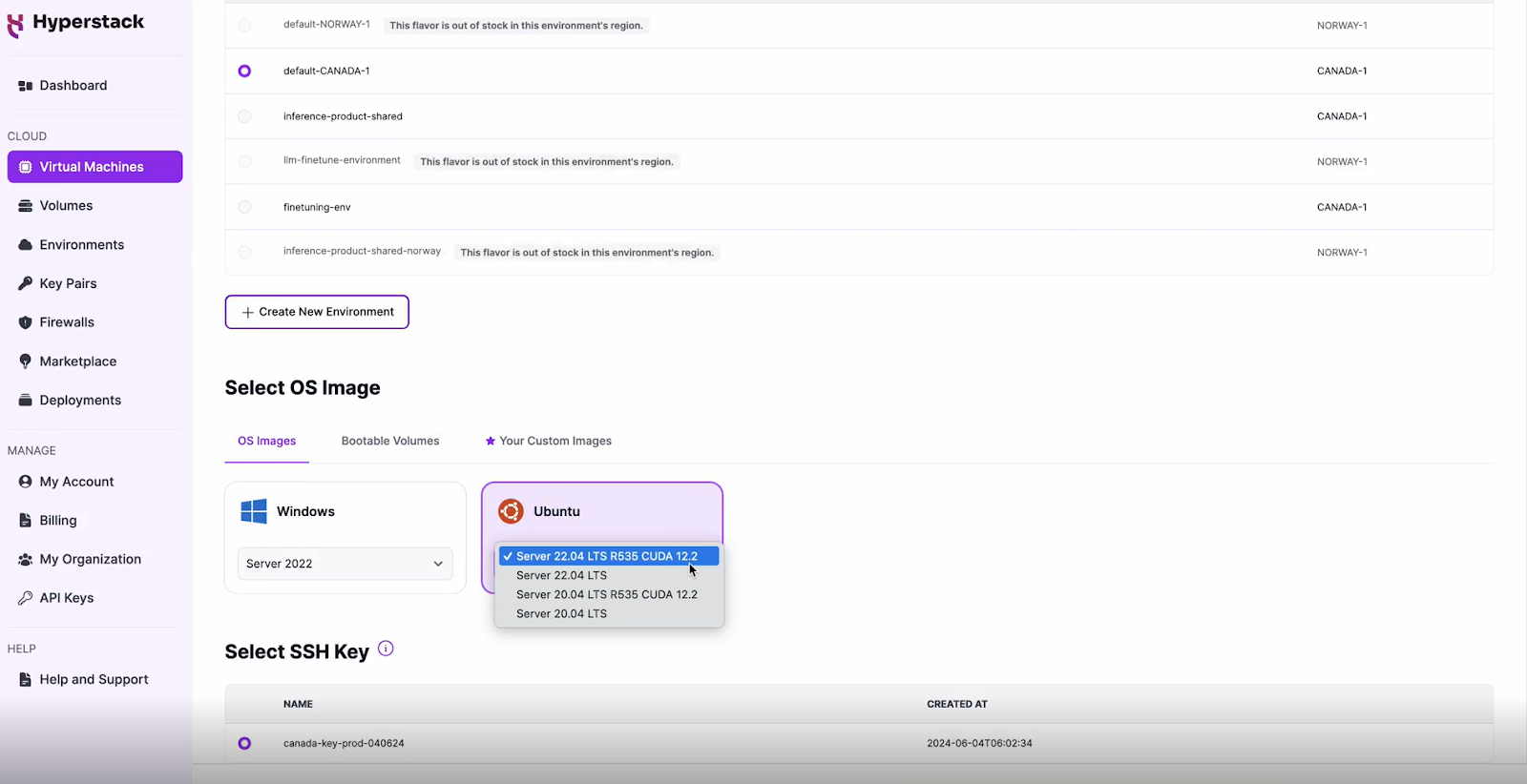
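The guide does not spell out why a two-GPU flavour is used, but a back-of-the-envelope estimate is a useful sanity check. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts weights only; the KV cache and runtime overhead come on top. The helper name `weights_gb` is hypothetical.

```shell
# weights_gb PARAMS_IN_BILLIONS BYTES_PER_PARAM
# Approximate memory needed for the model weights alone, in GB
# (1 billion parameters at 1 byte each is about 1 GB).
weights_gb() {
    echo $(( $1 * $2 ))
}

weights_gb 32 2    # 32B parameters at 16-bit precision: prints 64
```

Under these assumptions the weights take roughly 64 GB, which would leave little headroom on a single 80 GB card, while the 2xA100-80G flavour offers 160 GB in total.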

Choose the Operating System

- Select the "Server 22.04 LTS R535 CUDA 12.2 with Docker" image.

- This image comes with Ubuntu 22.04 LTS, NVIDIA drivers (R535), CUDA 12.2 and Docker pre-installed, providing an optimised environment for AI workloads.

Select a keypair

- Select one of the keypairs in your account. Don't have a keypair yet? See our Getting Started tutorial for creating one.

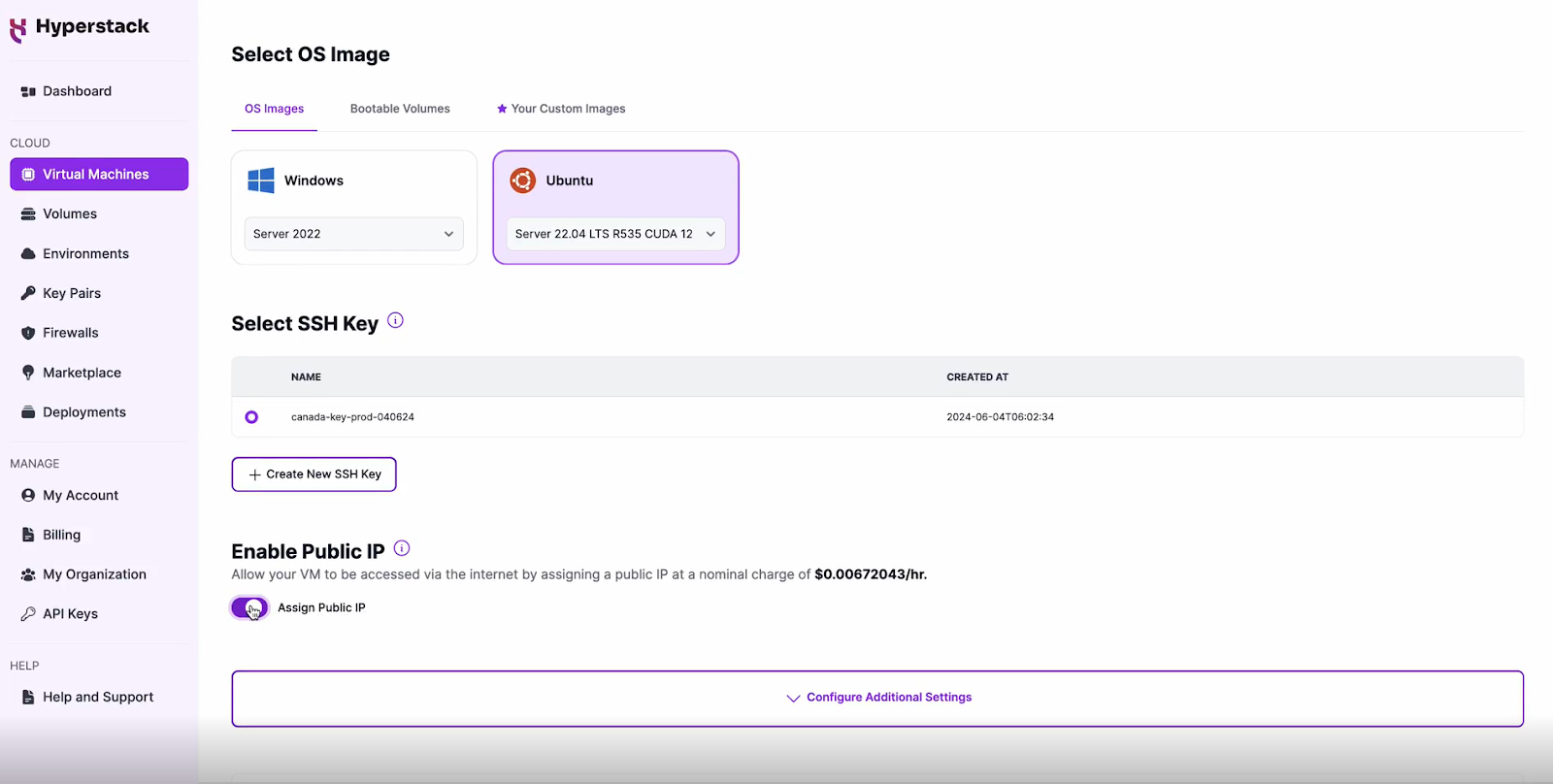

Network Configuration

- Ensure you assign a public IP to your virtual machine.

- This allows you to access your VM from the internet, which is crucial for remote management and API access.

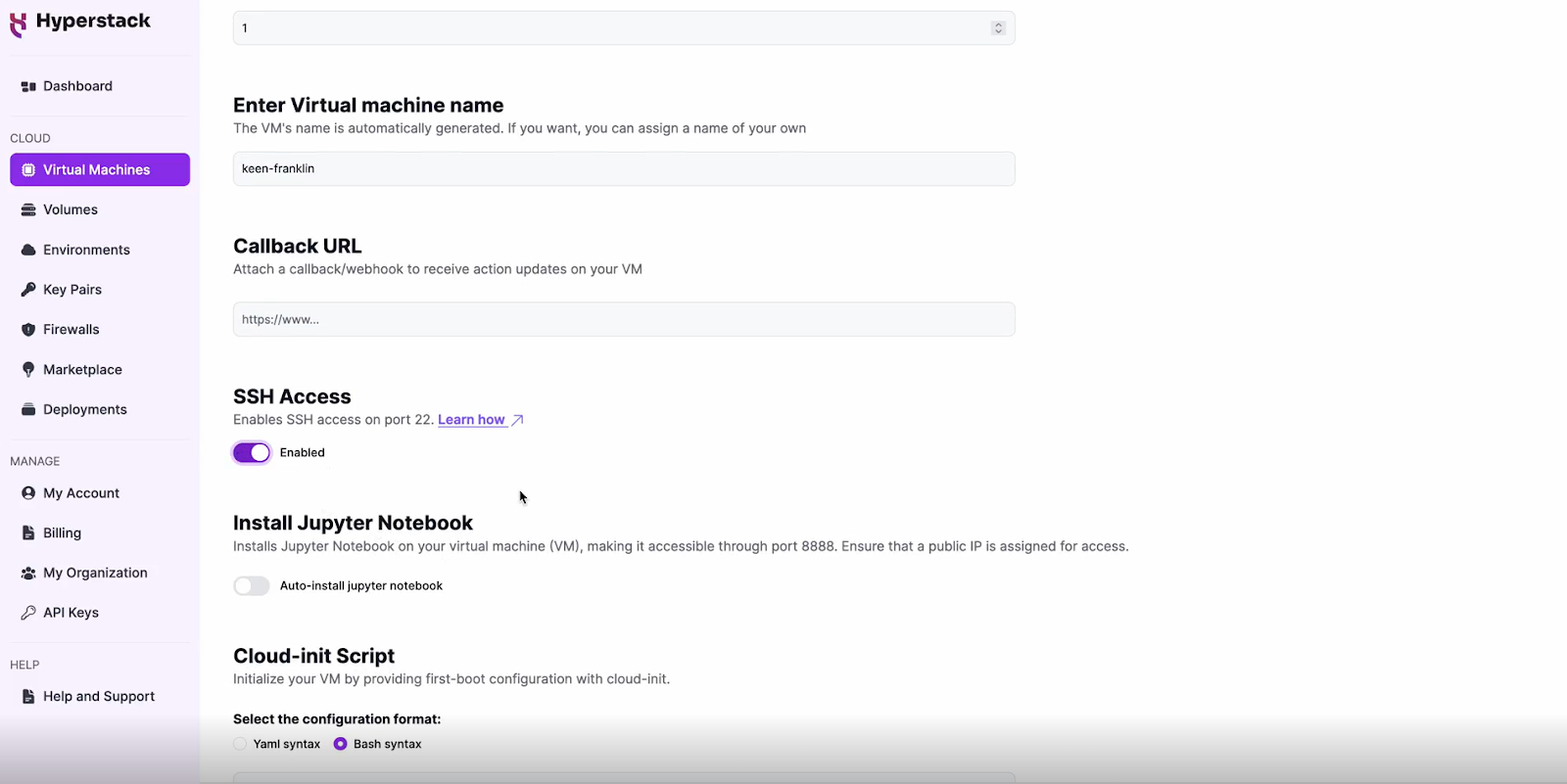

Enable SSH Access

- Make sure to enable an SSH connection.

- You'll need this to securely connect and manage your VM.

Configure Additional Settings

- Look for an "Additional Settings" or "Advanced Options" section.

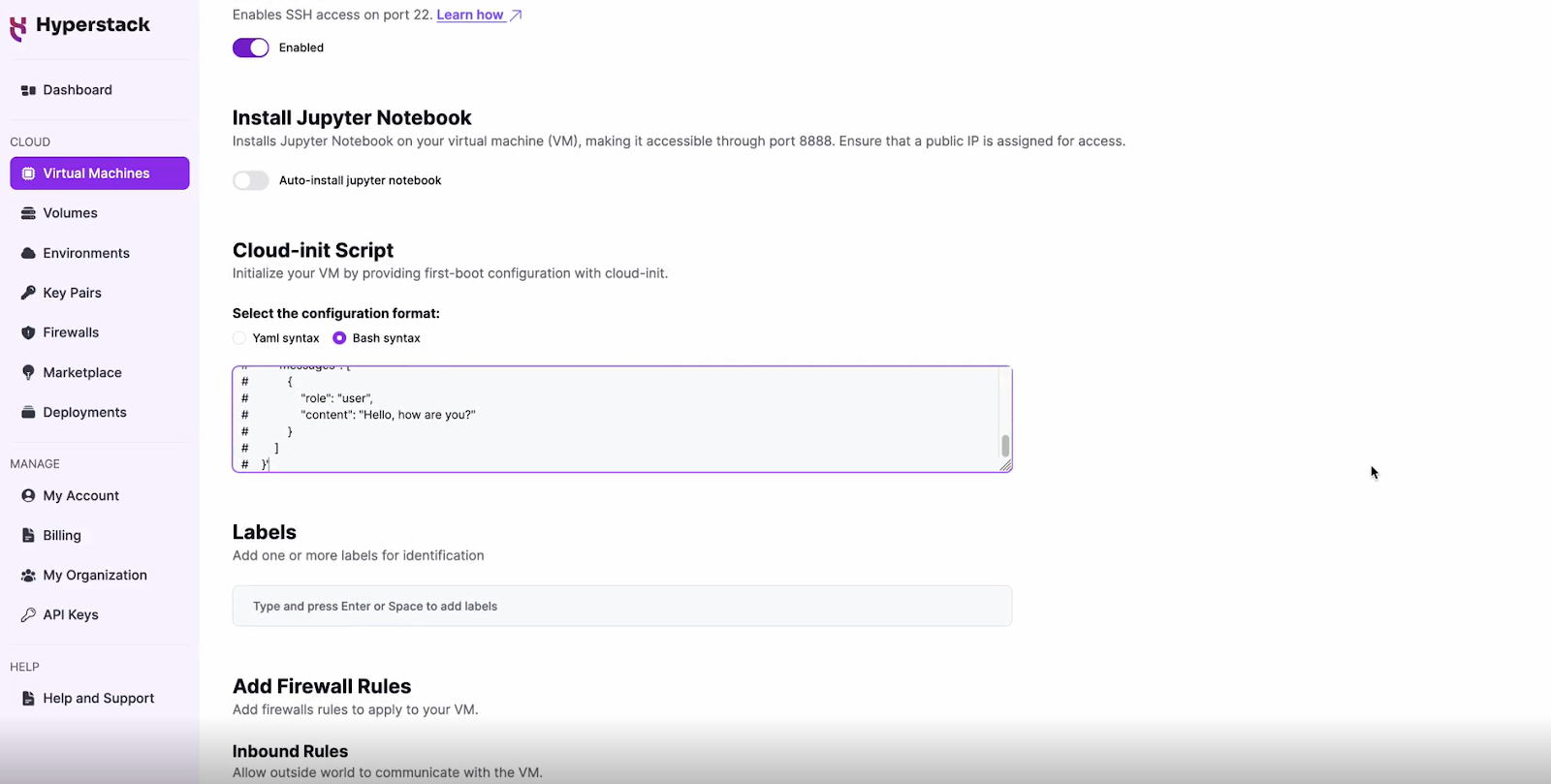

- Here, you'll find a field for cloud-init scripts. This is where you'll paste the initialisation script. Click here to get the cloud-init script!

- Ensure the script is in bash syntax. This script will automate the setup of your Qwen 2.5 Coder 32B Instruct environment.

DISCLAIMER: This tutorial deploys Qwen 2.5 Coder as a one-off setup for demo purposes. For production environments, consider production-grade deployments with API keys, secret management, monitoring, etc.

Review and Deploy

- Double-check all your settings.

- Click the "Deploy" button to launch your virtual machine.

Step 3: Initialisation and Setup

After deploying your VM, the cloud-init script will begin its work. This process typically takes about 7 minutes. During this time, the script performs several crucial tasks:

- Dependencies Installation: Installs all necessary libraries and tools required to run Qwen 2.5 Coder 32B Instruct.

- Model Download: Fetches the Qwen 2.5 Coder 32B Instruct model files from the specified repository.

- API Setup: Configures the vLLM engine and sets up an OpenAI-compatible API endpoint on port 8000.

While waiting, you can prepare your local environment for SSH access and familiarise yourself with the Hyperstack dashboard.
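Rather than guessing when the API is up, you can poll it from a shell on the VM (SSH access is covered in Step 4). The sketch below is a generic retry helper; the name `wait_until` is hypothetical, and the usage line assumes the endpoint answers on `/v1/models`, which vLLM's OpenAI-compatible server normally exposes.

```shell
# wait_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, at most ATTEMPTS times,
# sleeping DELAY_SECONDS after each failed try.
# Returns 0 on the first success, 1 if every attempt failed.
wait_until() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Usage on the VM (up to 60 tries, 10 seconds apart):
#   wait_until 60 10 curl -sf http://localhost:8000/v1/models && echo "API is ready"
```

The `-f` flag makes curl exit with a non-zero status on HTTP errors, so the loop only stops once the server actually answers successfully.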

Step 4: Accessing Your VM

Once the initialisation is complete, you can access your VM:

Locate SSH Details

- In the Hyperstack dashboard, find your VM's details.

- Look for the public IP address, which you will need to connect to your VM with SSH.

Connect via SSH

- Open a terminal on your local machine.

- Use the command ssh -i [path_to_ssh_key] [os_username]@[vm_ip_address] (e.g. ssh -i /users/username/downloads/keypair_hyperstack ubuntu@0.0.0.0)

- Replace [path_to_ssh_key] with the path to your private key, and [os_username] and [vm_ip_address] with the details provided by Hyperstack.
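A mistyped address or an over-permissive key file are common reasons for a failed first connection. The sketch below wraps the SSH command with two guards; the function names `check_ipv4` and `connect_vm` are hypothetical, and it assumes your VM has an IPv4 public address.

```shell
# check_ipv4 ADDRESS
# Prints "valid" if ADDRESS is a dotted-quad IPv4 address
# (exactly four octets, each 0-255), otherwise "invalid".
check_ipv4() {
    case $1 in
        ''|*[!0-9.]*|.*|*.|*..*) echo invalid; return ;;
    esac
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address on dots into $1..$N
    IFS=$old_ifs
    if [ "$#" -ne 4 ]; then echo invalid; return; fi
    for octet in "$@"; do
        if [ "$octet" -gt 255 ]; then echo invalid; return; fi
    done
    echo valid
}

# connect_vm KEY_PATH OS_USERNAME VM_IP
connect_vm() {
    if [ "$(check_ipv4 "$3")" != "valid" ]; then
        echo "not an IPv4 address: $3" >&2
        return 1
    fi
    chmod 600 "$1"     # OpenSSH rejects private keys readable by other users
    ssh -i "$1" "$2@$3"
}

# Usage: connect_vm [path_to_ssh_key] [os_username] [vm_ip_address]
```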

Interacting with Qwen 2.5 Coder 32B Instruct

To access and experiment with Qwen 2.5 Coder 32B Instruct, SSH into your machine after completing the setup. If you are having trouble connecting with SSH, watch our recent platform tour video (at 4:08) for a demo. Once connected, run this API call on your machine to start using the model.

MODEL_NAME="Qwen/Qwen2.5-Coder-32B-Instruct"
curl -X POST http://localhost:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "'$MODEL_NAME'",
    "messages": [
      {
        "role": "user",
        "content": "Hi, how to write a Python function that prints \"Hyperstack is the greatest GPU Cloud platform\""
      }
    ]
  }'

If the API is not working after ~10 minutes, please refer to our 'Troubleshooting Qwen 2.5 Coder 32B Instruct' section below.
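Hand-writing JSON inside shell quotes gets fragile once prompts contain quotation marks. As one possible approach, the sketch below builds the request body with a small helper; the name `chat_payload` is hypothetical, and it escapes only backslashes and double quotes, so multi-line prompts are out of scope.

```shell
# chat_payload MODEL PROMPT
# Prints a single-message chat completions request body as JSON.
# Only backslashes and double quotes in PROMPT are escaped.
chat_payload() {
    escaped=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
    printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}\n' "$1" "$escaped"
}

# Usage on the VM (curl reads the body from stdin with -d @-):
#   chat_payload "Qwen/Qwen2.5-Coder-32B-Instruct" 'Write a function that prints "hello"' |
#     curl -s http://localhost:8000/v1/chat/completions \
#       -H "Content-Type: application/json" -d @-
```

The reply text sits under choices[0].message.content in the JSON response, following the OpenAI chat completions format.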

Troubleshooting Qwen 2.5 Coder 32B Instruct

If you are having any issues, you might need to restart your machine before calling the API:

- Run sudo reboot inside your VM

- Wait 5-10 minutes for the VM to reboot

- SSH into your VM

- Wait ~3 minutes for the LLM API to boot up

- Run the above API call again

If you are still having issues, try:

- Run docker ps and find the container_id of your API container

- Run docker logs [container_id] to see the logs of your container

- Use the logs to debug any issues
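The two docker steps above can be chained so you do not have to copy the container ID by hand. This is a sketch under an assumption: the cloud-init script is not shown here, so the image name filter `vllm` is a guess that you should adjust to whatever docker ps reports. The helper name `container_id_for` is hypothetical.

```shell
# container_id_for PATTERN
# Reads "ID IMAGE" lines on stdin and prints the ID of the first
# container whose image name contains PATTERN.
container_id_for() {
    awk -v pat="$1" 'index($2, pat) { print $1; exit }'
}

# Usage on the VM (shows the last 100 log lines of the matching container):
#   id=$(docker ps --format '{{.ID}} {{.Image}}' | container_id_for vllm)
#   docker logs --tail 100 "$id"
```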

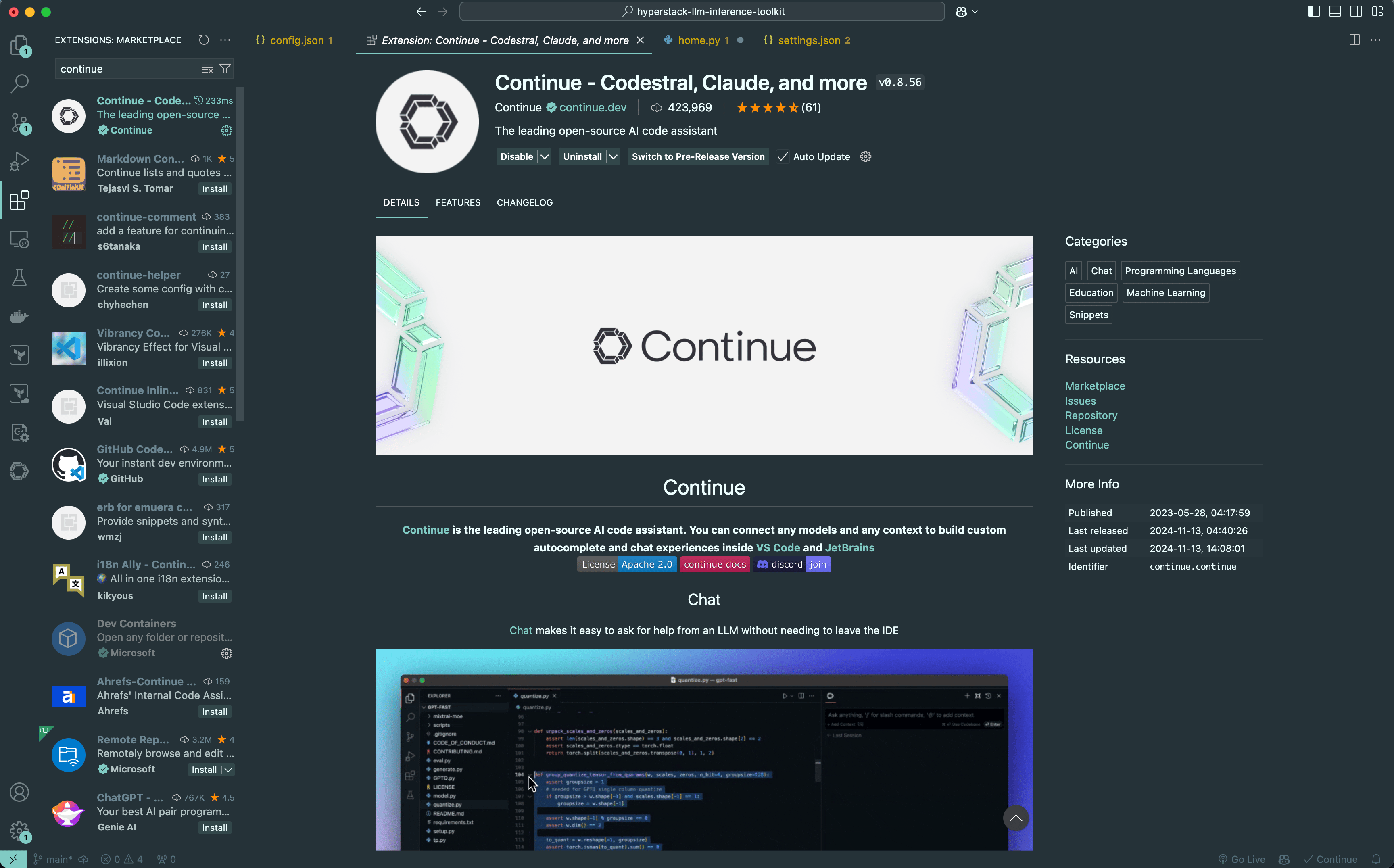

Integrating your self-hosted Qwen 2.5 Coder LLM in VSCode

Using a self-hosted LLM as a coding assistant can ensure full data privacy and control, keeping sensitive code on your infrastructure without third-party exposure. If you'd like to integrate this self-hosted LLM with VSCode for code completions and code assistant chats, follow the instructions below.

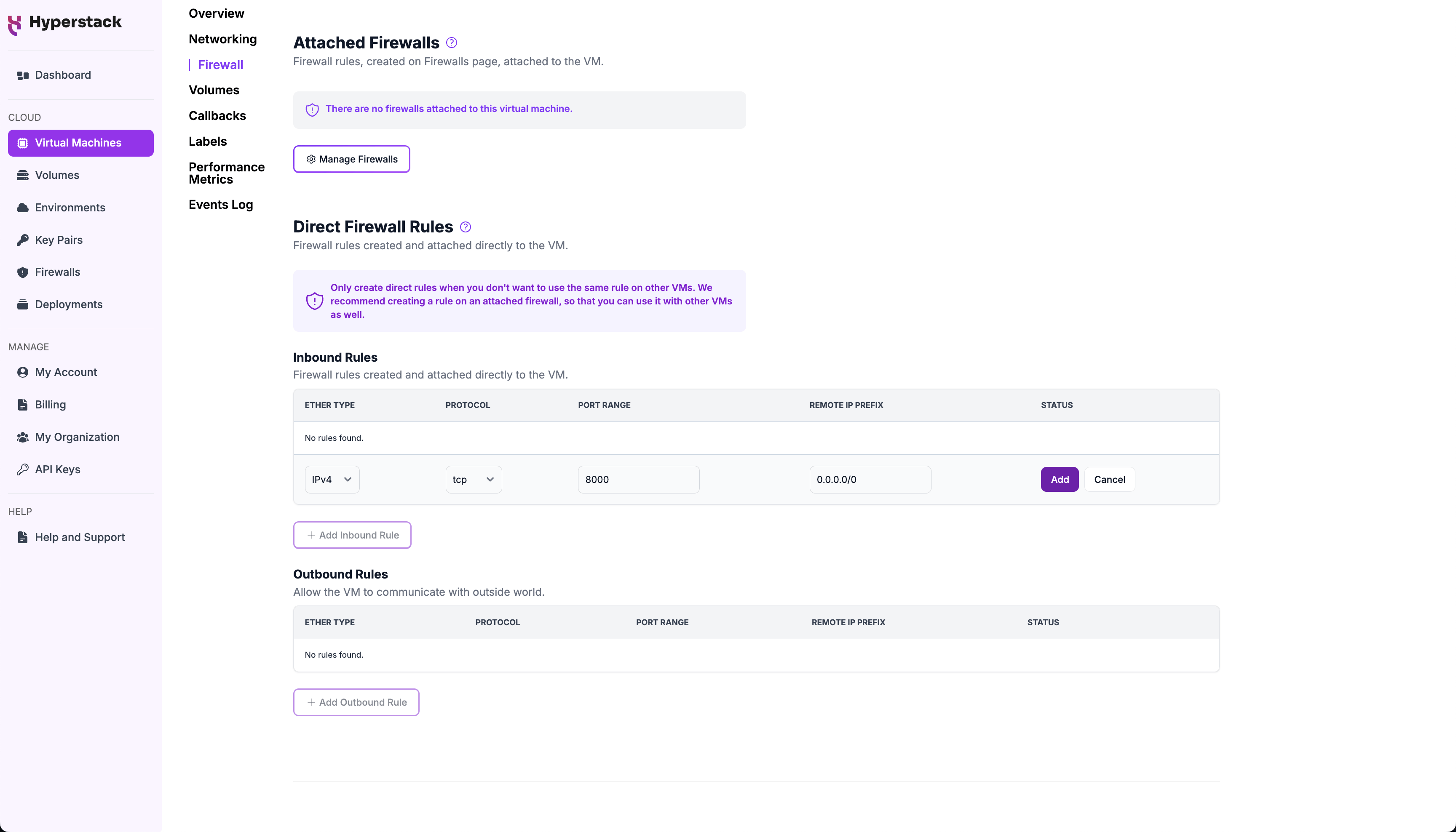

1. Open port 8000 on your machine: Follow the instructions [here] to open port 8000. Be aware that this will expose port 8000 to the public internet, allowing anyone to reach the API via the public IP address and port number. If you prefer to limit access, you can configure your VM to restrict which IP addresses are permitted on port 8000.

2. Launch VSCode: Open your Visual Studio Code editor to proceed with the integration.

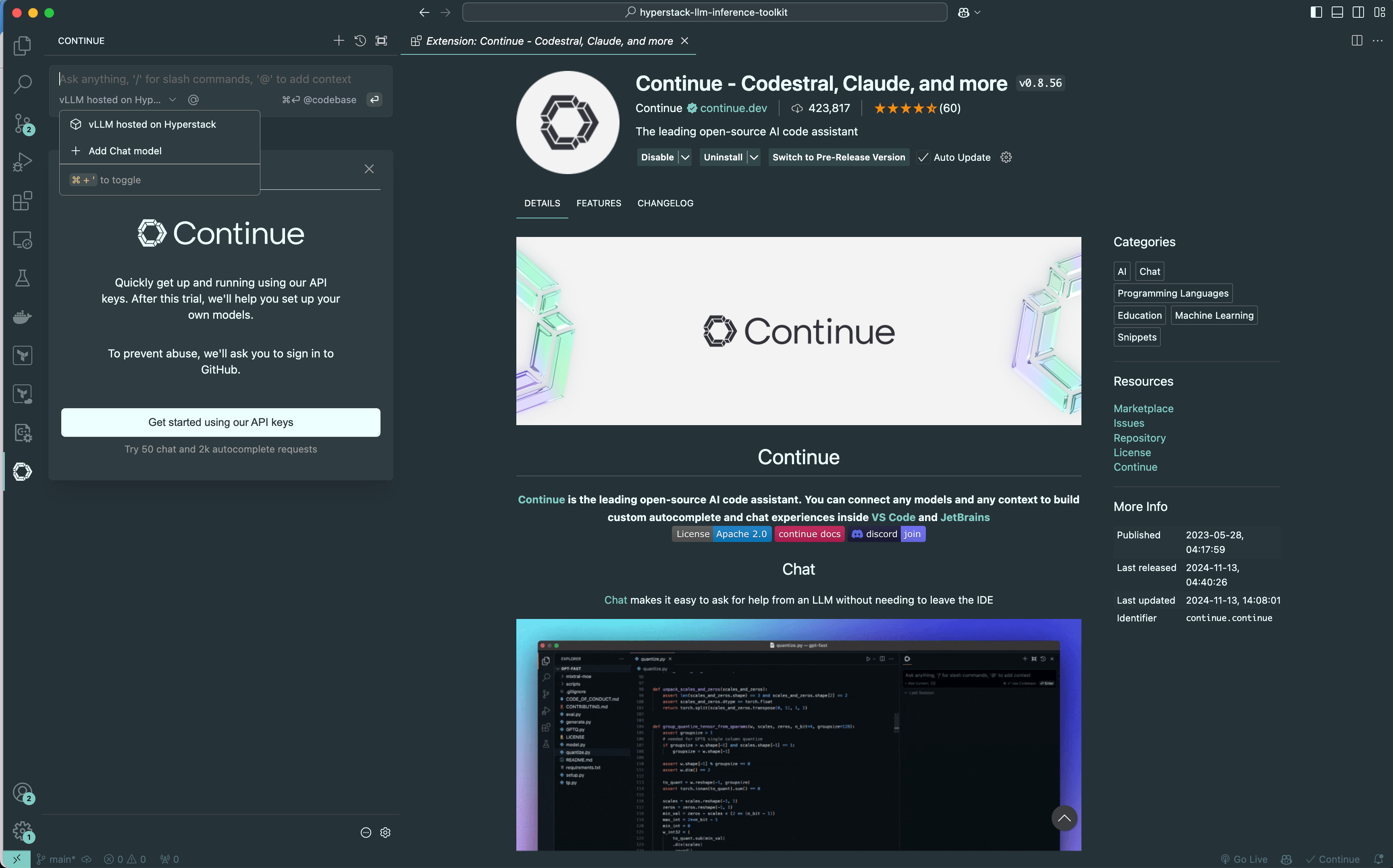

3. Install the 'Continue' extension: Go to the Extensions tab in VSCode and search for the 'Continue' extension. Install it to proceed with the setup.

4. Add the Chat model in the 'Continue' extension: On the left sidebar, click on the 'Continue' extension. At the top-left of the window, select ‘Add Chat model’ to start the configuration.

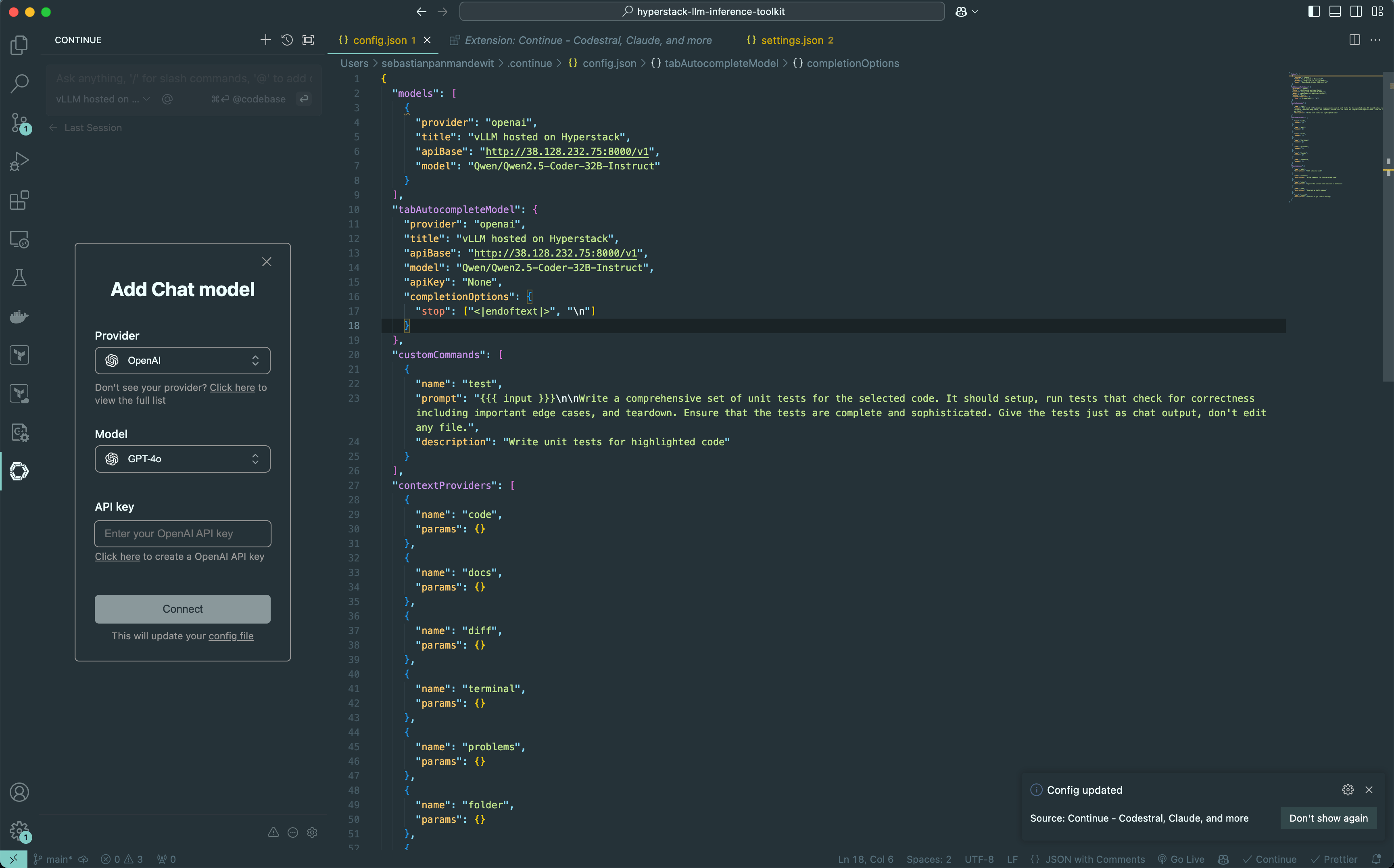

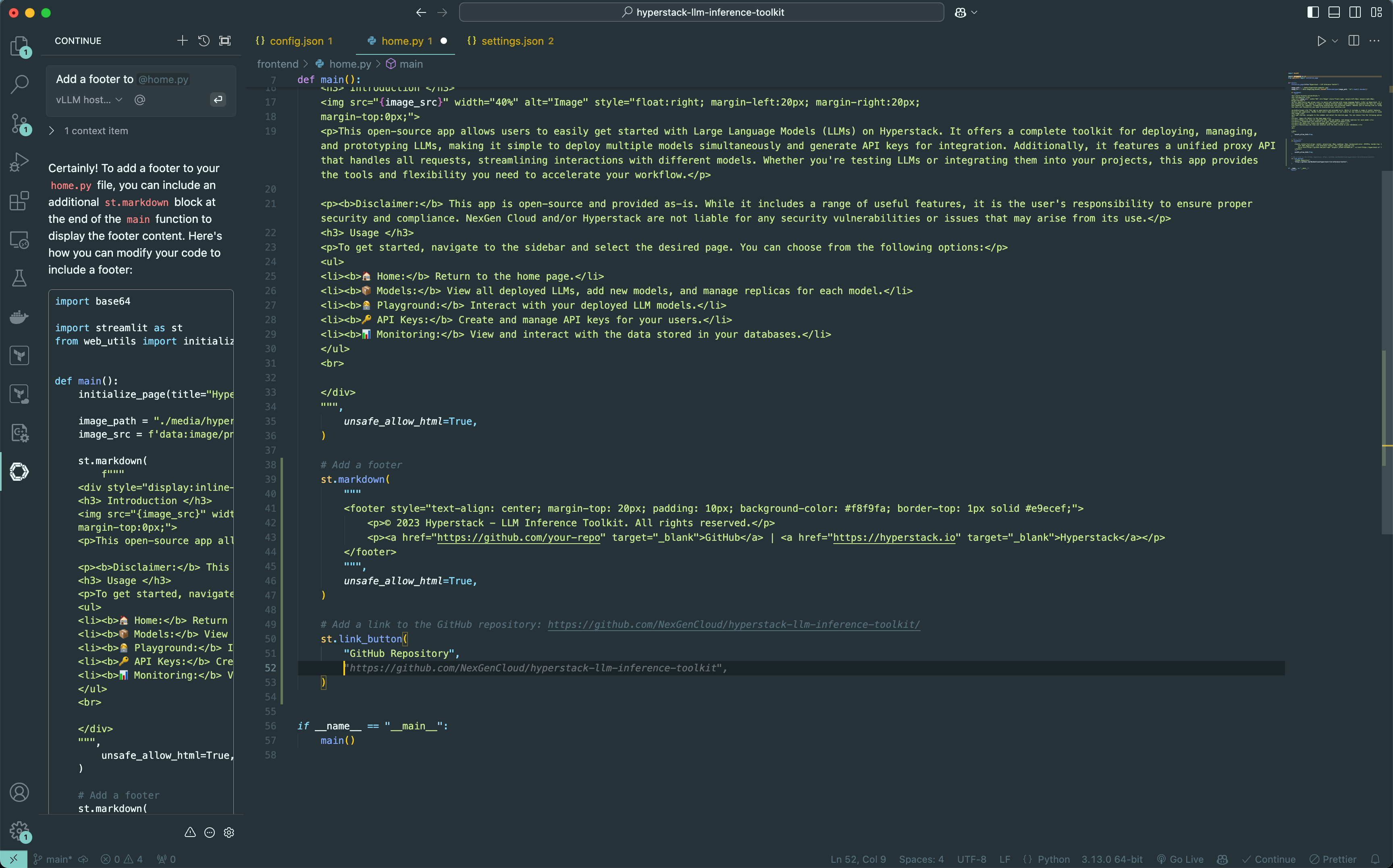

5. Modify the config file: In the configuration dialog, click the "config file" button at the bottom, which says, “This will update your config file.” This will allow you to edit the configuration file.

6. Update the config.json file: In the config.json file, input your model information, replacing [public-ip] with the public IP address of your Hyperstack VM. Don't forget to save the file by pressing CMD + S (CTRL + S on Windows and Linux).

"models": [
  {
    "provider": "openai",
    "title": "vLLM hosted on Hyperstack",
    "apiBase": "http://[public-ip]:8000/v1",
    "model": "Qwen/Qwen2.5-Coder-32B-Instruct"
  }
],
"tabAutocompleteModel": {
  "provider": "openai",
  "title": "vLLM hosted on Hyperstack",
  "apiBase": "http://[public-ip]:8000/v1",
  "model": "Qwen/Qwen2.5-Coder-32B-Instruct",
  "apiKey": "None",
  "completionOptions": {
    "stop": ["<|endoftext|>", "\n"]
  }
},

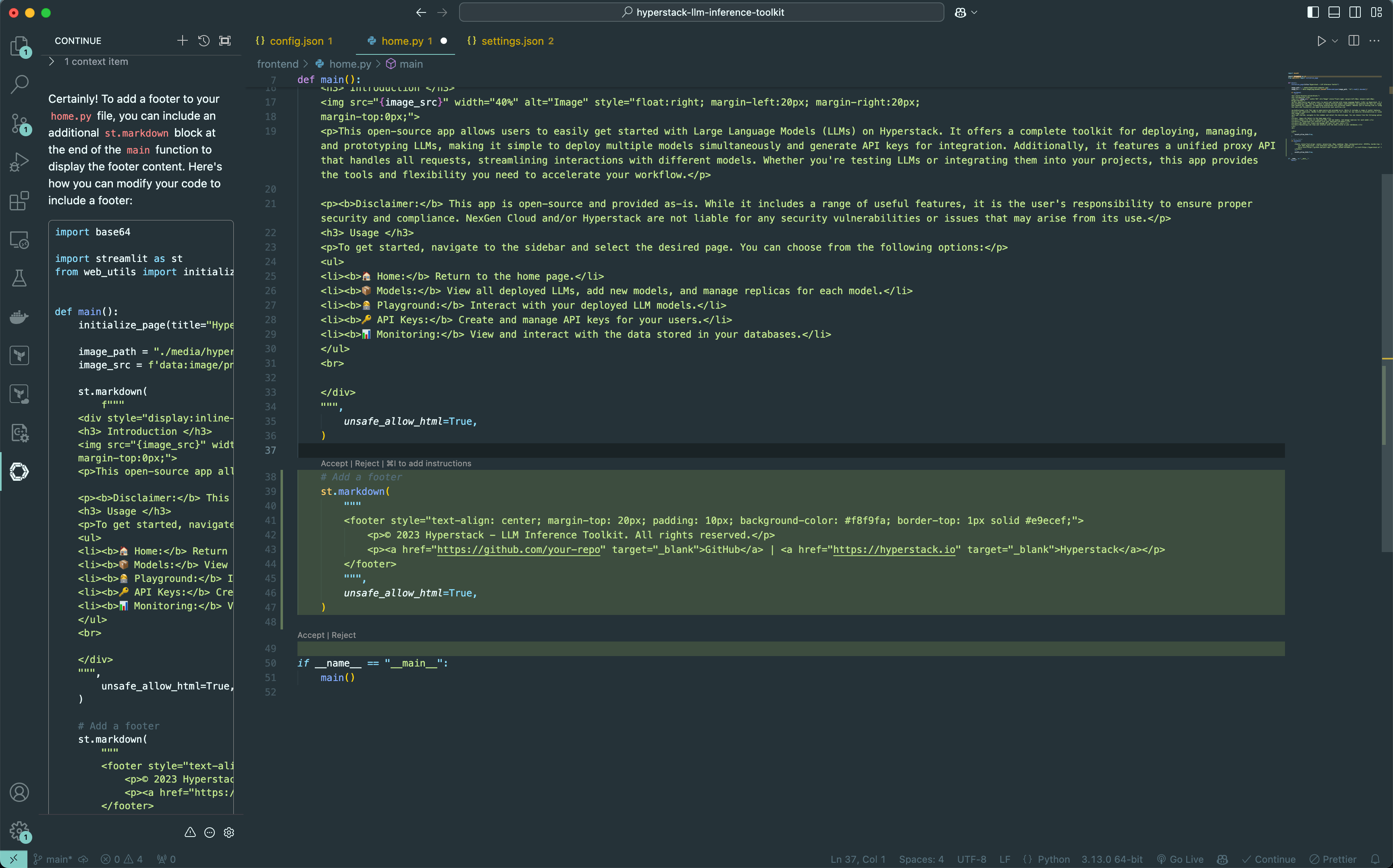

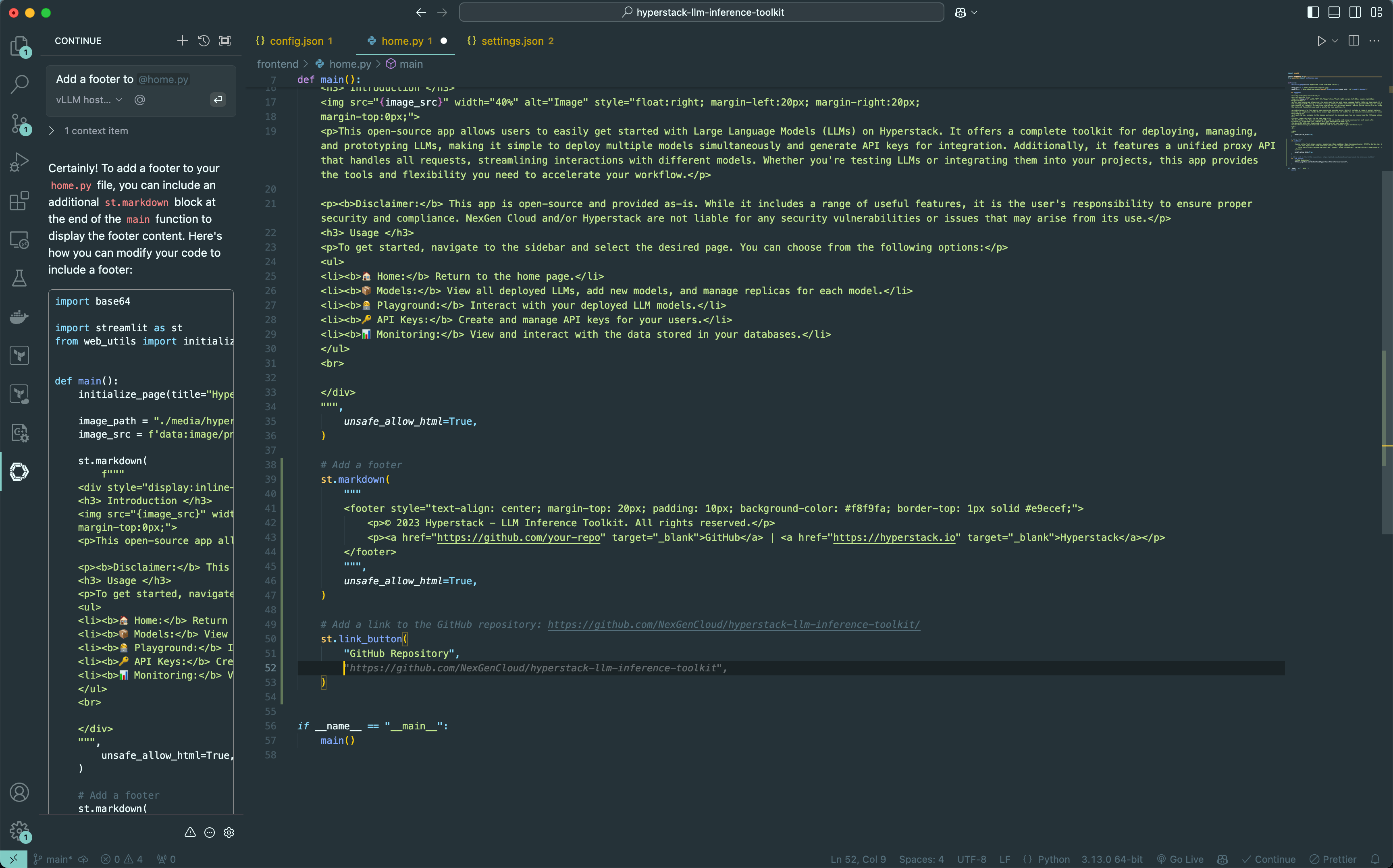

7. Interact with the self-hosted LLM: On the left sidebar, you'll now be able to chat with your self-hosted LLM. Refer to the attached image for a sample chat interface.

8. Accept code suggestions: When you receive code suggestions in the chat, click the '>' icon at the top of the suggestion. This will apply the changes to your file, highlighting them in green. An example of this is shown in the attached image.

9. Accept code insertions: If you want to accept a code insertion, simply click the 'Accept' label above the inserted code.

10. Enable auto-completion in VSCode settings: To use the auto-complete functionality, add the following lines to your VSCode settings file. Press CTRL + SHIFT + P to open the Command Palette, open settings.json (for example via "Preferences: Open User Settings (JSON)"), then add:

"github.copilot.editor.enableAutoCompletions": false,
"editor.inlineSuggest.enabled": true,
"continue.enableTabAutocomplete": true

With these steps, you're all set to enjoy a fully integrated private coding assistant within VSCode, running on your infrastructure in Hyperstack. This setup ensures full control over your data while providing you with powerful AI-driven code suggestions and completions. We wish you all the best as you boost your development workflow with Qwen 2.5 Coder on Hyperstack.
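If you would rather not leave port 8000 open to the internet, one alternative (not part of the walkthrough above) is to forward the port over SSH and point the extension at localhost instead. The sketch below only prints the command so you can review it before running; the helper name `tunnel_command` is hypothetical.

```shell
# tunnel_command KEY_PATH OS_USERNAME VM_IP
# Prints an ssh command that forwards local port 8000 to port 8000 on the VM.
#   -N  do not run a remote command, just forward
#   -L  local_port:target_host:target_port, resolved on the VM side
tunnel_command() {
    printf 'ssh -i %s -N -L 8000:localhost:8000 %s@%s\n' "$1" "$2" "$3"
}

# Usage on your local machine:
#   tunnel_command [path_to_ssh_key] [os_username] [vm_ip_address]
# While the printed command is running, check the endpoint from a second terminal:
#   curl -s http://localhost:8000/v1/models
```

With the tunnel active, the apiBase value in the extension config would be http://localhost:8000/v1 rather than the public IP.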

Step 5: Hibernating Your VM

When you're finished with your current workload, you can hibernate your VM to avoid incurring unnecessary costs:

- In the Hyperstack dashboard, locate your virtual machine.

- Look for a "Hibernate" option.

- Click to hibernate the VM, which will stop billing for compute resources while preserving your setup.

To continue your work without repeating the setup process:

- Return to the Hyperstack dashboard and find your hibernated VM.

- Select the "Resume" or "Start" option.

- Wait a few moments for the VM to become active.

- Reconnect via SSH using the same credentials as before.

FAQs

What is Qwen 2.5 Coder 32B Instruct?

It’s a large language model optimised for complex code generation, repair, and multi-language support.

How can I deploy Qwen 2.5 Coder 32B Instruct on Hyperstack?

Simply follow the deployment steps in our Hyperstack guide for quick setup.

What GPUs are recommended for running Qwen 2.5 Coder 32B Instruct?

NVIDIA A100 and H100 GPUs are ideal for handling the demands of this model.

Can I integrate Qwen 2.5 Coder 32B Instruct with VSCode?

Yes, you can set up the model for code assistance in VSCode using Hyperstack integration steps.

Is Qwen 2.5 Coder 32B Instruct suitable for production use?

Yes, but we recommend adding production-grade features such as API keys, secret management and monitoring before relying on it in production.
