Scaling AI without overspending can sound like a dream, because the rising expense of AI infrastructure is a significant barrier. Research by Epoch AI shows that the cost of training AI models has risen sharply in the past year. OpenAI’s GPT-4, released in March 2023, and Google’s Gemini, launched in December 2023, are two prominent models that have significantly increased the financial burden of AI development. According to Epoch AI, training these advanced systems was far more expensive than training previous models: the estimated cost of training Gemini ranged from $30 million to $191 million, and the estimate for GPT-4 was between $41 million and $78 million. OpenAI CEO Sam Altman has indicated that the total cost of GPT-4 exceeded $100 million, which is above the upper end of that estimate and suggests the research figures are, if anything, conservative.

Although most companies are not training LLMs as massive as GPT-4, the costs can still be significant. Many are fine-tuning existing models or training large foundation models for specific use cases. These projects are more affordable, but they still require considerable resources. As AI adoption grows, even these smaller-scale projects can become costly.

But how do businesses balance the need for powerful AI computing resources with the need to control costs? Continue reading as we discuss how to control AI compute costs with Hyperstack.

5 Tips to Reduce AI Compute Costs

Here’s how Hyperstack can help reduce AI compute costs while maintaining the performance needed for AI-driven solutions.

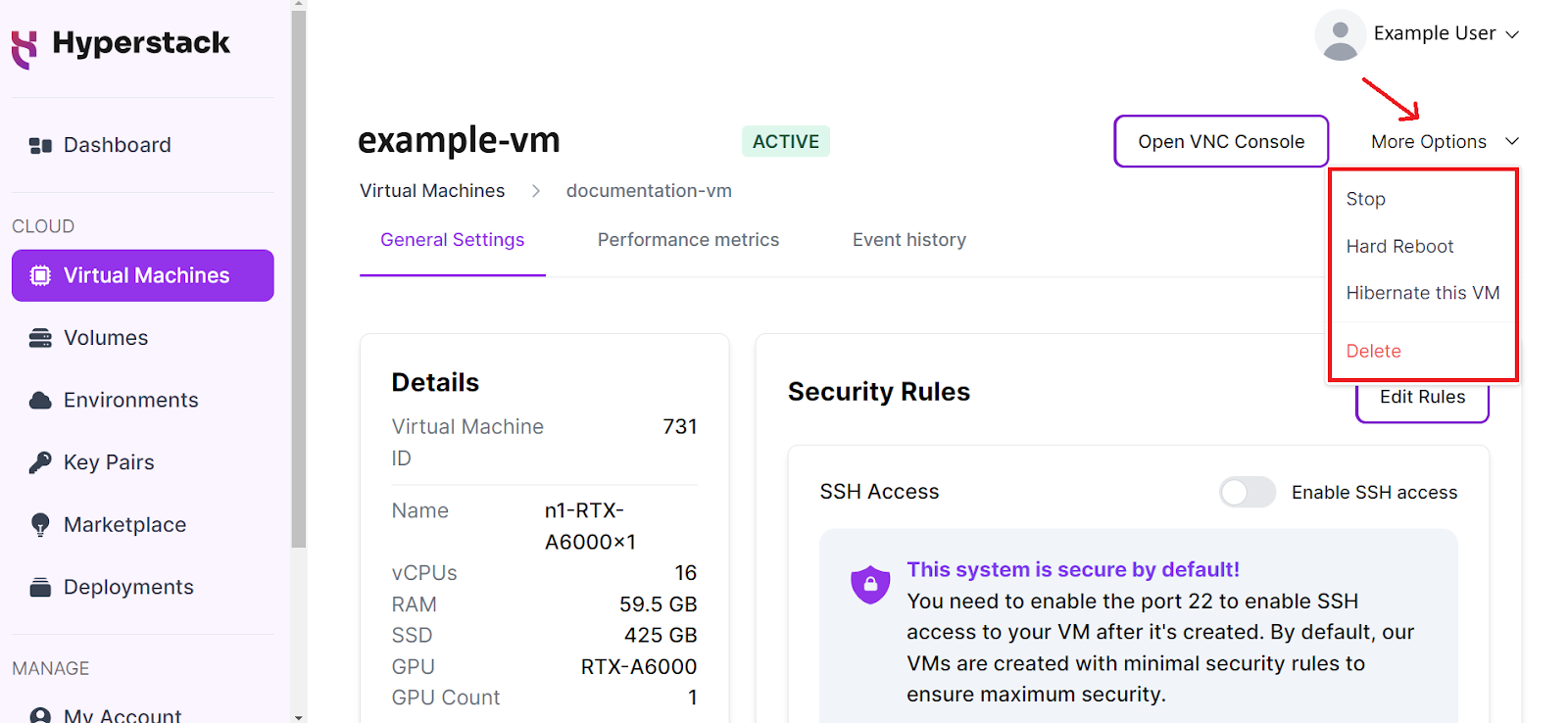

1. Hibernate VMs When Possible

One of the simplest and most effective ways to cut down on AI compute costs is to hibernate resources when they are not in use. Hyperstack's virtual machine hibernation feature allows you to pause the operations of virtual machines when they're idle. By doing so, you can save on unnecessary compute costs, ensuring that resources are only being consumed when necessary.

Hibernation not only reduces costs but also supports better resource management. For instance, you can schedule hibernation during non-peak hours or periods of low activity, so that your spending follows your usage patterns. This feature is particularly beneficial for businesses with fluctuating compute demands, as it allows them to align their resource consumption with actual usage needs.
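To make the saving concrete, here is a minimal Python sketch of the arithmetic. It is not based on Hyperstack's API or price list: the function name, the hourly rates and the usage pattern are all hypothetical. Whether any charges (for example for storage) continue while a VM is hibernated is not covered here, so the sketch exposes that as a parameter you can set from your own pricing.

```python
def monthly_cost(hourly_rate, active_hours_per_day, days=30,
                 hibernated_hourly_rate=0.0):
    """Estimate the monthly cost of a VM that is hibernated whenever it is idle.

    hibernated_hourly_rate is an assumption: set it to whatever residual
    charge, if any, applies to a hibernated VM on your plan.
    """
    idle_hours_per_day = 24 - active_hours_per_day
    active_cost = hourly_rate * active_hours_per_day * days
    idle_cost = hibernated_hourly_rate * idle_hours_per_day * days
    return active_cost + idle_cost


# Hypothetical figures for illustration only, not real pricing.
RATE = 2.00  # USD per hour while the VM is running

always_on = monthly_cost(RATE, active_hours_per_day=24)
with_hibernation = monthly_cost(RATE, active_hours_per_day=10,
                                hibernated_hourly_rate=0.05)
savings = always_on - with_hibernation

print(f"Always on:        ${always_on:,.2f}")
print(f"With hibernation: ${with_hibernation:,.2f}")
print(f"Estimated saving: ${savings:,.2f}")
```

With these made-up numbers, a VM that is only needed ten hours a day costs well under half as much when it is hibernated for the rest of the day.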

2. Select the Right GPU Flavour for Your Workload

Choosing the right GPU flavour for your AI workloads is crucial for optimising performance and cost-efficiency. Our LLM GPU selector tool helps you identify the most suitable GPU configurations based on your specific needs. Whether you're running inference tasks or training large models, selecting the appropriate GPU can significantly impact your compute costs.

Try our LLM GPU Selector to Find the Right GPU for Your Needs

Our GPU selector takes into account factors such as memory requirements, model type, training options and more to provide you with tailored recommendations. By using this tool, you can avoid paying for more GPU capacity than your workload needs and so maximise the cost-effectiveness of your AI initiatives.
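The kind of reasoning behind a memory-based recommendation can be sketched in a few lines of Python. This is a rough rule of thumb, not the logic of the GPU selector itself. It assumes that inference memory is roughly the parameter count times the bytes per parameter plus an overhead factor (1.2 here, an assumption), and that mixed-precision training with Adam needs about 16 bytes per parameter before activations. The GPU names in the catalogue are hypothetical placeholders.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

# Rule of thumb for mixed-precision training with Adam: fp16 weights and
# gradients plus fp32 master weights and two optimiser moments.
# Activations are NOT included, so treat the result as a lower bound.
TRAINING_BYTES_PER_PARAM = 16

# Hypothetical catalogue: name -> memory in GB.
GPU_MEMORY_GB = {"gpu-24gb": 24, "gpu-48gb": 48, "gpu-80gb": 80}


def estimate_memory_gb(params_billion, precision="fp16", training=False,
                       overhead=1.2):
    """Rough memory estimate in GB for a model with the given parameter count."""
    if training:
        return params_billion * TRAINING_BYTES_PER_PARAM
    return params_billion * BYTES_PER_PARAM[precision] * overhead


def pick_smallest_gpu(required_gb, gpus):
    """Return the smallest GPU that fits, or None if a single GPU is not enough."""
    candidates = [(mem, name) for name, mem in gpus.items() if mem >= required_gb]
    return min(candidates)[1] if candidates else None


inference_gb = estimate_memory_gb(7, precision="fp16")
training_gb = estimate_memory_gb(13, training=True)

print(f"7B inference: ~{inference_gb:.1f} GB ->",
      pick_smallest_gpu(inference_gb, GPU_MEMORY_GB))
print(f"13B training: ~{training_gb:.0f} GB ->",
      pick_smallest_gpu(training_gb, GPU_MEMORY_GB))
```

A result of `None` means the estimate exceeds every single GPU in the catalogue, which points to a multi-GPU configuration or a memory-saving technique rather than a bigger single card.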

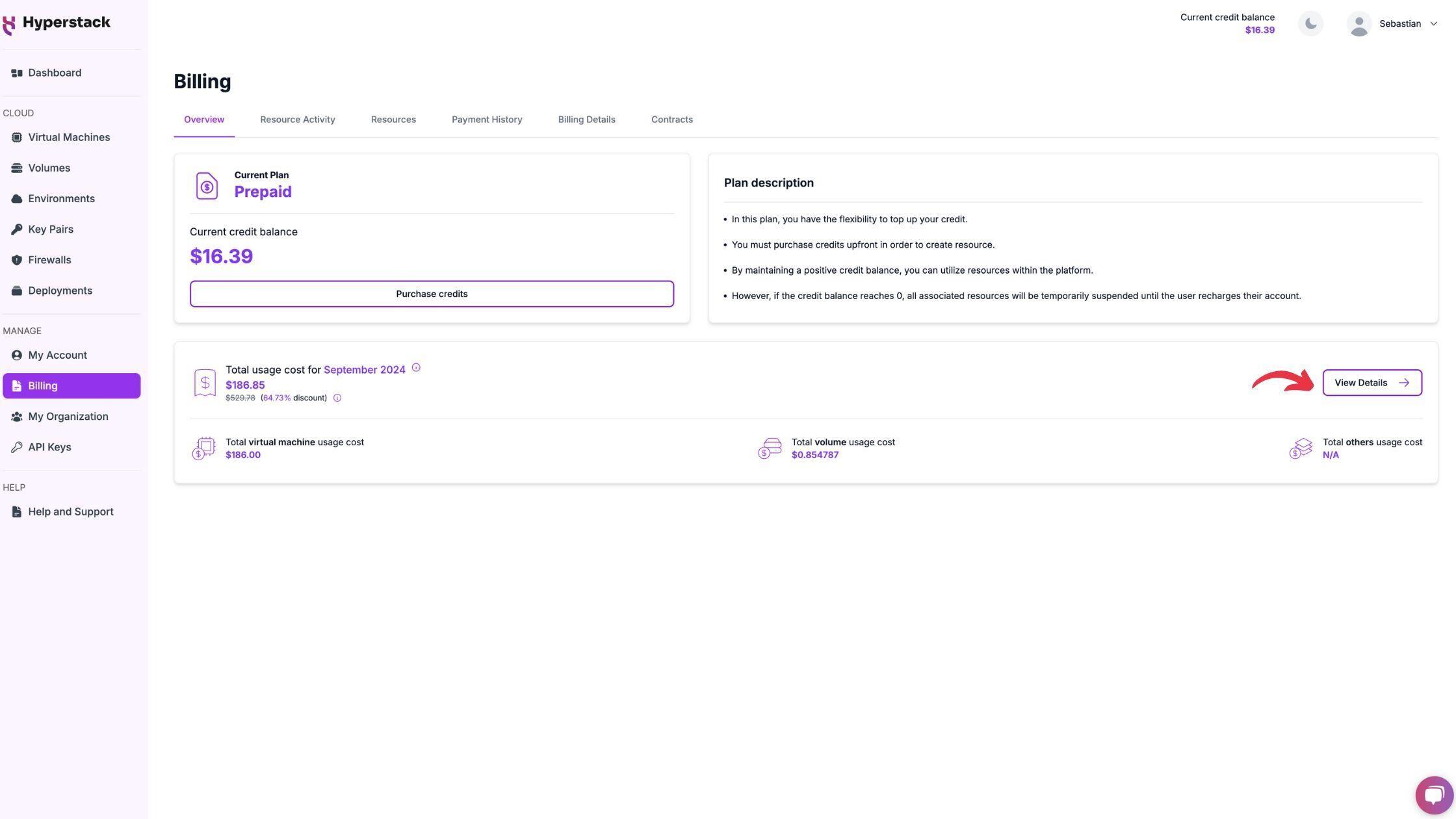

3. Take Advantage of Prepaid Credits

Hyperstack provides flexible prepaid credit options that allow you to purchase compute resources upfront. By default, all customer accounts follow a prepaid model where users pay in advance based on their resource requirements. This approach helps customers manage costs more effectively by aligning payments with their projected usage, ensuring they have the necessary compute power when they need it without unexpected charges.
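When planning a prepaid top-up, it helps to estimate how long a credit balance will last at your expected usage. The Python sketch below shows that calculation. The function names and all figures are hypothetical and are not taken from Hyperstack's billing system.

```python
def runway_days(credit_balance, hourly_burn, active_hours_per_day=24):
    """Days until a prepaid balance is used up at a steady usage pattern."""
    daily_burn = hourly_burn * active_hours_per_day
    if daily_burn <= 0:
        return float("inf")  # nothing is running, so the balance never runs out
    return credit_balance / daily_burn


def credits_needed(hourly_burn, active_hours_per_day, days):
    """Credits to purchase upfront to cover a planned period of usage."""
    return hourly_burn * active_hours_per_day * days


# Hypothetical figures for illustration only.
balance = 500.00   # USD of prepaid credit
burn = 2.50        # USD per hour across all running resources

print(f"Runway: {runway_days(balance, burn, active_hours_per_day=8):.1f} days")
print(f"Top-up for 30 days: ${credits_needed(burn, 8, 30):,.2f}")
```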

4. Monitor Spending with the New Billing UI

Keeping track of your AI compute expenses is essential for identifying cost-saving opportunities. With Hyperstack's new Usage Cost Summary feature, you can now get a clear overview of your monthly usage costs across virtual machines, volumes, and other resources. This summary, found under the Billing Overview tab, provides a quick snapshot of your current spending, helping you stay on top of your budget.

For more detailed insights, a new View Details button allows users to seamlessly navigate to the Resource Activity tab. Here, you'll find graphical representations of usage costs over time, making it easier to track trends and pinpoint which resources are consuming the most budget. Users can adjust the date range to view costs over a specified period, ensuring flexible analysis.

The Resource Activity tab also breaks down the costs of virtual machines by sub-resources, including GPU, CPU, RAM, root disk, ephemeral storage, and public IP address. This granular view allows you to better understand the specific components driving your compute costs, providing transparency and control over your cloud spending. By leveraging these insights, users can make informed decisions to optimise resource usage and reduce overall costs.
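If you copy these figures into your own tooling, a short script can rank the components by cost. The Python sketch below assumes a hypothetical record format (one dictionary per VM and sub-resource with a `cost` field). It does not reflect any real Hyperstack export or API, and the numbers are invented.

```python
from collections import defaultdict


def cost_by_component(records):
    """Sum costs per sub-resource and return (name, cost, share), largest first."""
    totals = defaultdict(float)
    for record in records:
        totals[record["component"]] += record["cost"]
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [
        (name, cost, cost / grand_total if grand_total else 0.0)
        for name, cost in ranked
    ]


# Hypothetical monthly figures for two VMs, for illustration only.
records = [
    {"vm": "train-1", "component": "GPU", "cost": 90.0},
    {"vm": "train-1", "component": "RAM", "cost": 8.0},
    {"vm": "train-1", "component": "ephemeral storage", "cost": 6.0},
    {"vm": "dev-1", "component": "GPU", "cost": 30.0},
    {"vm": "dev-1", "component": "CPU", "cost": 10.0},
    {"vm": "dev-1", "component": "root disk", "cost": 4.0},
    {"vm": "dev-1", "component": "public IP", "cost": 2.0},
]

breakdown = cost_by_component(records)
for name, cost, share in breakdown:
    print(f"{name:<18}{cost:>8.2f}{share:>8.1%}")
```

Sorting by cost puts the component that dominates the bill at the top, which is usually where optimisation effort pays off first.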

5. Use CPUs for Quick Testing

While GPUs are essential for intensive AI tasks, not all operations require such high-performance computing resources. Using CPUs instead of GPUs can result in substantial cost savings for quick testing and prototyping. Hyperstack provides the flexibility to switch between CPU and GPU instances, allowing you to choose the most cost-effective option based on the nature of the task.

By utilising CPUs for preliminary testing and development, you can minimise unnecessary GPU usage and associated costs. Once your models and algorithms are refined, you can then switch to GPUs for final training and deployment. This approach ensures that you're leveraging the right resources at the right time, optimising both performance and cost-efficiency.
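As a rough illustration of this staged approach, the Python sketch below compares a project that prototypes on a CPU instance and then trains on a GPU with one that uses the GPU throughout. The function name, hourly rates and hour counts are hypothetical, and the sketch assumes prototyping takes the same number of hours on either instance type, which will not hold for every workload.

```python
def project_cost(prototype_hours, training_hours, cpu_rate, gpu_rate,
                 prototype_on_cpu=True):
    """Total cost when prototyping runs on CPU or GPU and training runs on GPU."""
    prototype_rate = cpu_rate if prototype_on_cpu else gpu_rate
    return prototype_hours * prototype_rate + training_hours * gpu_rate


# Hypothetical figures for illustration only, not real pricing.
CPU_RATE = 0.25  # USD per hour
GPU_RATE = 2.00  # USD per hour

staged = project_cost(40, 20, CPU_RATE, GPU_RATE)
gpu_only = project_cost(40, 20, CPU_RATE, GPU_RATE, prototype_on_cpu=False)

print(f"CPU prototyping, GPU training: ${staged:.2f}")
print(f"GPU for everything:            ${gpu_only:.2f}")
print(f"Difference:                    ${gpu_only - staged:.2f}")
```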

Conclusion

At Hyperstack, cost optimisation doesn’t mean sacrificing power. It means making intelligent, data-driven decisions so that businesses can leverage AI to its fullest potential without overrunning their budgets. With these strategies, you can drive innovation while staying within your financial goals and supporting the long-term success of your AI projects. Ready to take control of your AI compute costs? Start exploring Hyperstack's cost-saving features today.

FAQs

How does hibernation help reduce AI compute costs?

Hibernation pauses virtual machines that are not in use, so you only pay for compute resources when you need them.

Can I track my AI compute costs with Hyperstack?

Yes, Hyperstack’s Billing UI provides detailed insights into your resource usage and costs.

How does selecting the right GPU help reduce AI compute costs?

Choosing the right GPU reduces AI compute costs by optimising resource allocation, minimising idle time, and speeding up task completion. This leads to lower usage charges and more efficient workflows. Check out our guide to choosing the right GPU for LLM workloads.
